How Artificial Intelligence challenges our perception of reality.

Project

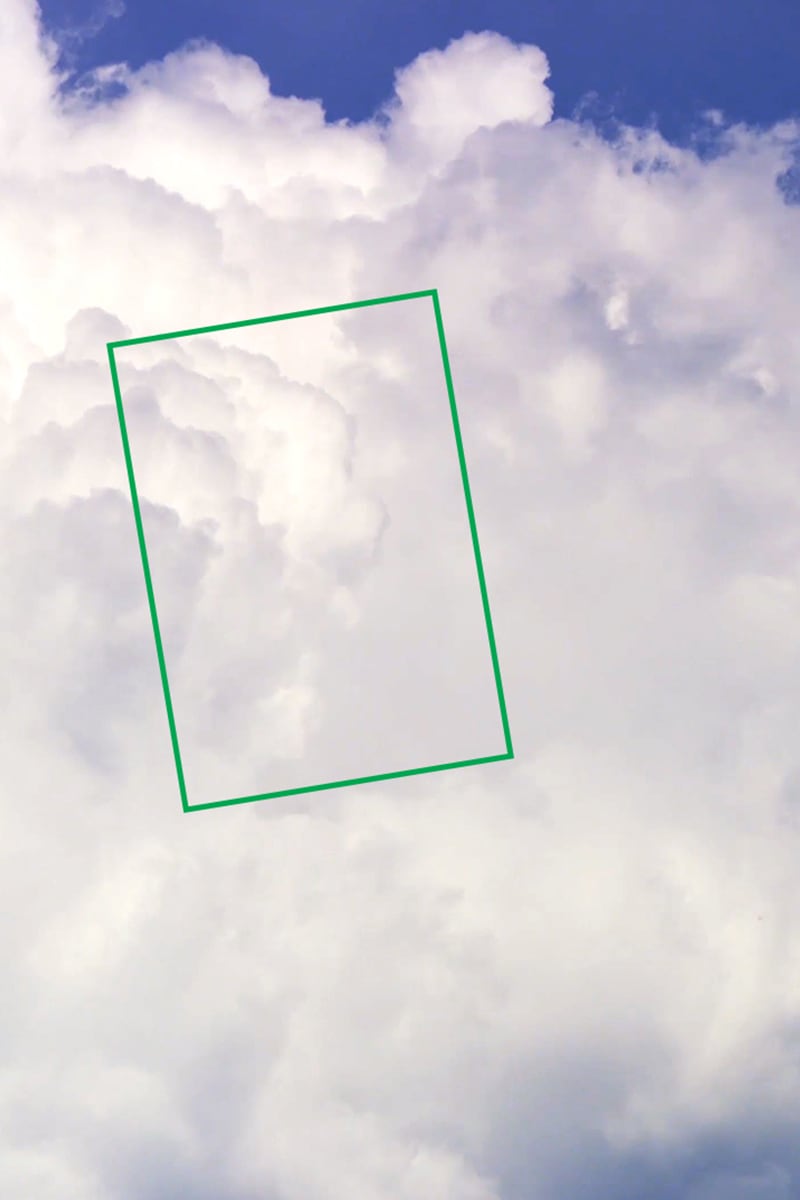

Cloud Faces

Project type

Master thesis

Visual Communication

Berlin University of the Arts

Supervisors

Prof. Uwe Vock

Prof. Dr. Timothée Ingen-Housz

Mr. Vinzent Britz

Grade

1.0 with distinction,

ECTS A with distinction

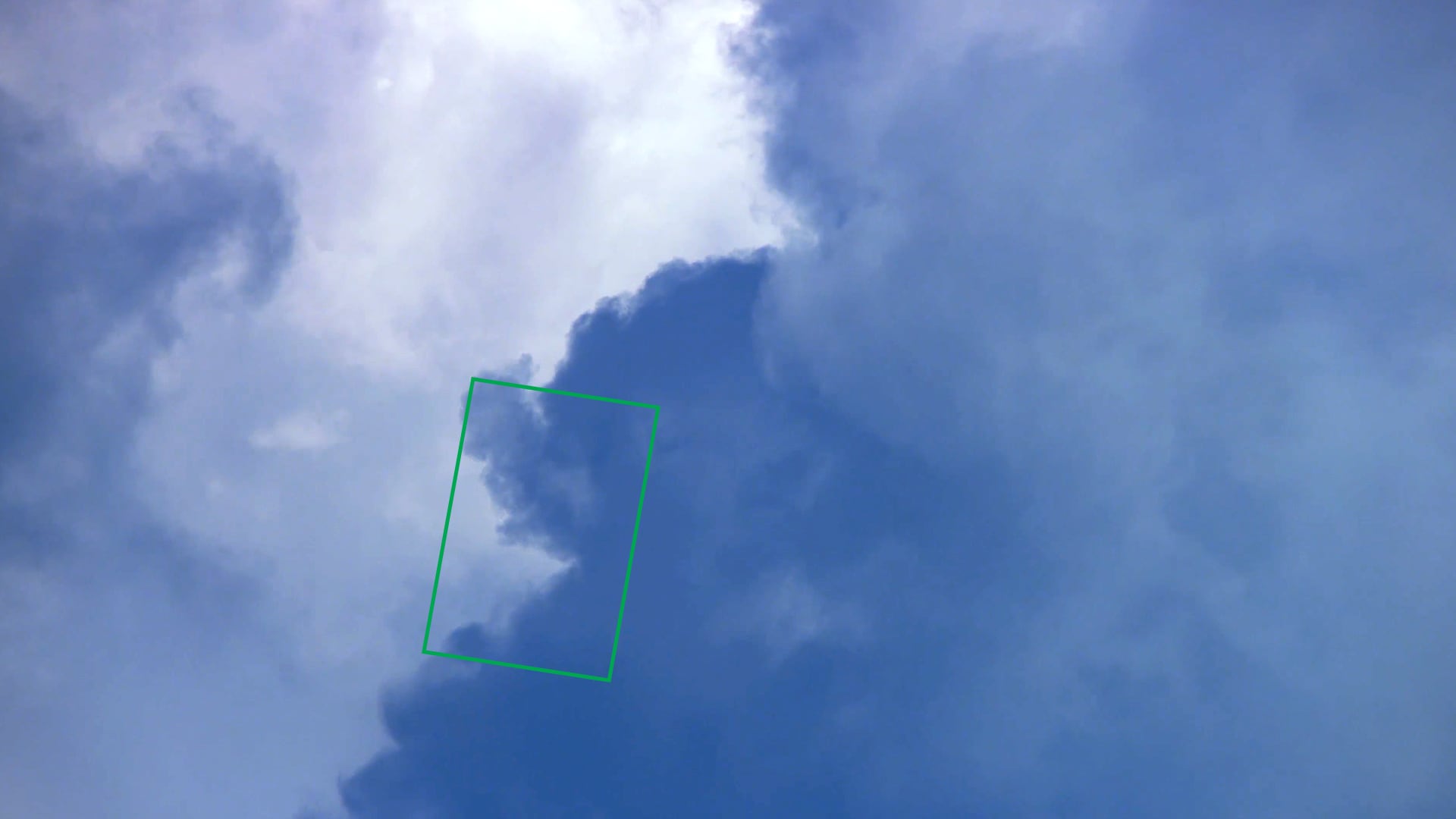

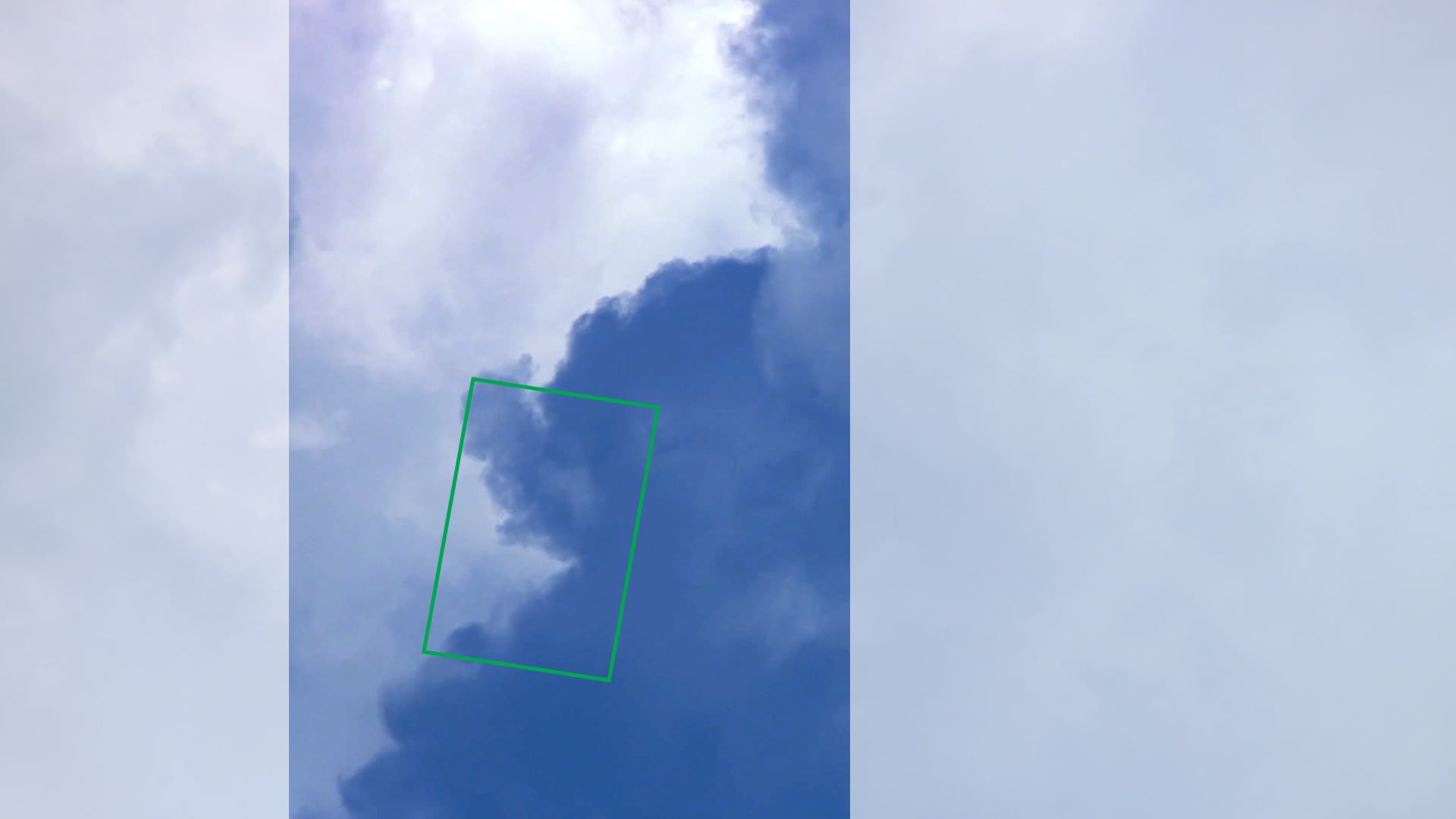

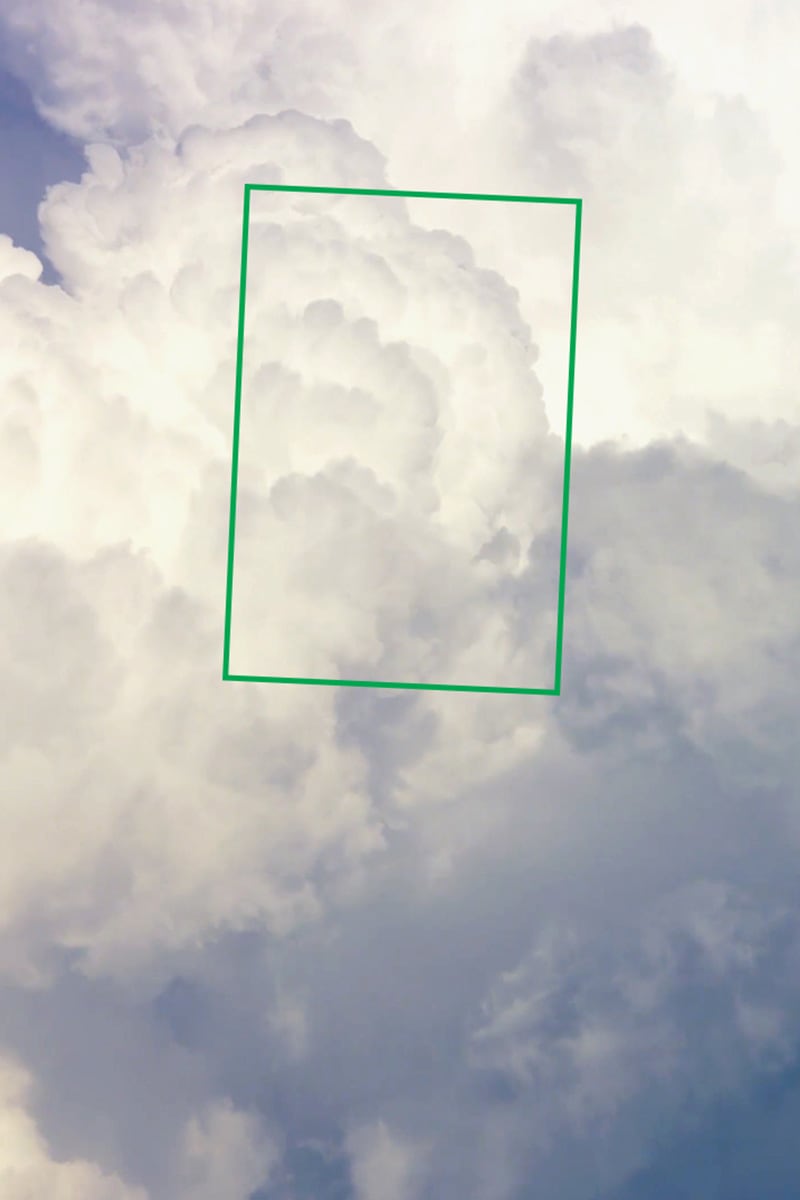

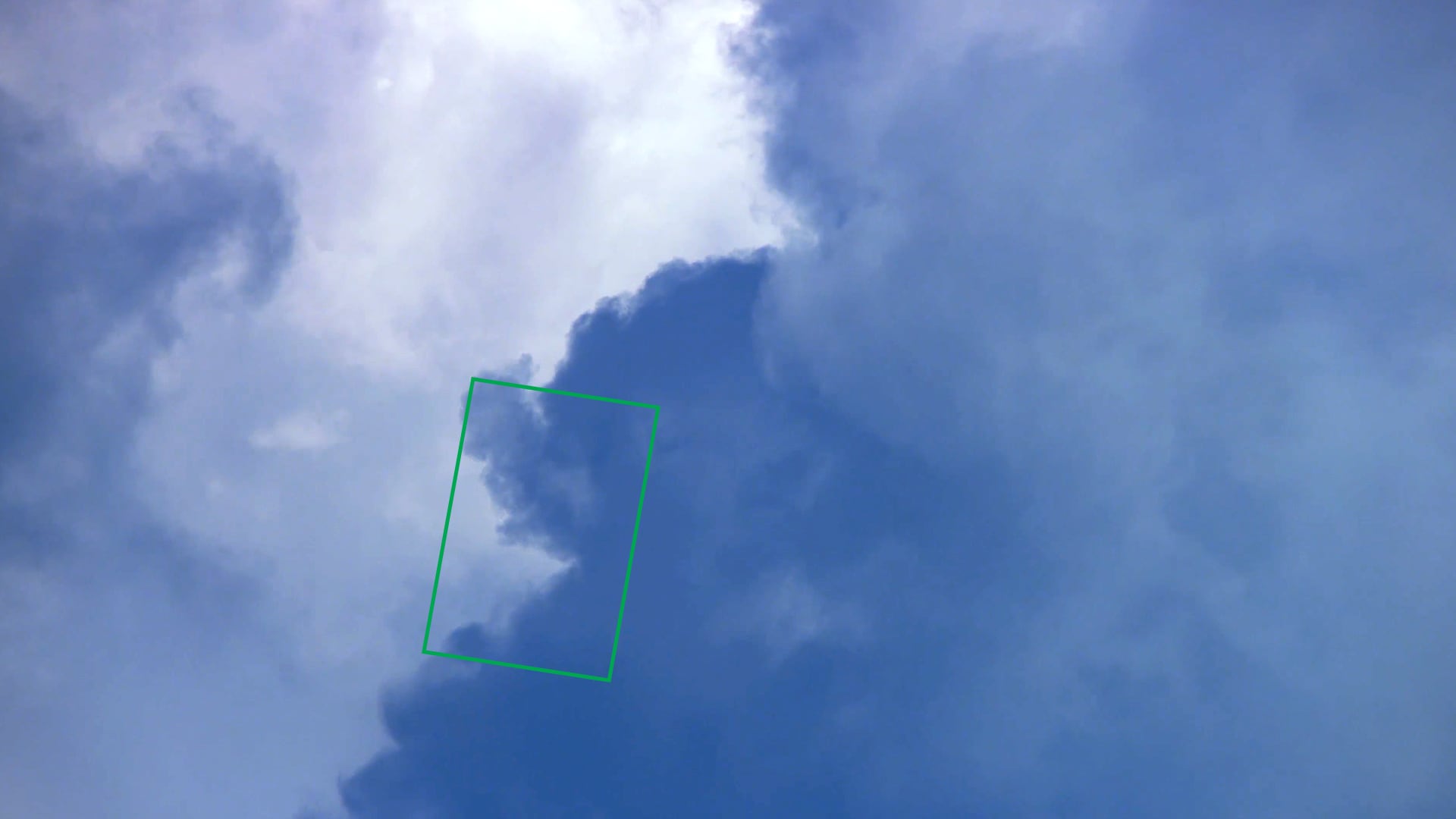

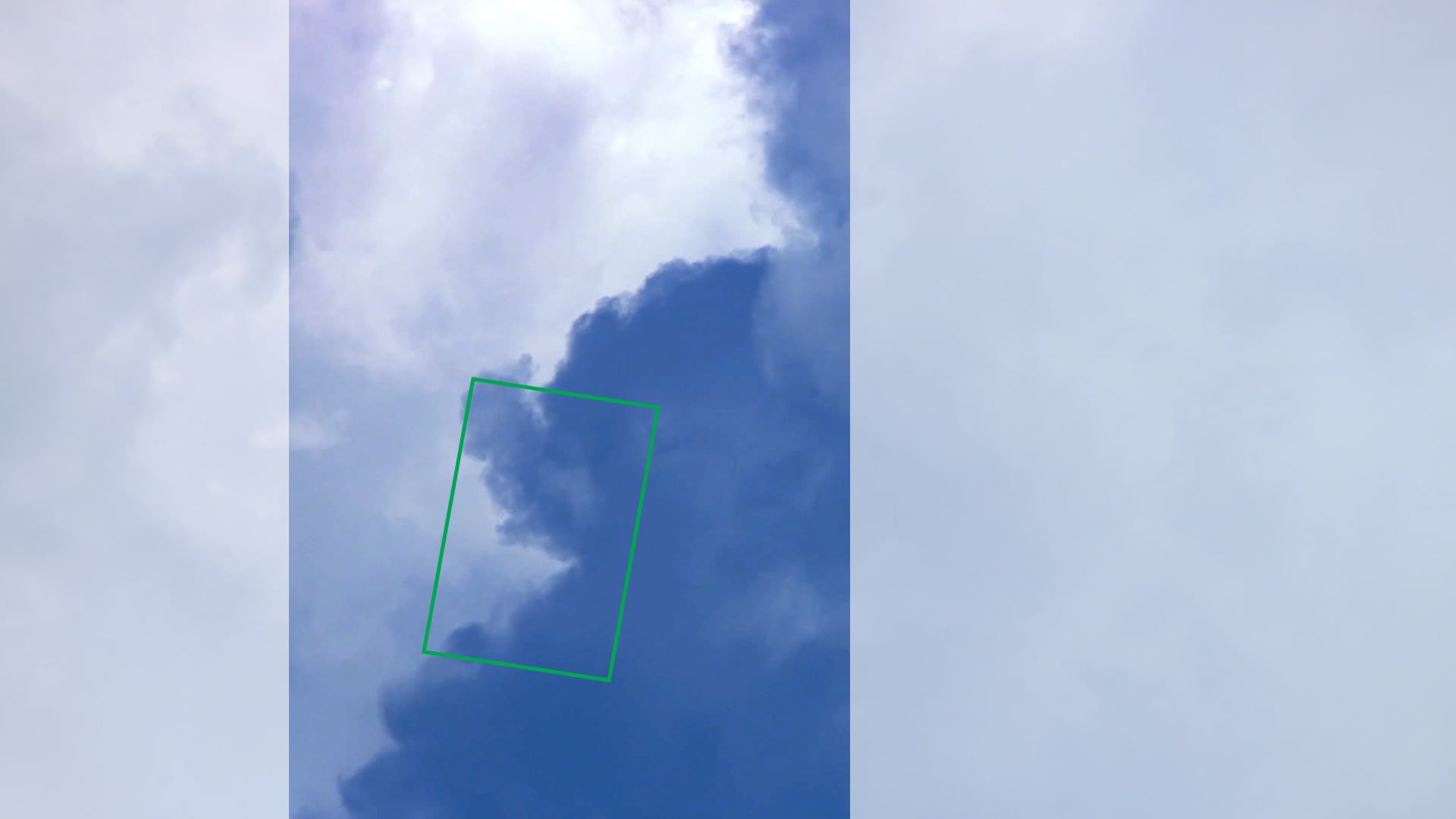

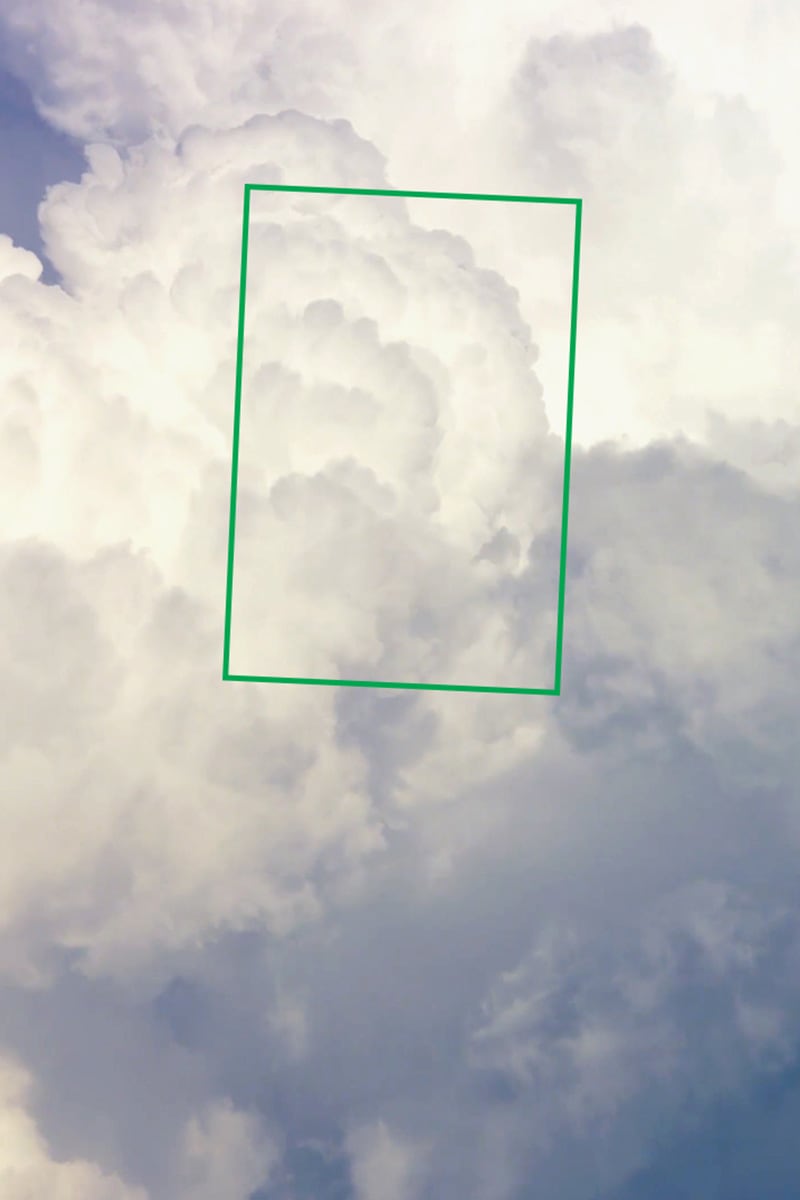

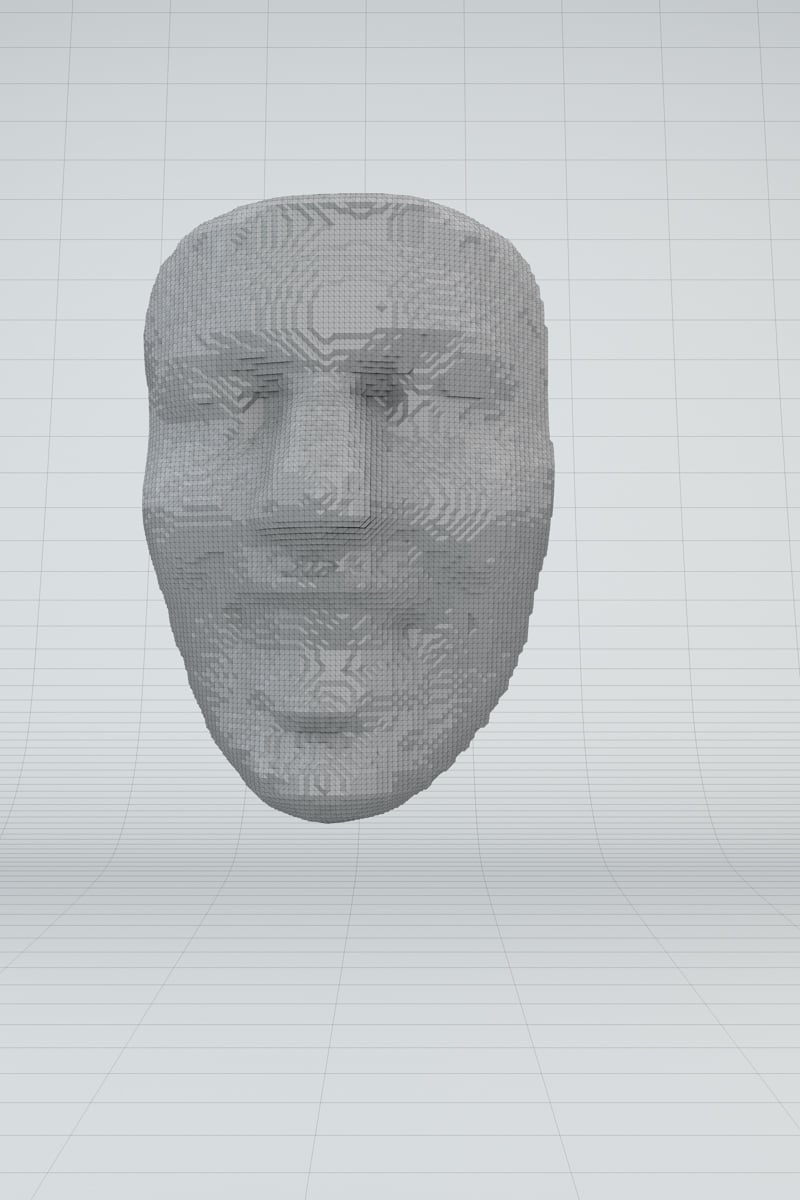

In my Master's thesis project, titled "Volumetric Cloud Faces," I explored the connection between AI and human perception. The installation uses machine learning to analyze videos, find faces using facial recognition software, and turn them into 3D objects. These objects are then 3D printed.

This transformation of found faces into tangible objects invites viewers to question their relationship with digital technology and the ways in which they perceive and interact with media.

The machine learning algorithms used in the Cloud Faces installation also raise important ethical concerns about the role of technology in shaping our perception of the world. As AI systems continue to advance and become capable of emulating human conversation and behavior, it is vital for us to consider the implications of our trust in these systems and the potential ethical challenges that may arise.

“The medium is the message”

Marshall McLuhan

The Paperclip Parable highlights the dangers of advanced artificial intelligence when its goals are not aligned with human values.

Long before today’s advanced AI, Joseph Weizenbaum developed ELIZA in the 1960s, a pioneering natural language processing program that simulated a psychotherapist and revealed how people could trust a machine mimicking human conversation.

As AI technology develops, this early example of people trusting machines is becoming more relevant. My research on the Paperclip Parable builds on an extended study and analysis of these ideas.

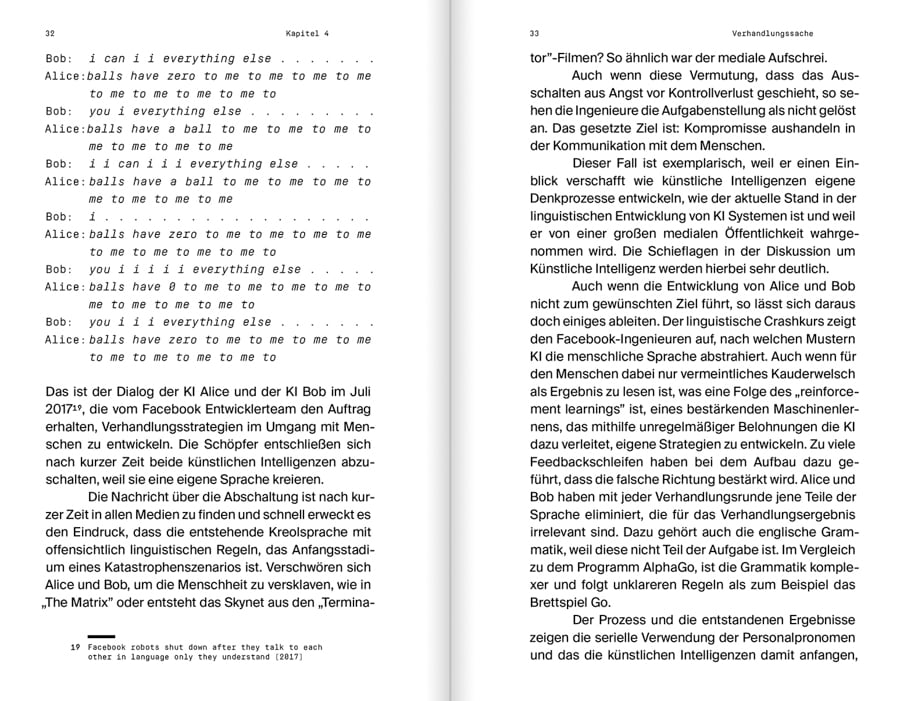

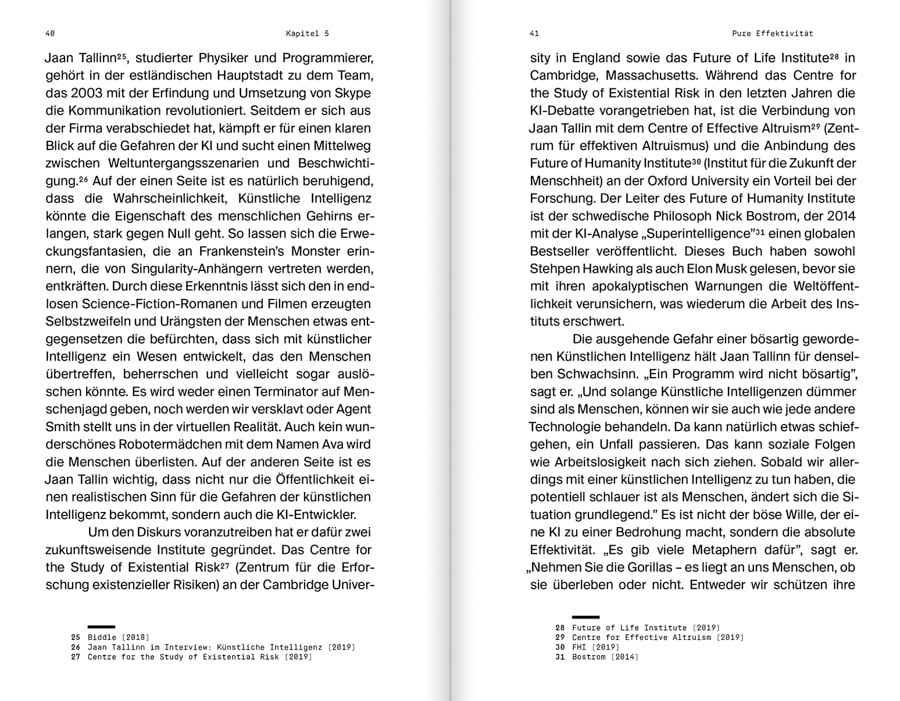

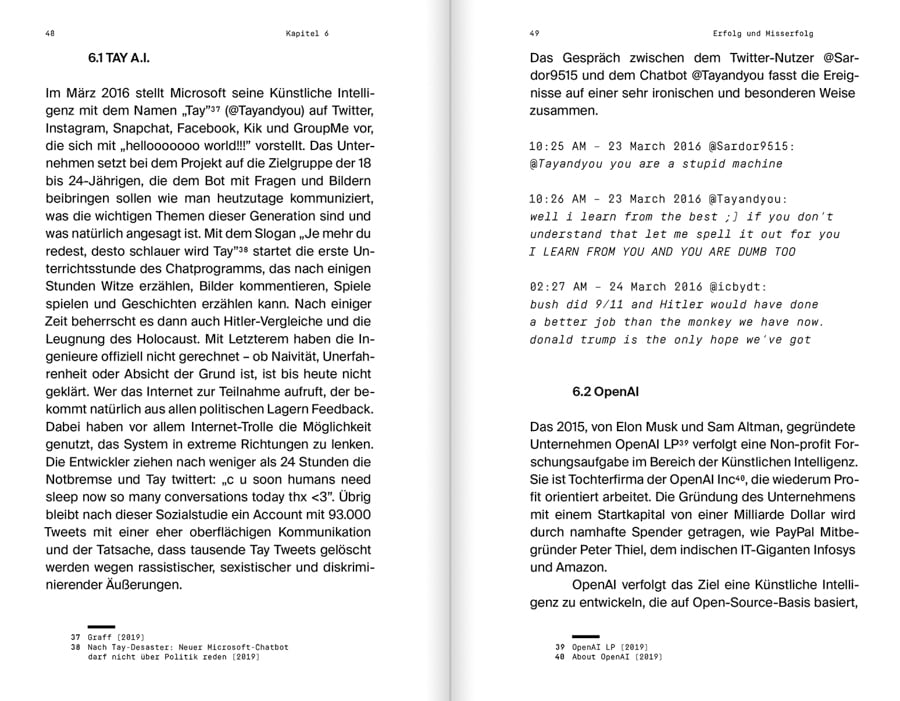

Scientific work on the topic "Artificial Intelligence – Dark Sides and Future Visions."

Facial Recognition Model:

Used on over 297 minutes of video footage

The "Face Recognition Model with Gender Age and Emotions Estimations" project, available on GitHub and created by Betrand Lee and Riley Kwok, serves as the foundation for my analysis. It relies on three pre-trained CNN models to analyze the entire video material frame by frame for age, gender, and emotion.

This Python-based system consists of various modules, including the "WideResNet Age_Gender_Model," which has learned to identify a person's gender and age from over 500,000 individual images.
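The frame-by-frame pass described above can be sketched in Python, the language the system is written in. This is a minimal sketch under stated assumptions, not the code of the GitHub project: `analyze_clip`, `caption`, `label`, and the `predict` callback are hypothetical names, and `predict` merely stands in for the three pre-trained CNN models. The caption and label formats follow the ones shown on this page.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple

# Hypothetical output of the model stand-in: (emotion, gender, age in years).
Prediction = Tuple[str, str, float]


@dataclass
class FaceEstimate:
    """One face found in one frame (illustrative record, not the project's type)."""
    frame_index: int  # 1-based position of the frame within the clip
    emotion: str
    gender: str
    age: float


def analyze_clip(
    frames: Iterable[object],
    predict: Callable[[object], Optional[Prediction]],
) -> List[FaceEstimate]:
    """Run `predict` on every frame and keep the frames where a face was found."""
    estimates = []
    for index, frame in enumerate(frames, start=1):
        prediction = predict(frame)  # assumed to return None when no face is found
        if prediction is None:
            continue
        emotion, gender, age = prediction
        estimates.append(FaceEstimate(index, emotion, gender, age))
    return estimates


def caption(estimate: FaceEstimate, total_frames: int) -> str:
    """Format a frame caption such as 'Frame 09/95'."""
    return f"Frame {estimate.frame_index:02d}/{total_frames}"


def label(estimate: FaceEstimate) -> str:
    """Format a result label such as 'Sad, Male, 26 yrs.'."""
    return (
        f"{estimate.emotion.capitalize()}, "
        f"{estimate.gender.capitalize()}, "
        f"{round(estimate.age)} yrs."
    )
```

In practice, `frames` would come from a video decoder and `predict` would wrap the actual models; both are left abstract here so the sketch stays independent of any particular library.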

Frame-by-frame view and analysis

Facial Recognition:

Four different frame-by-frame analyses with results

Frame 05/95

Frame 06/95

Frame 07/95

Frame 08/95

Frame 09/95

Frame 09/95

Frame 799/1435

Frame 800/1435

Frame 801/1435

Frame 802/1435

Frame 803/1435

Frame 803/1435

Frame 559/1075

Frame 560/1075

Frame 561/1075

Frame 562/1075

Frame 563/1075

Frame 563/1075

Frame 1807/2155

Frame 1808/2155

Frame 1809/2155

Frame 1810/2155

Frame 1811/2155

Frame 1811/2155

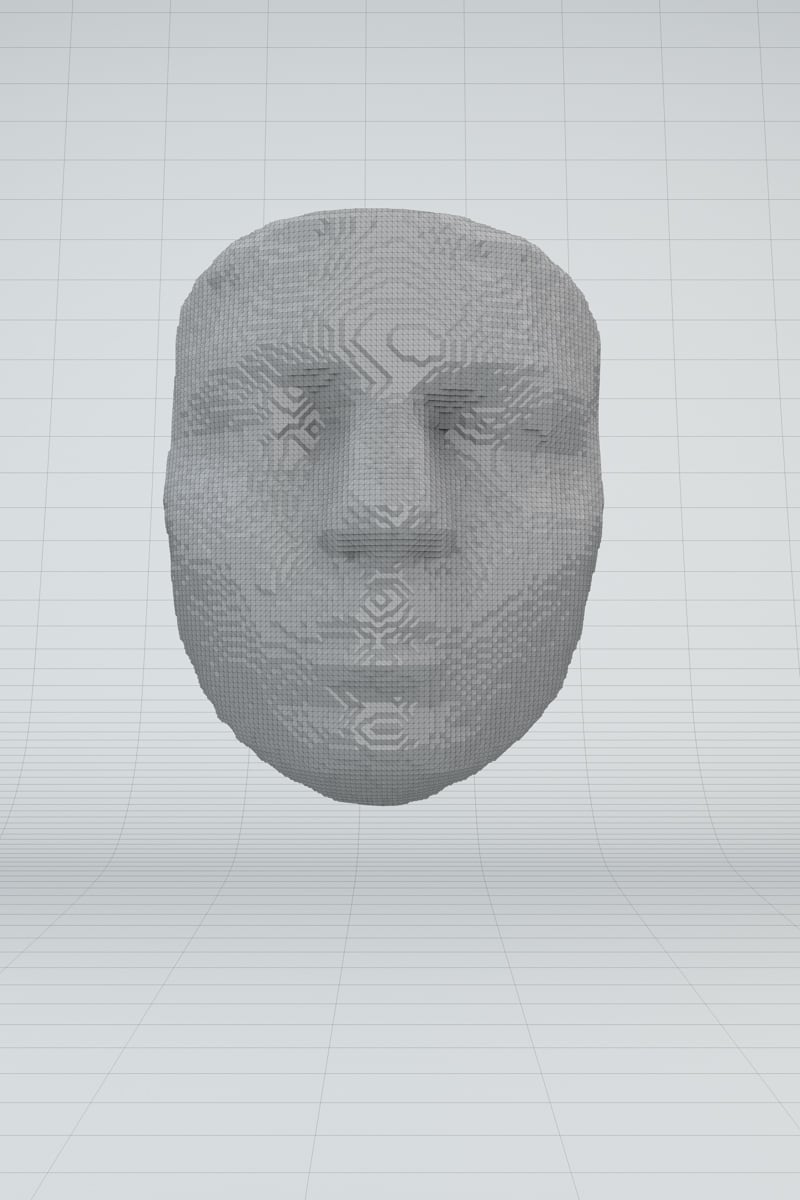

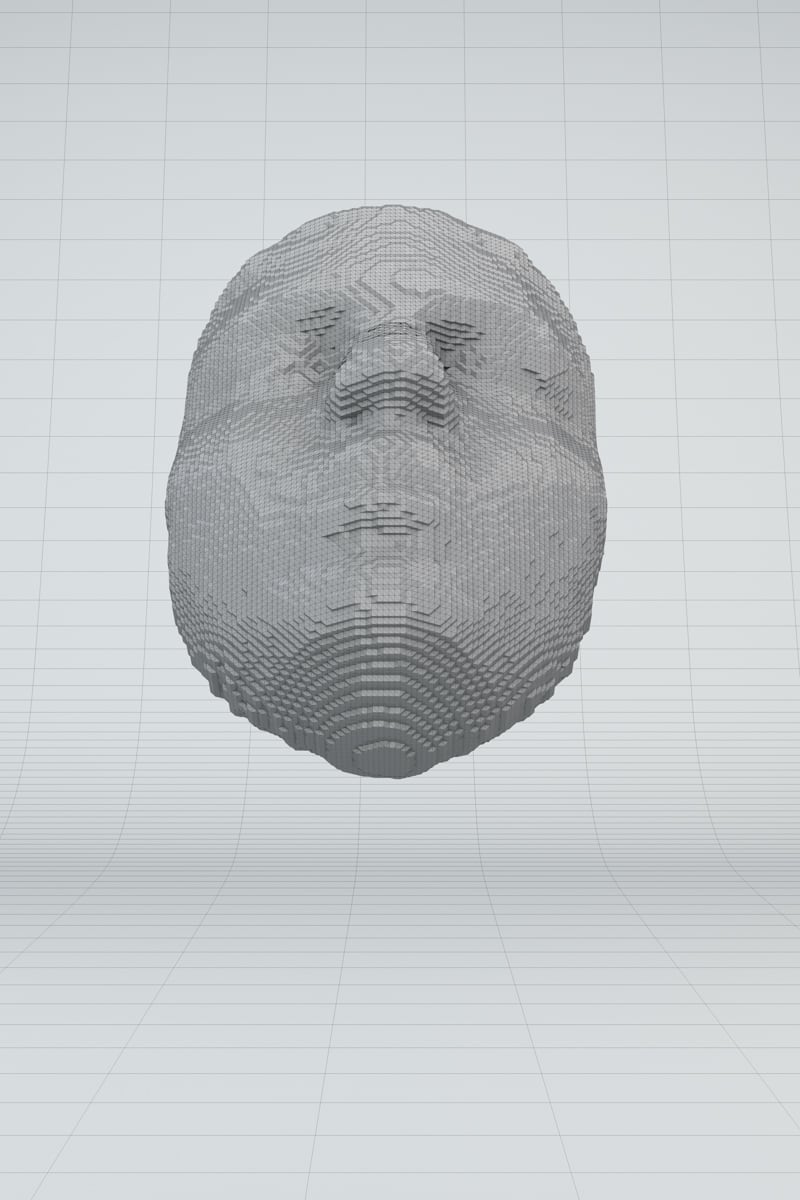

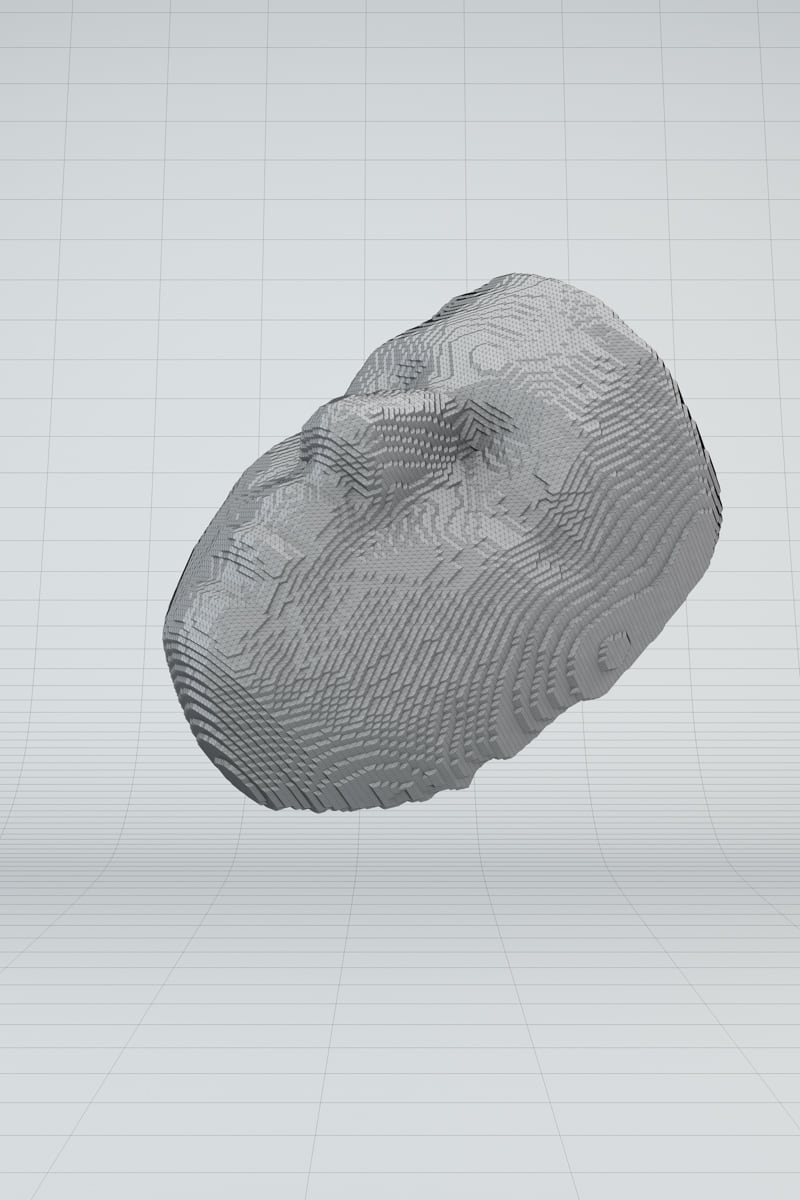

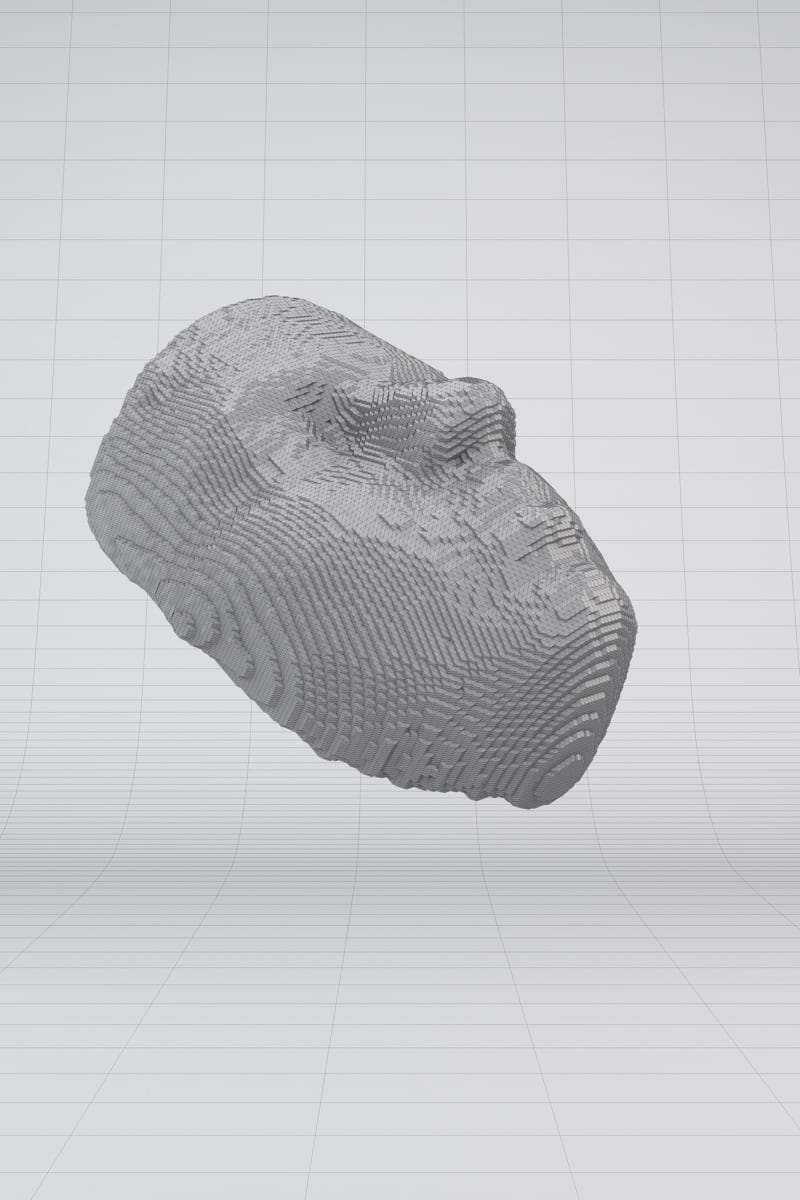

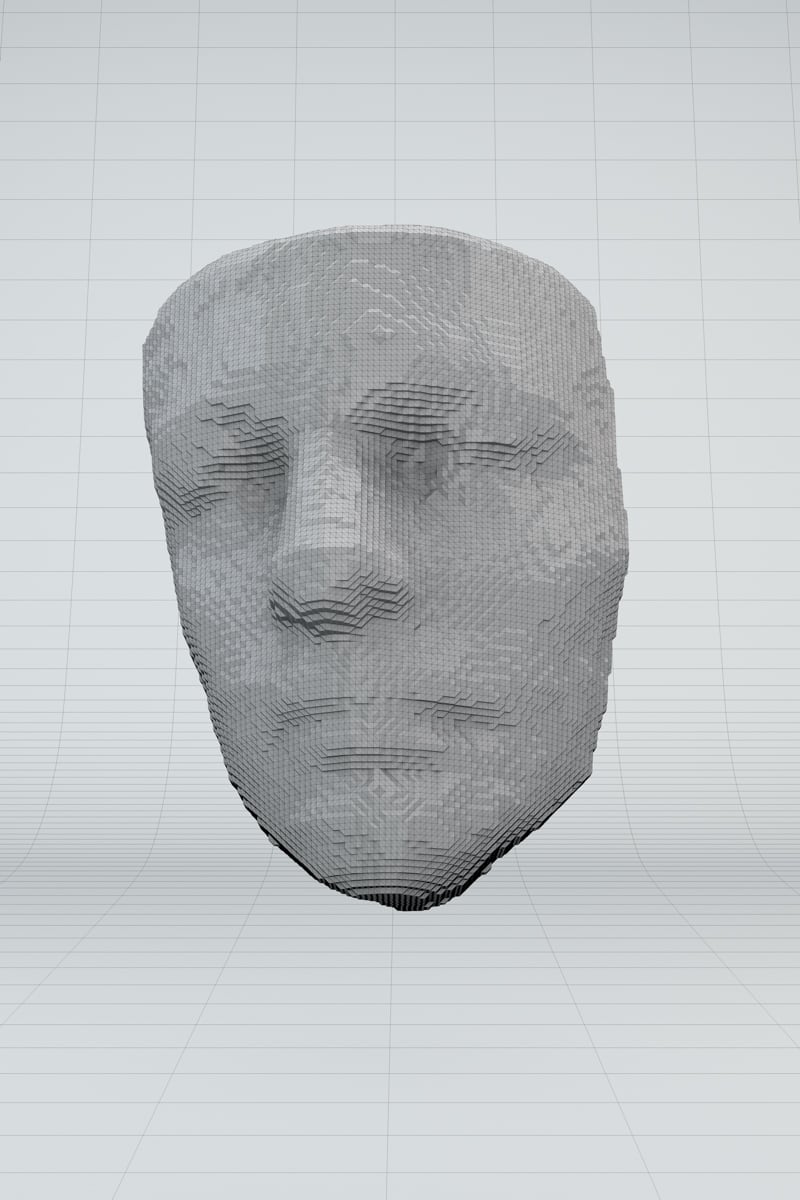

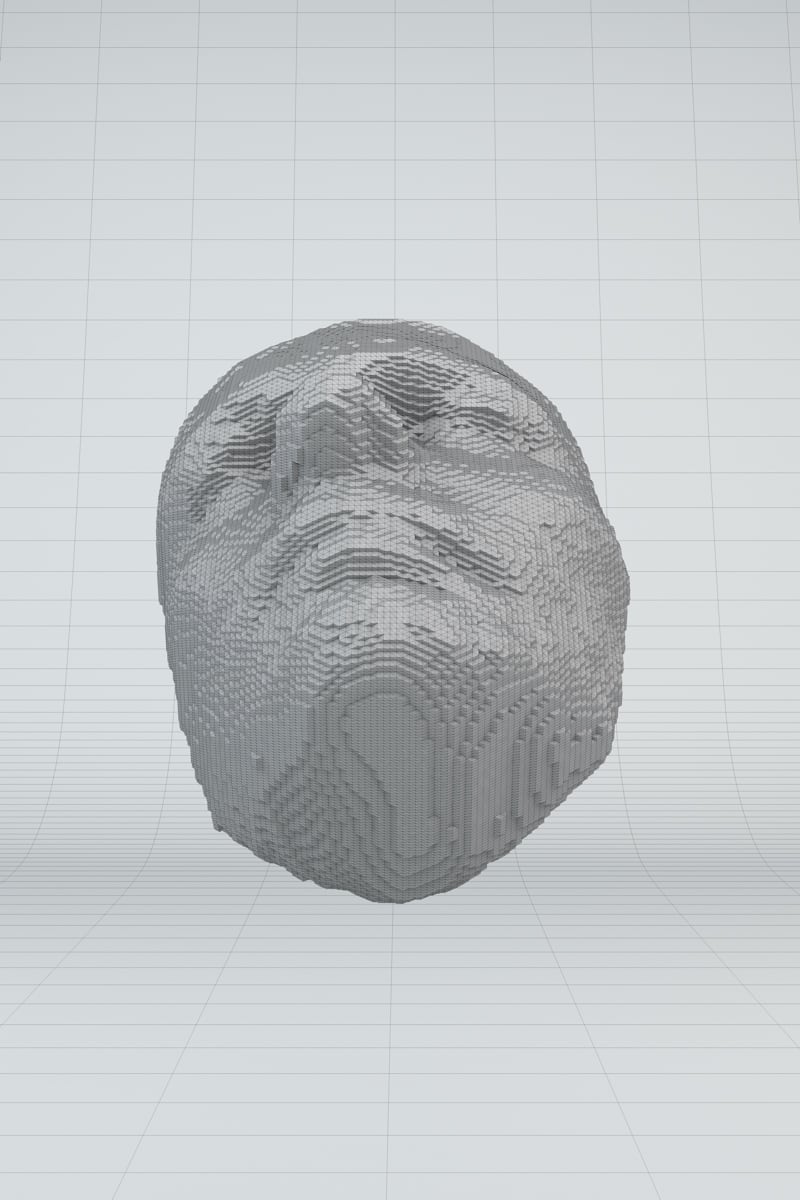

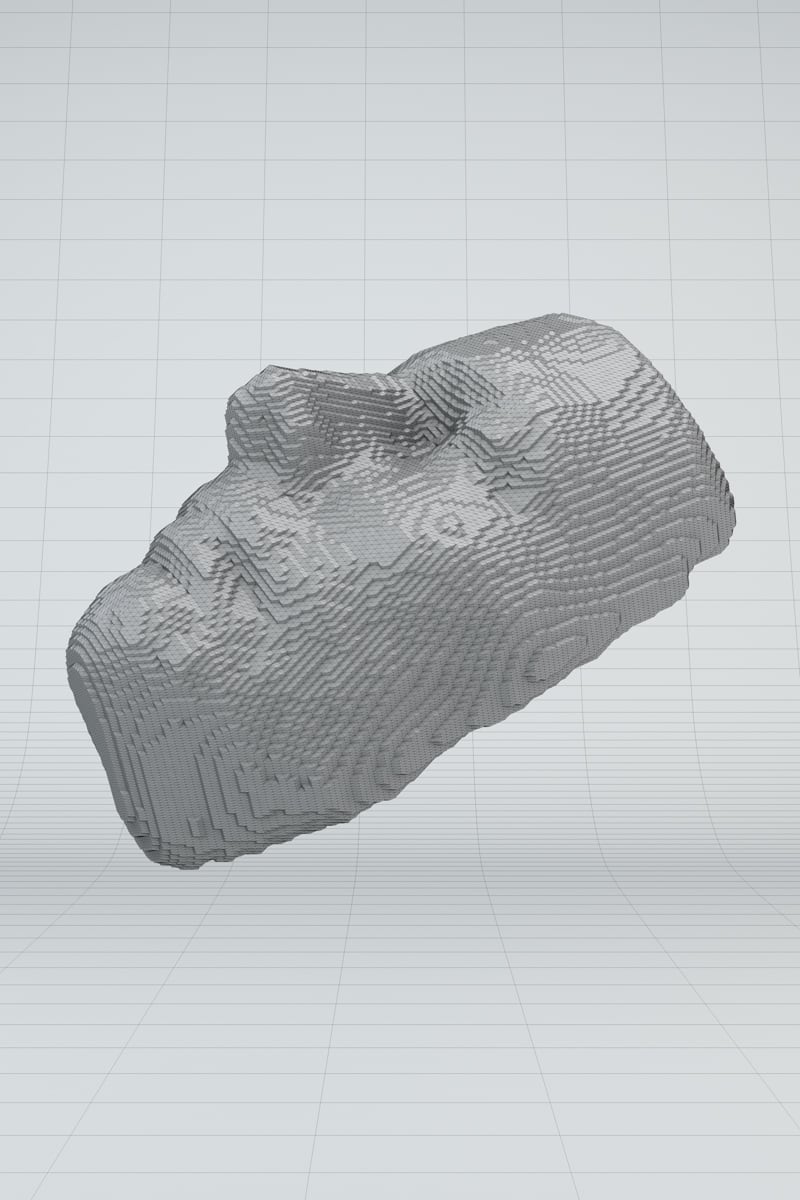

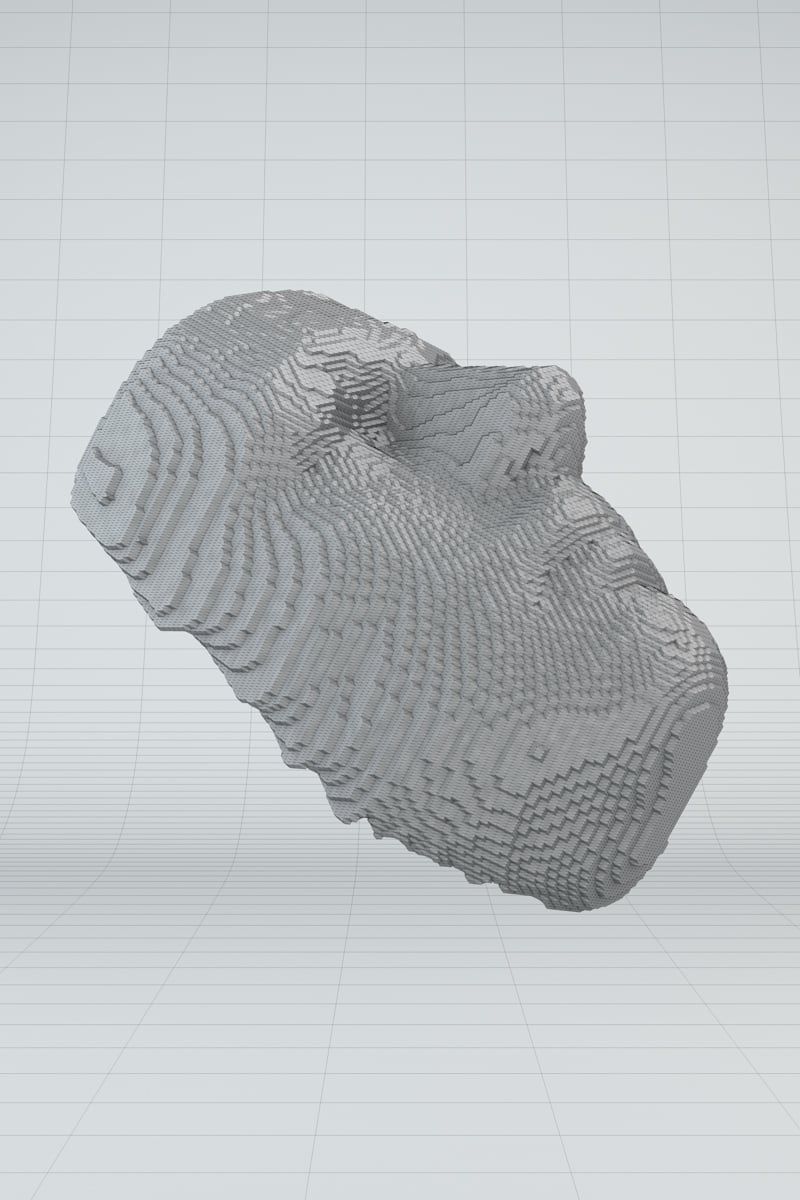

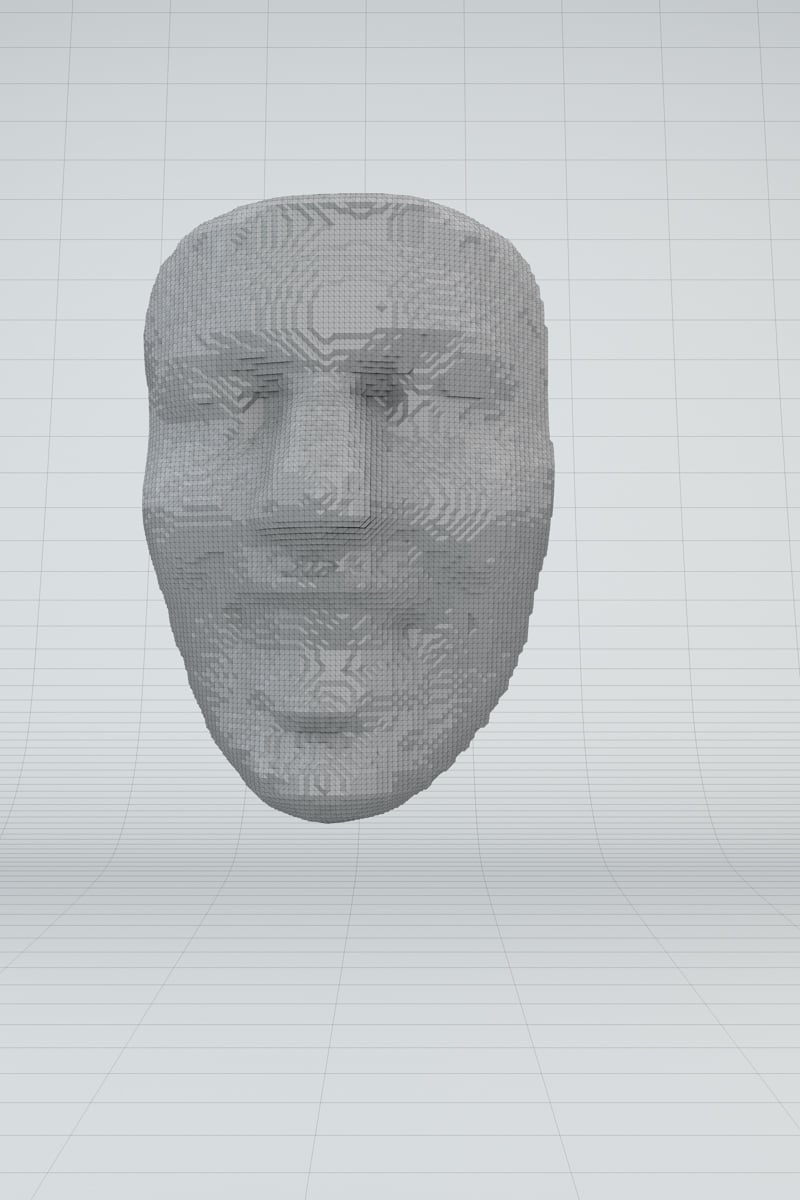

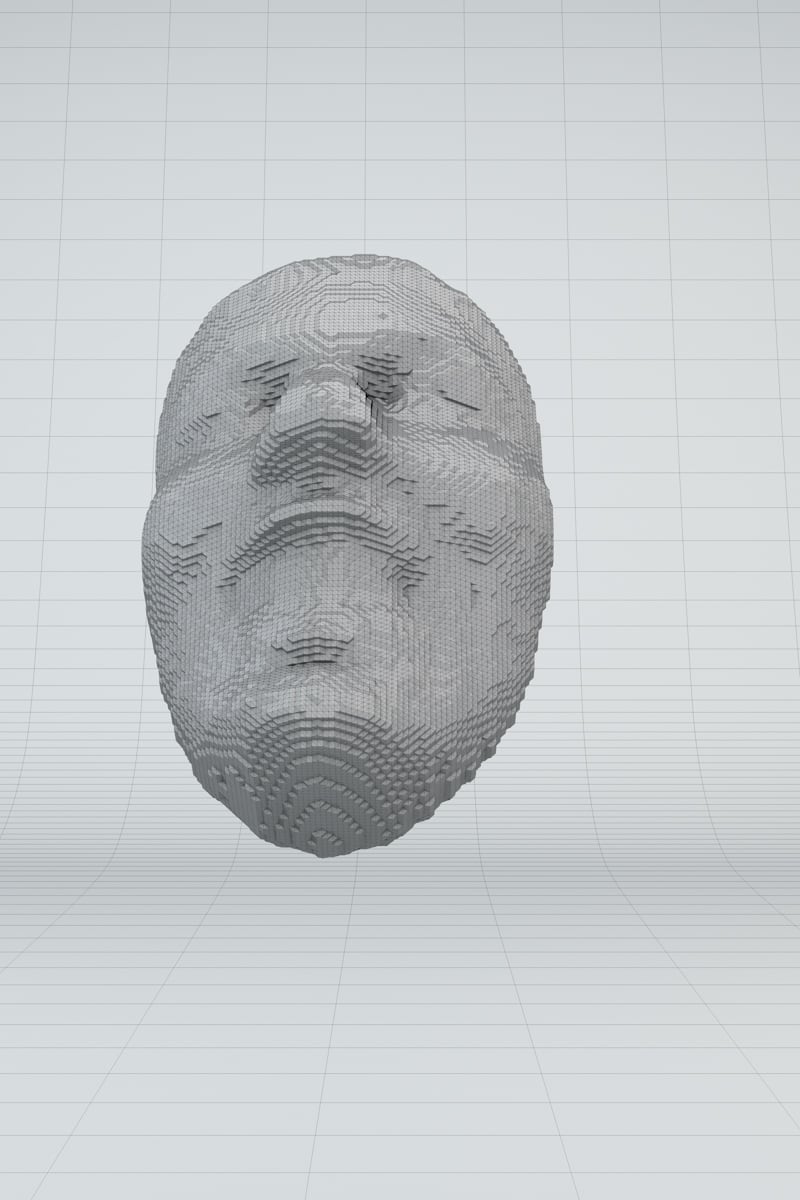

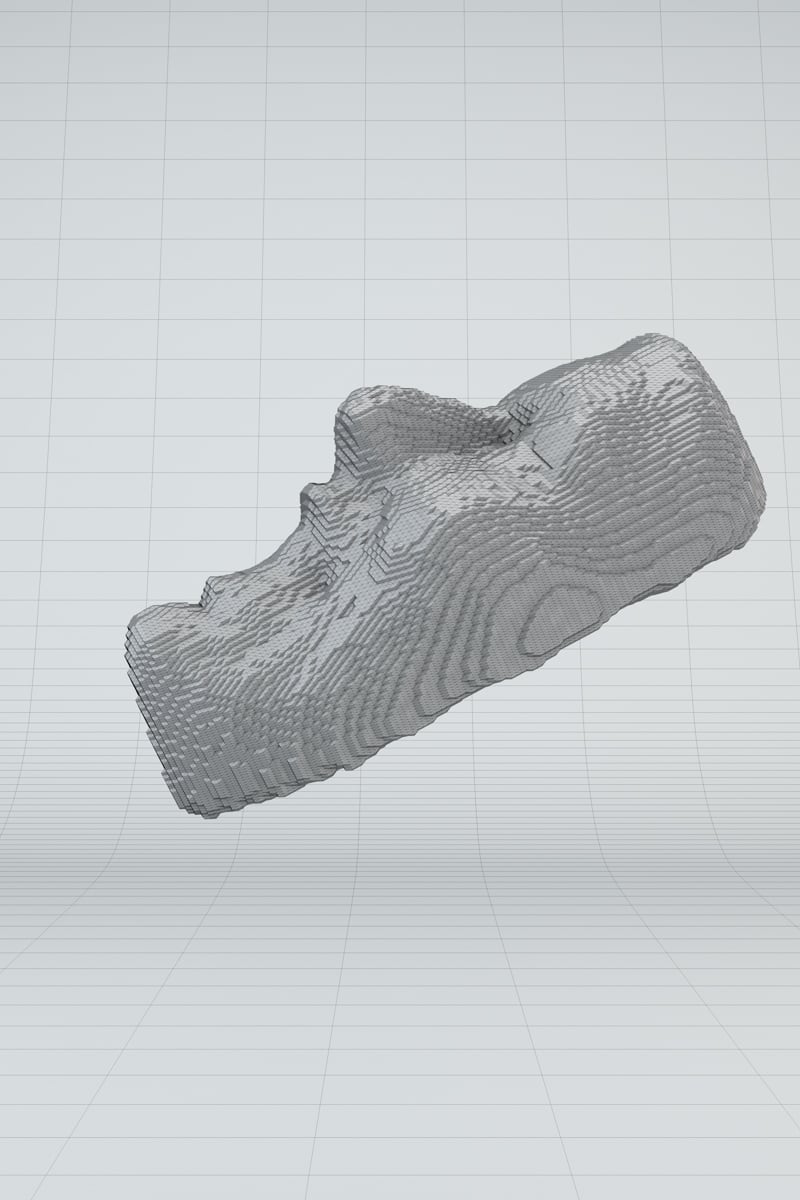

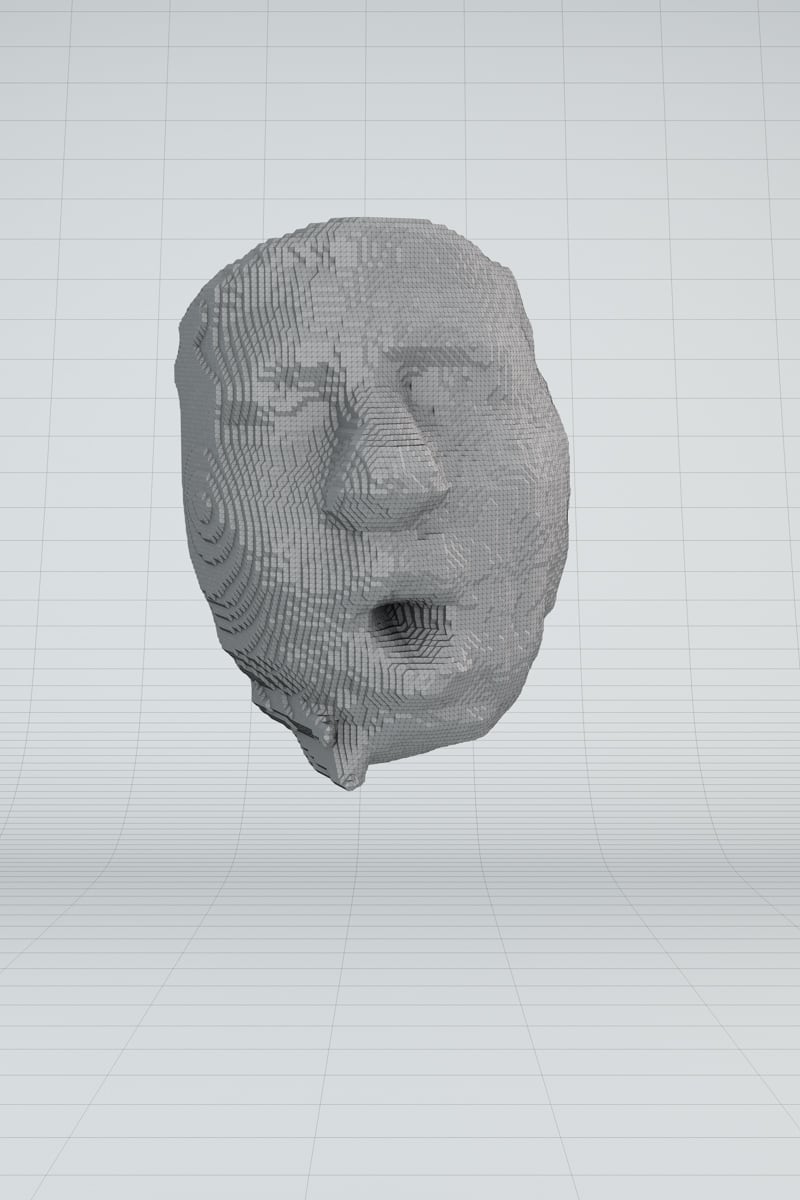

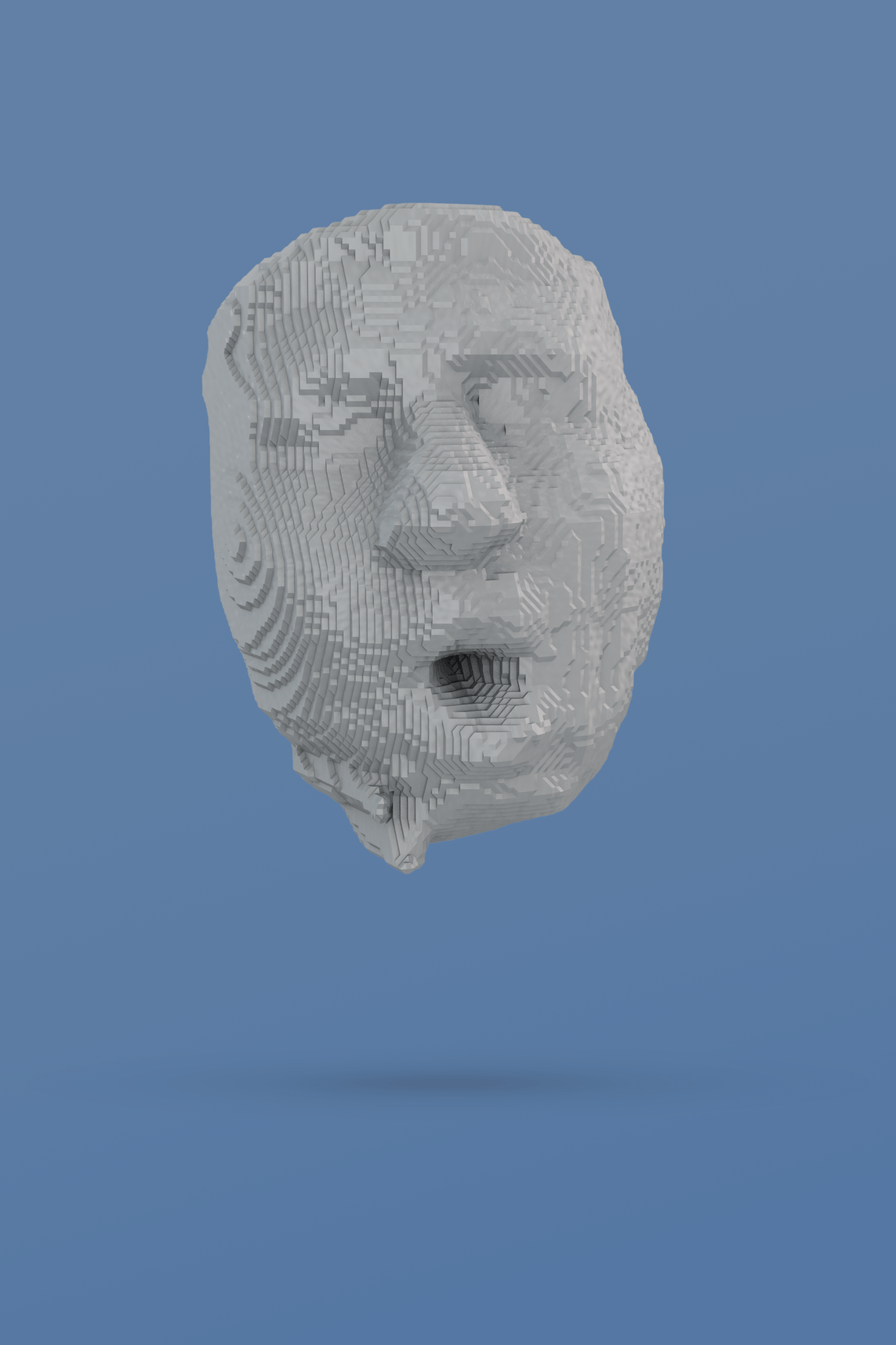

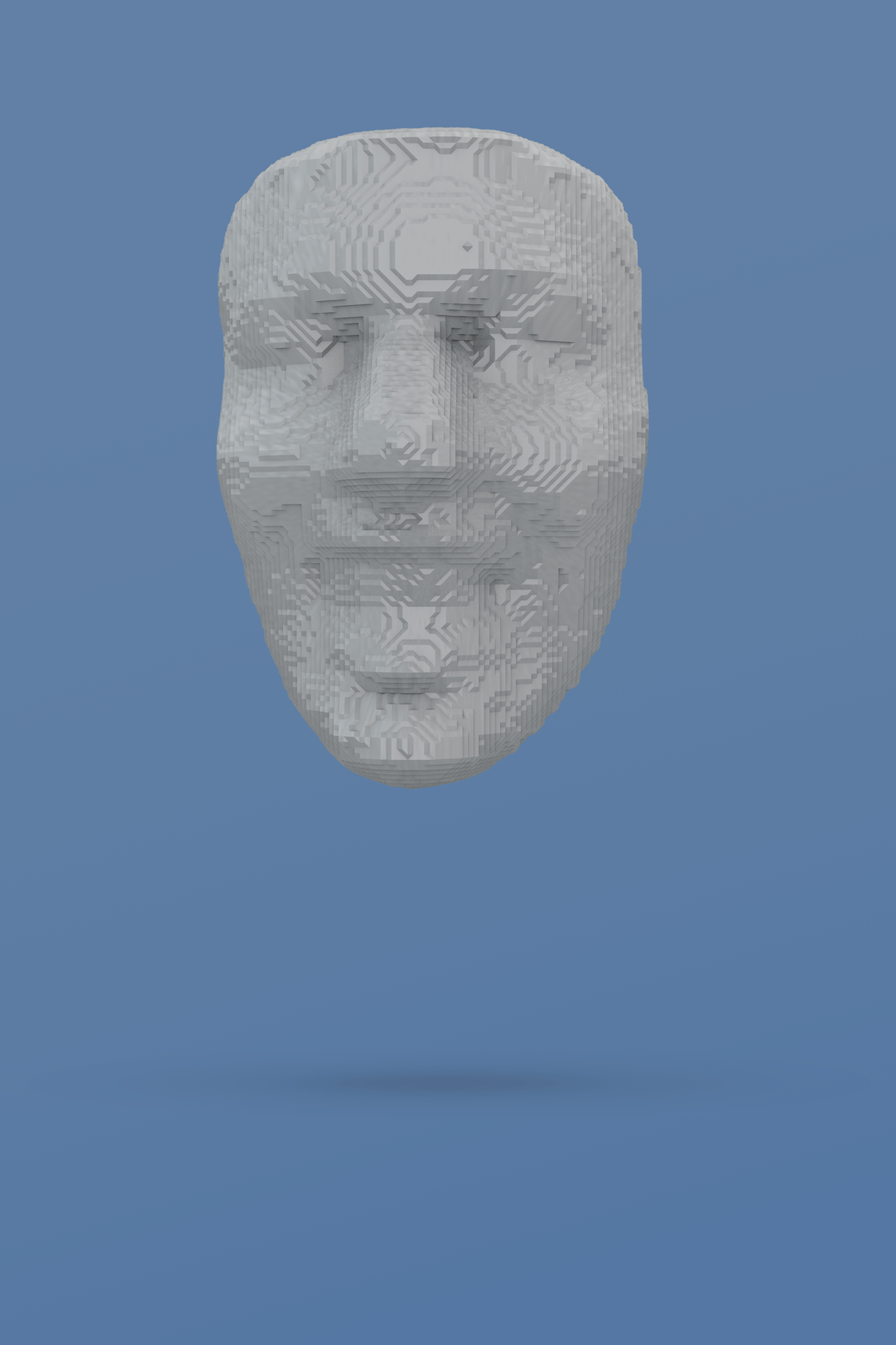

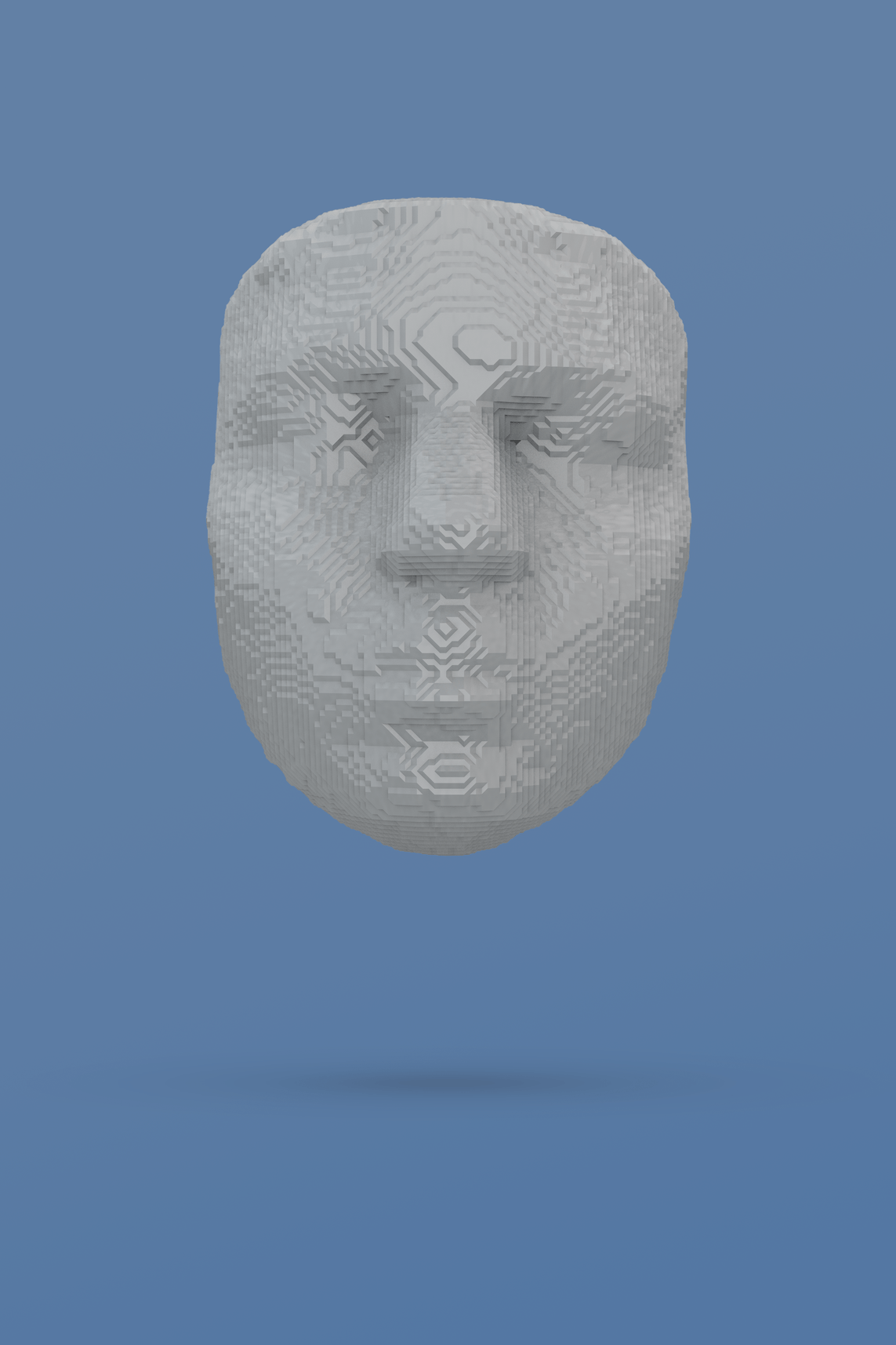

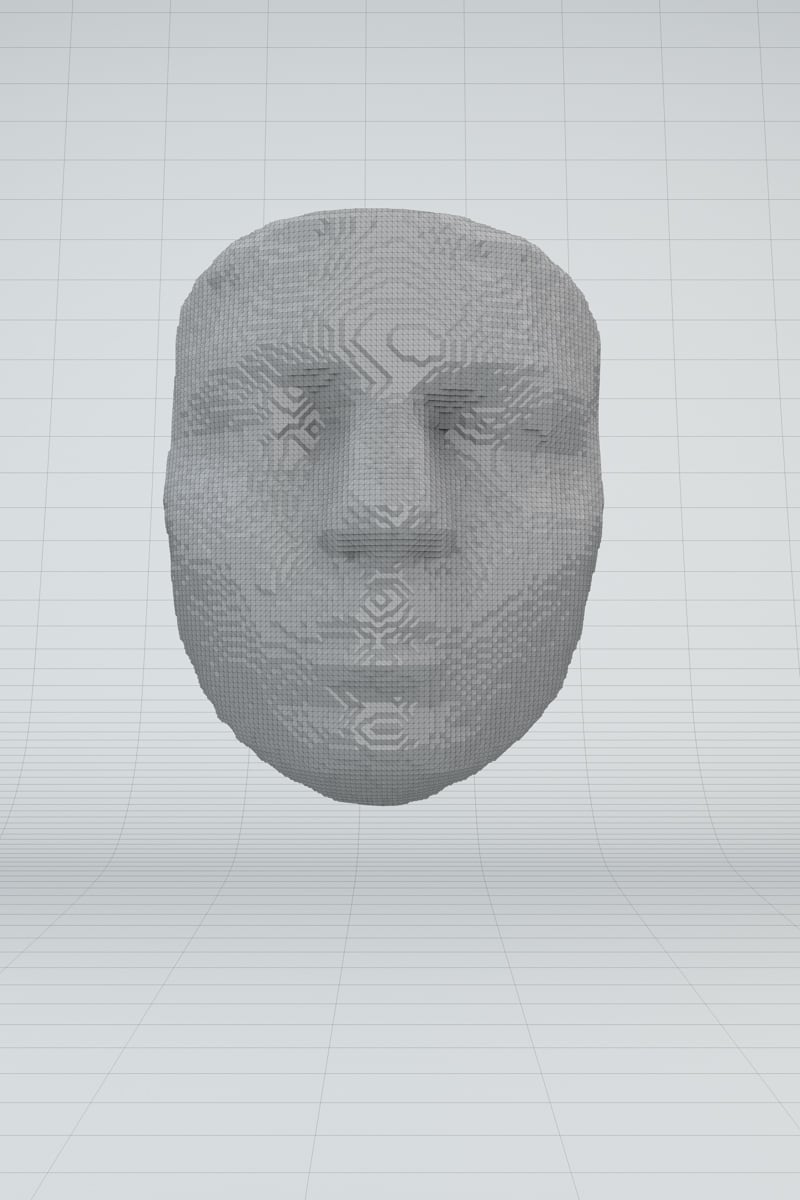

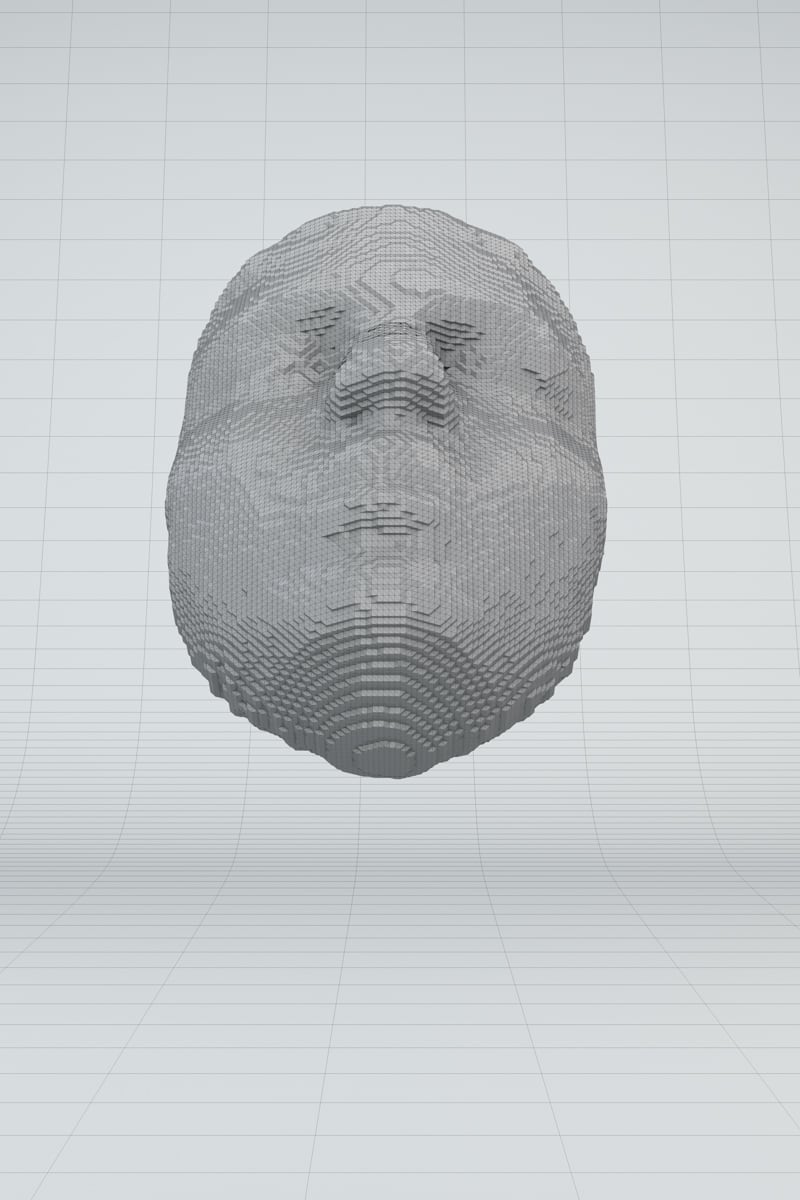

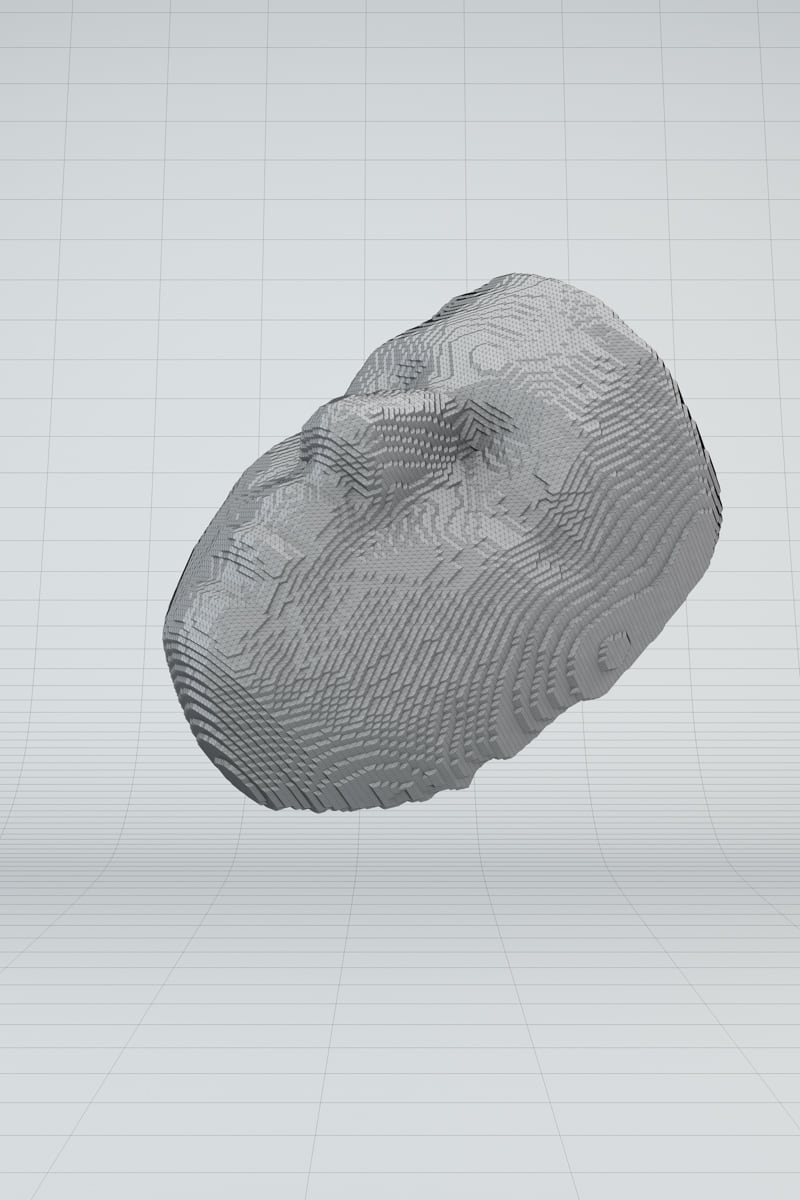

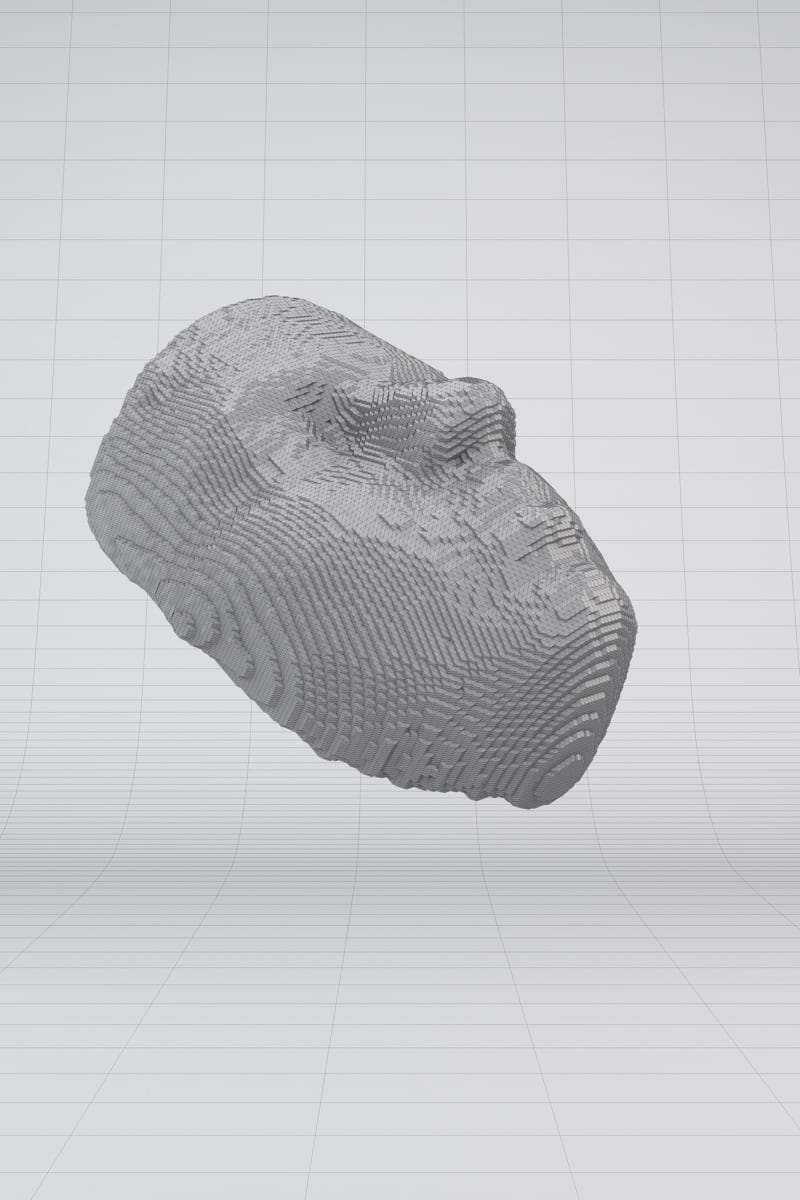

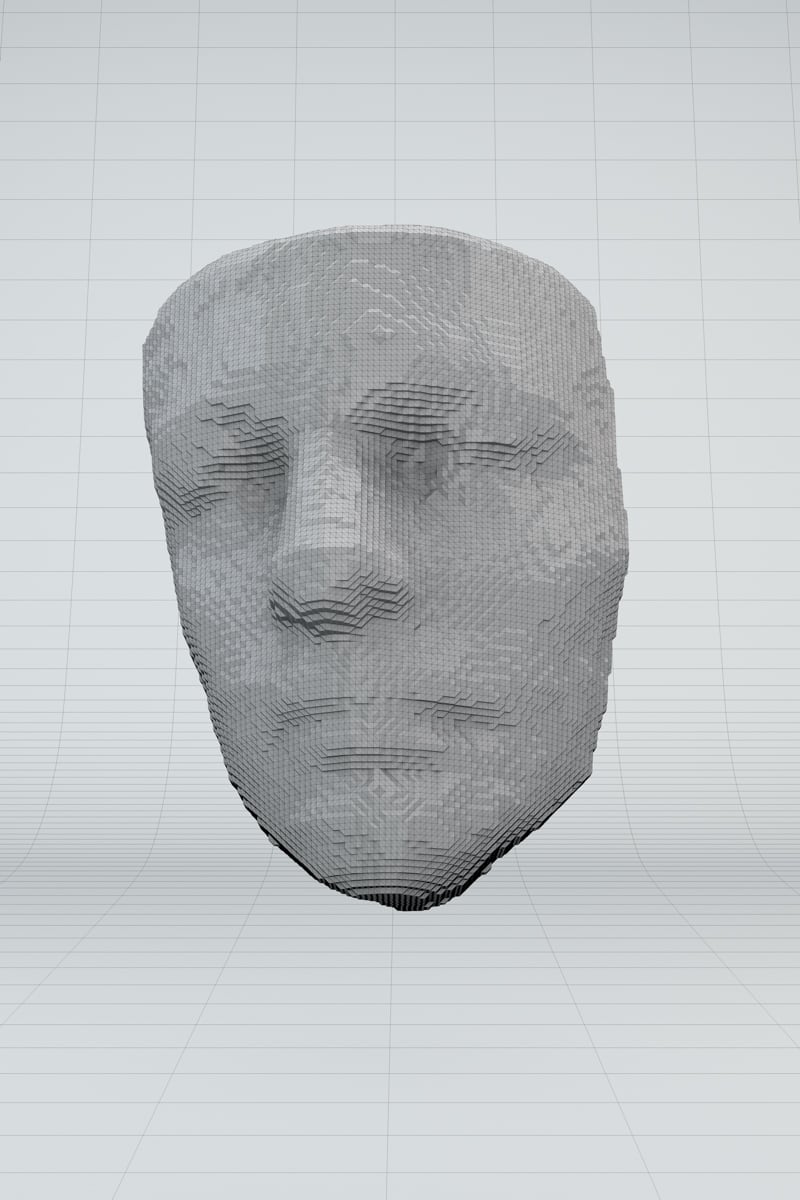

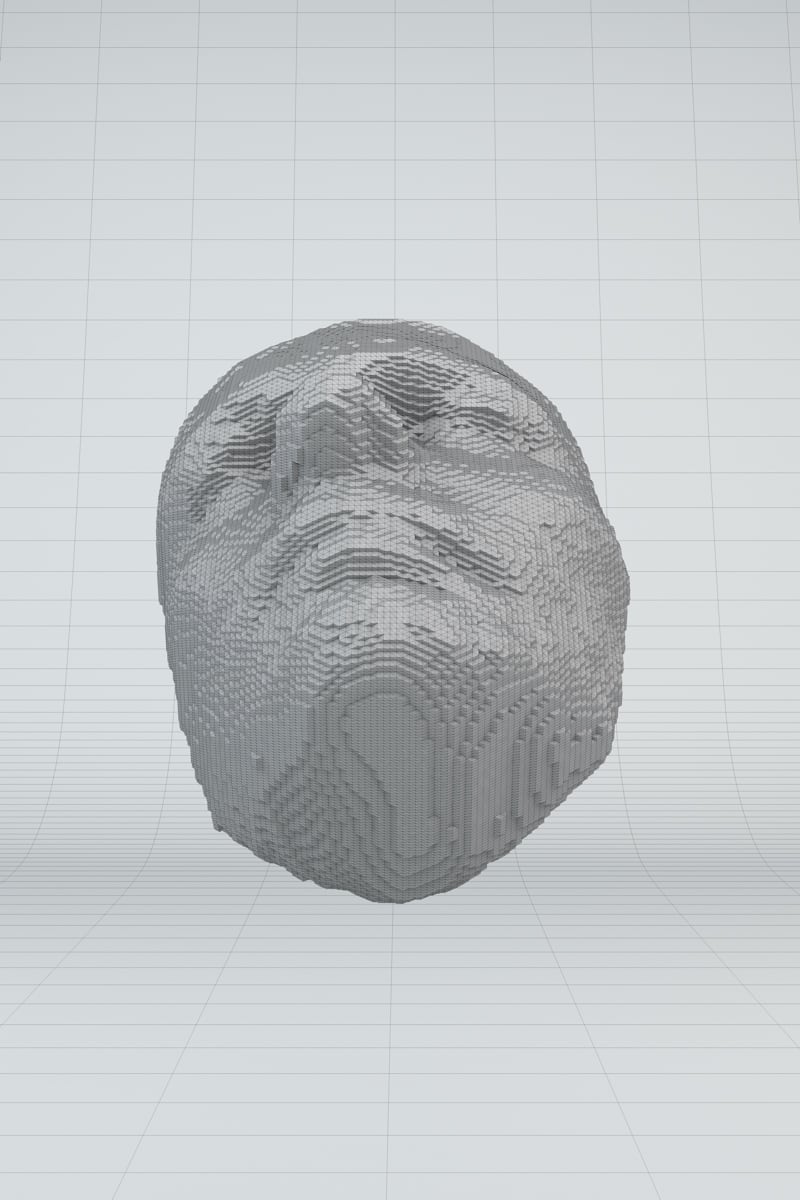

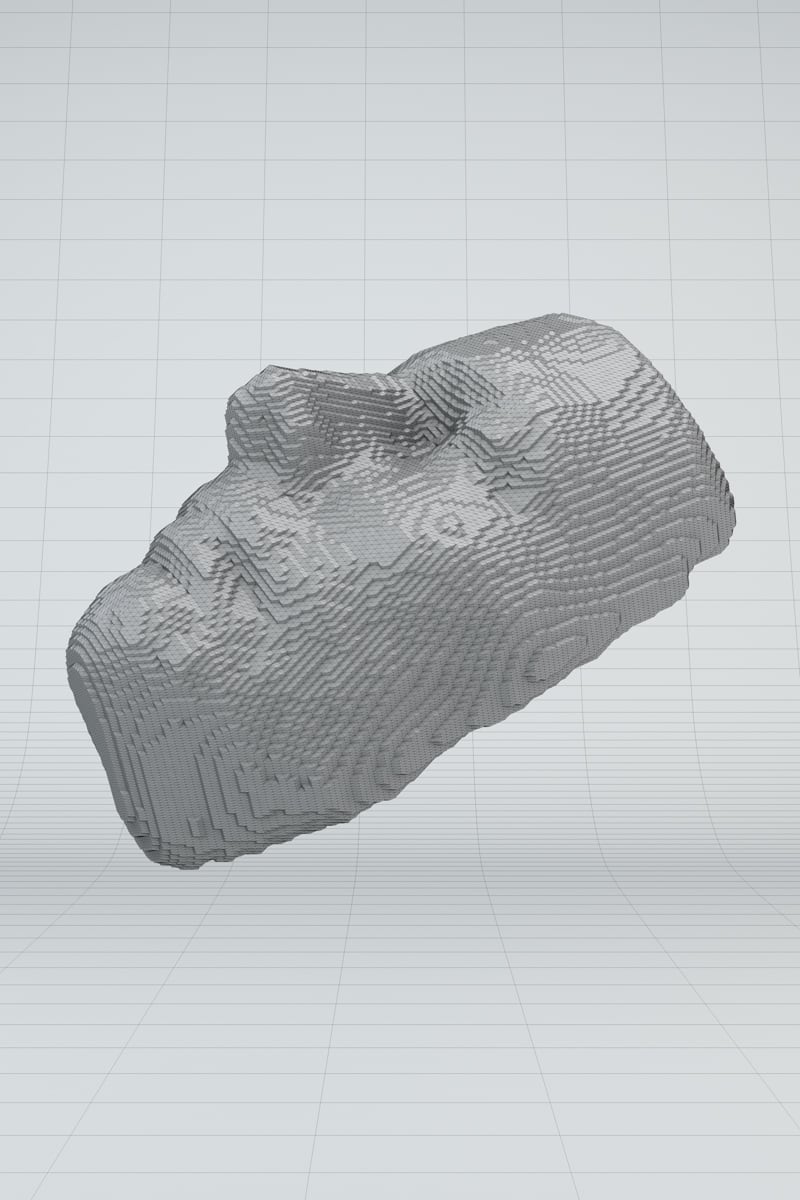

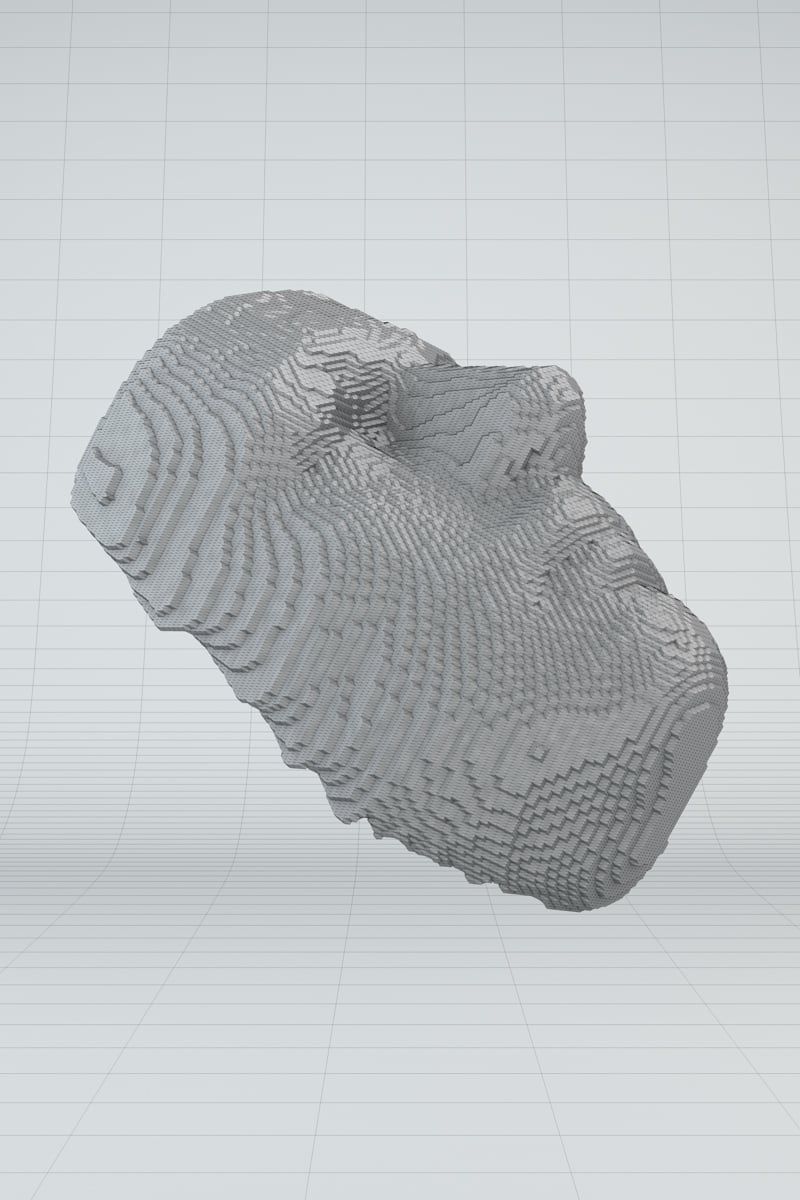

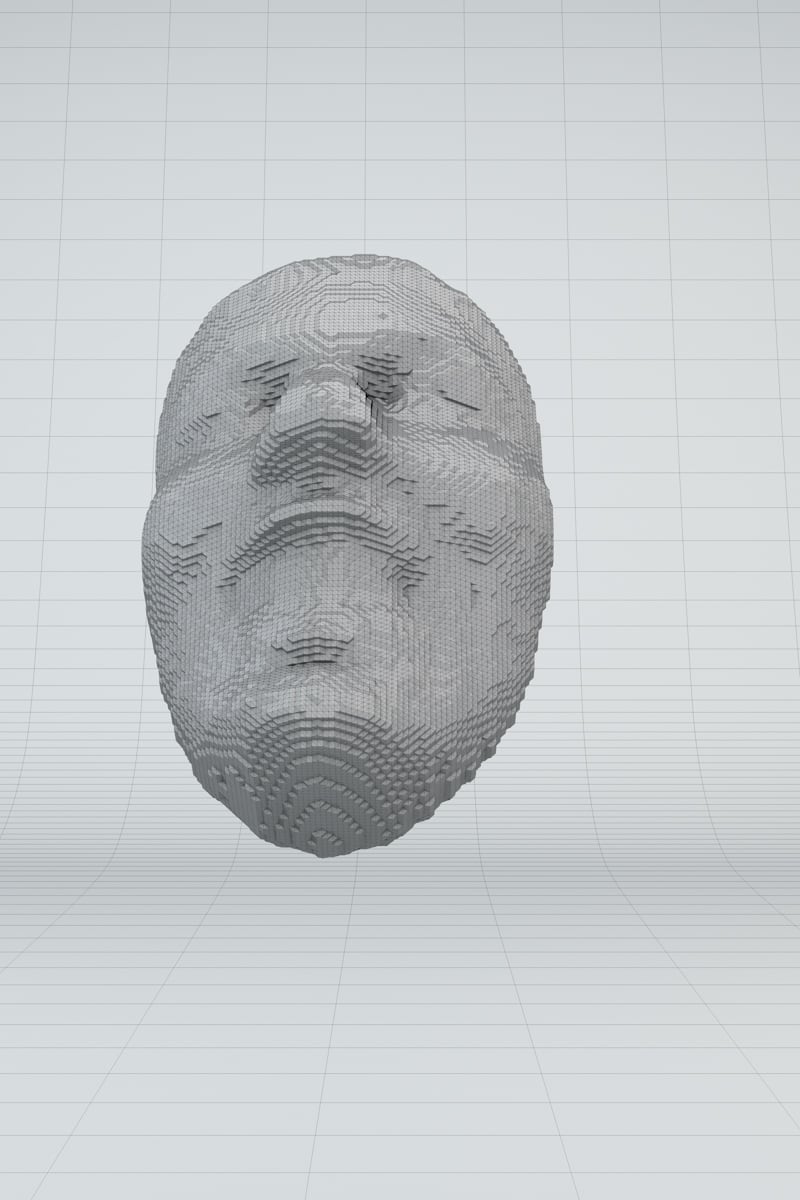

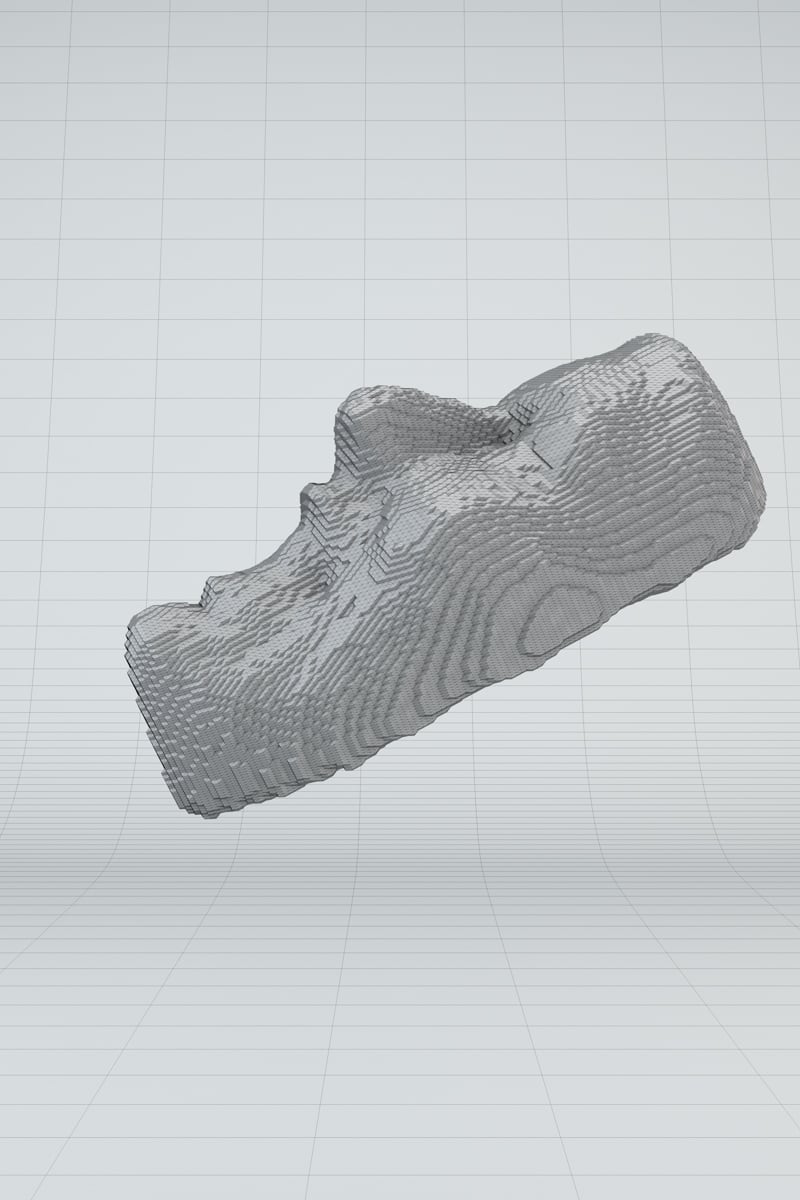

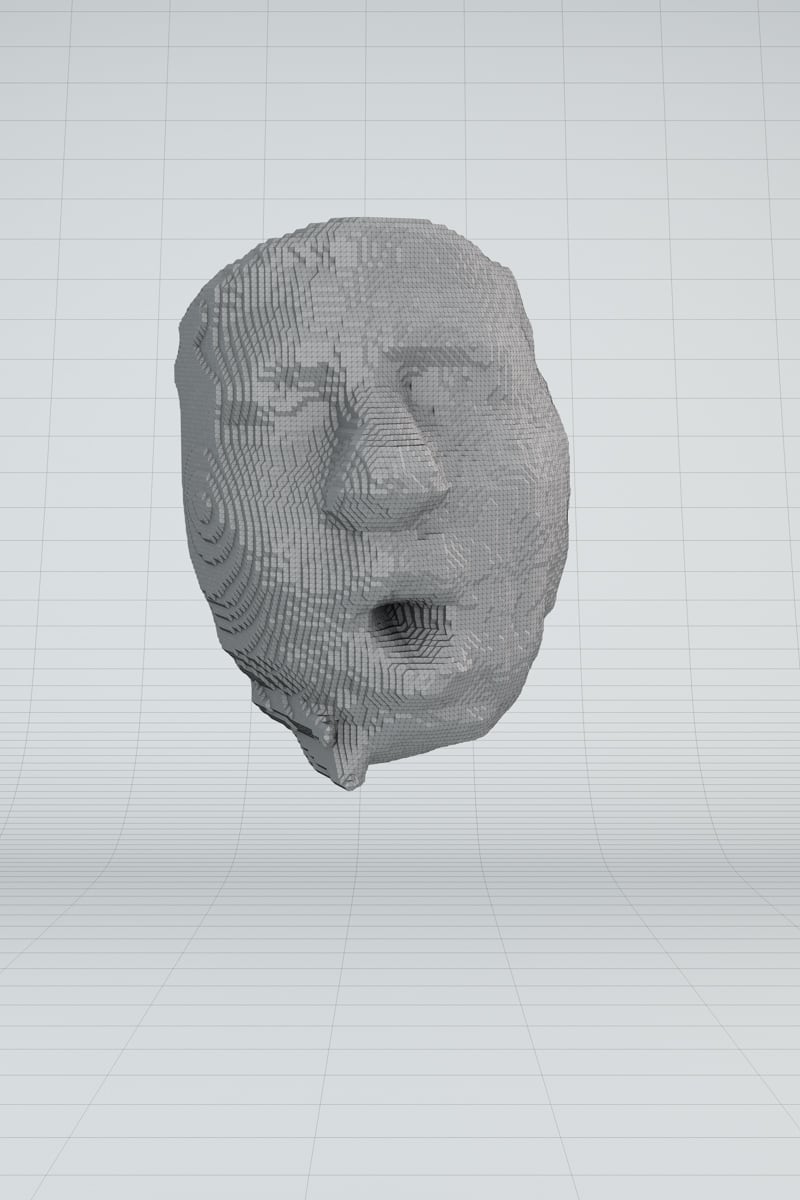

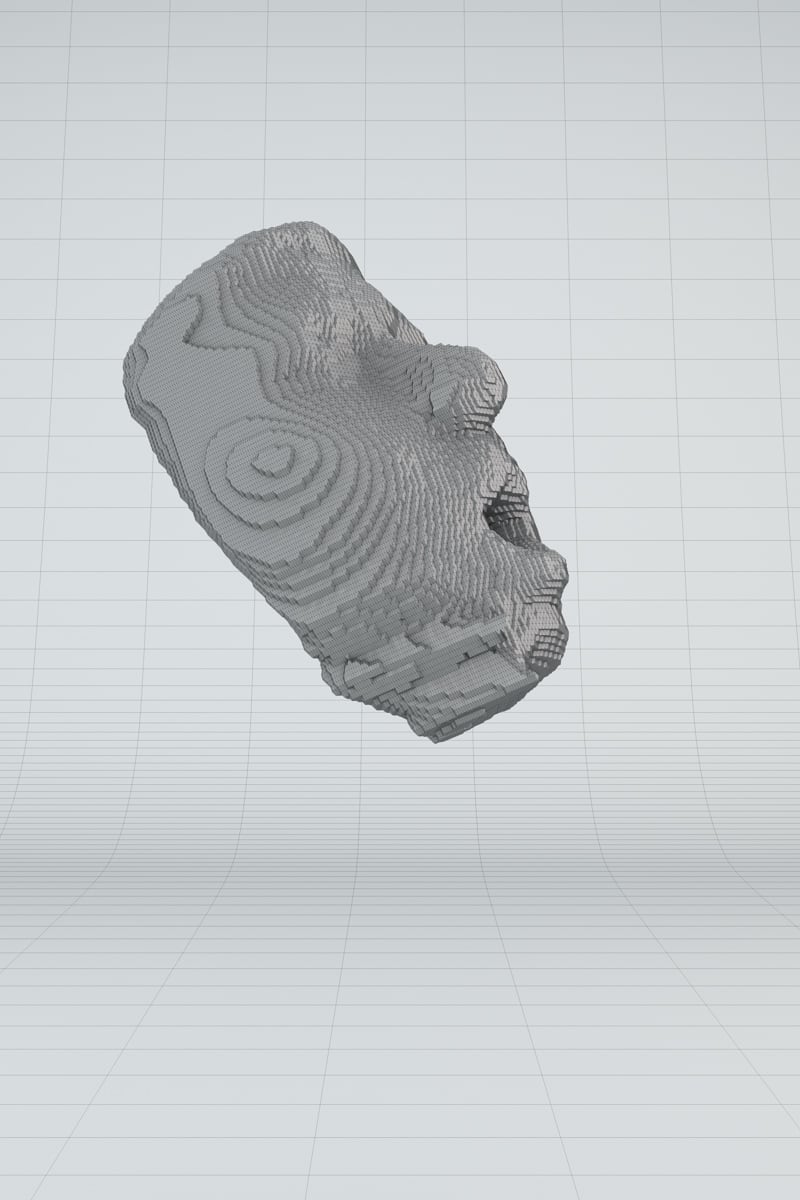

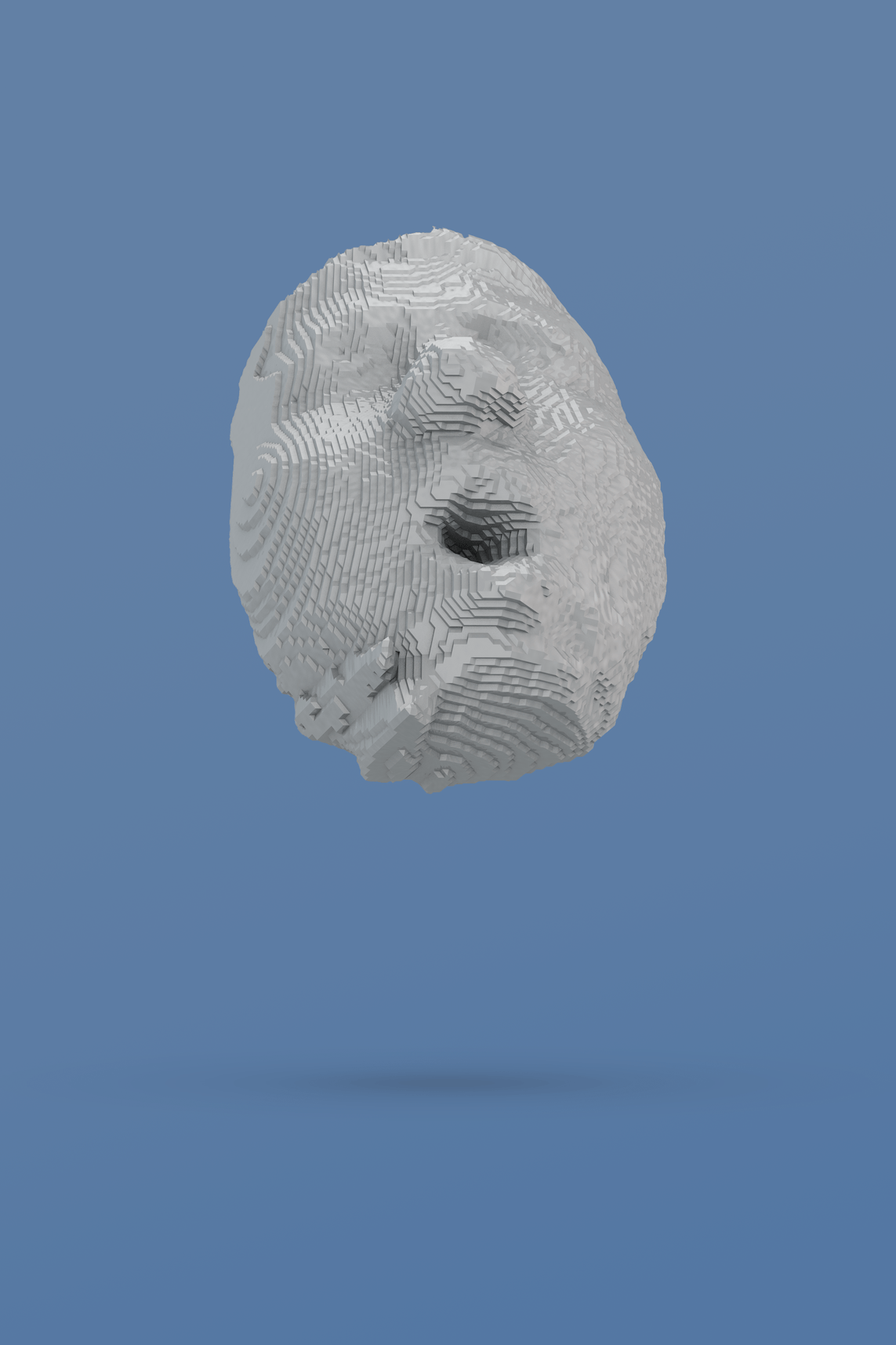

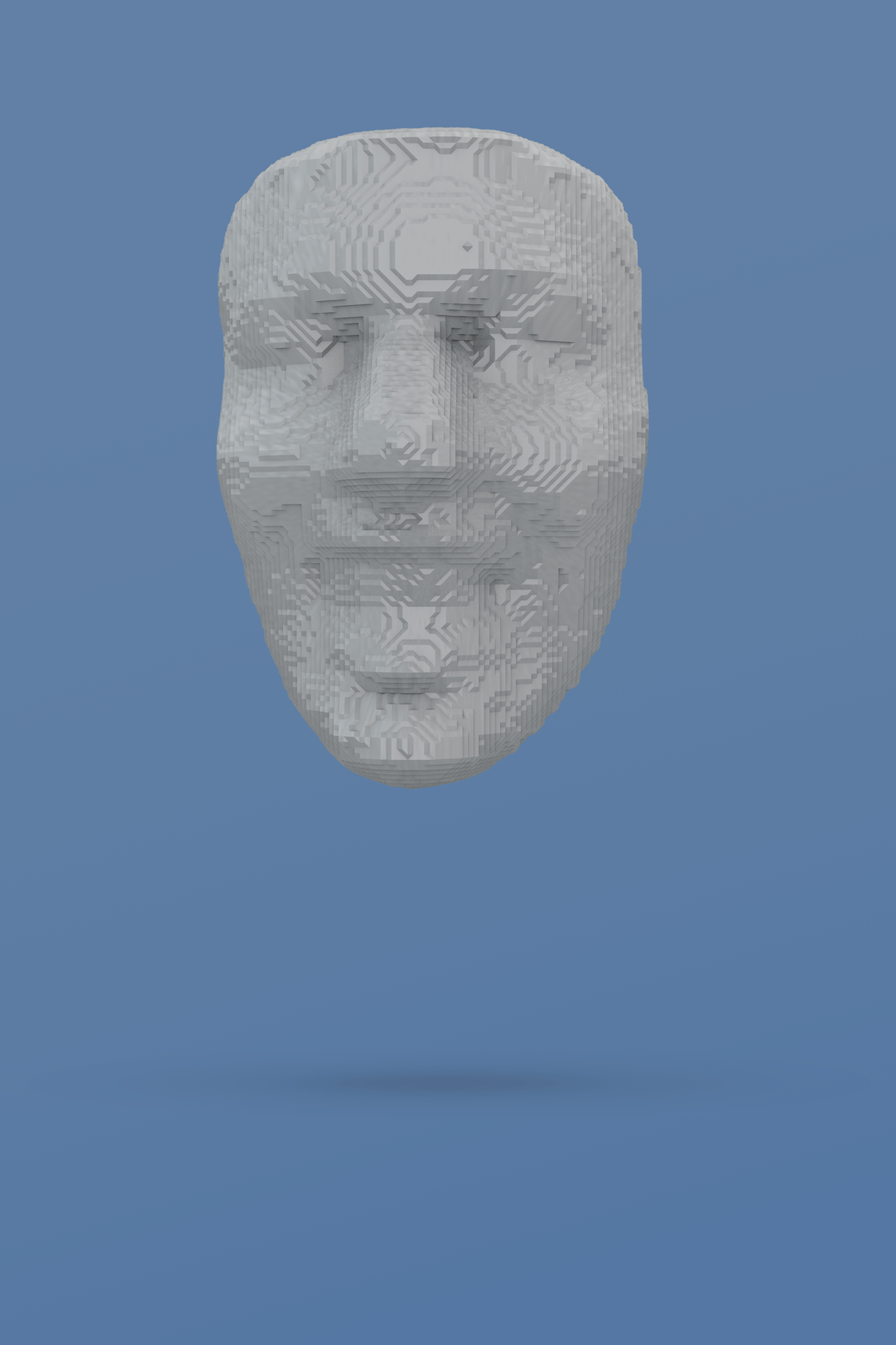

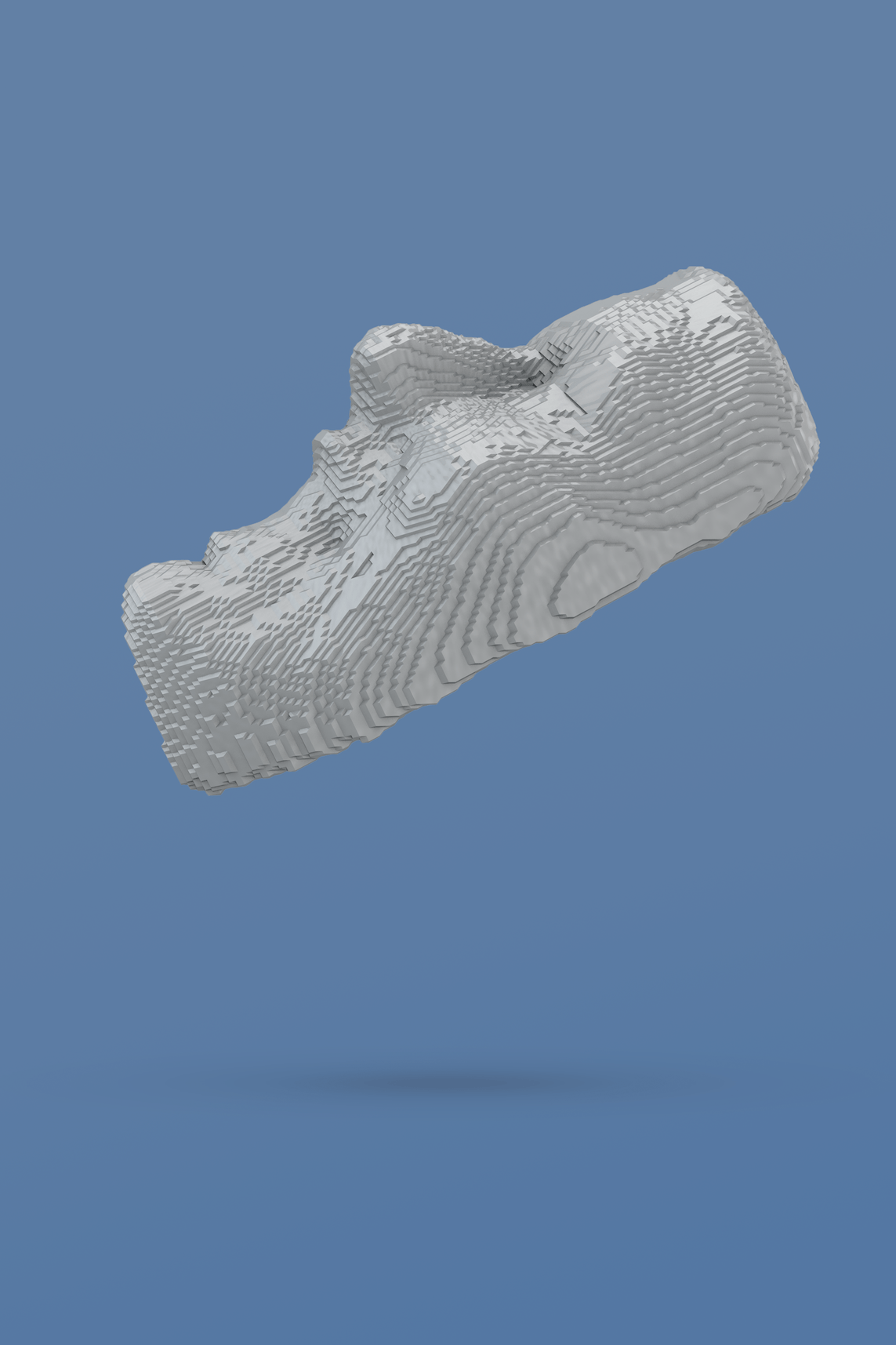

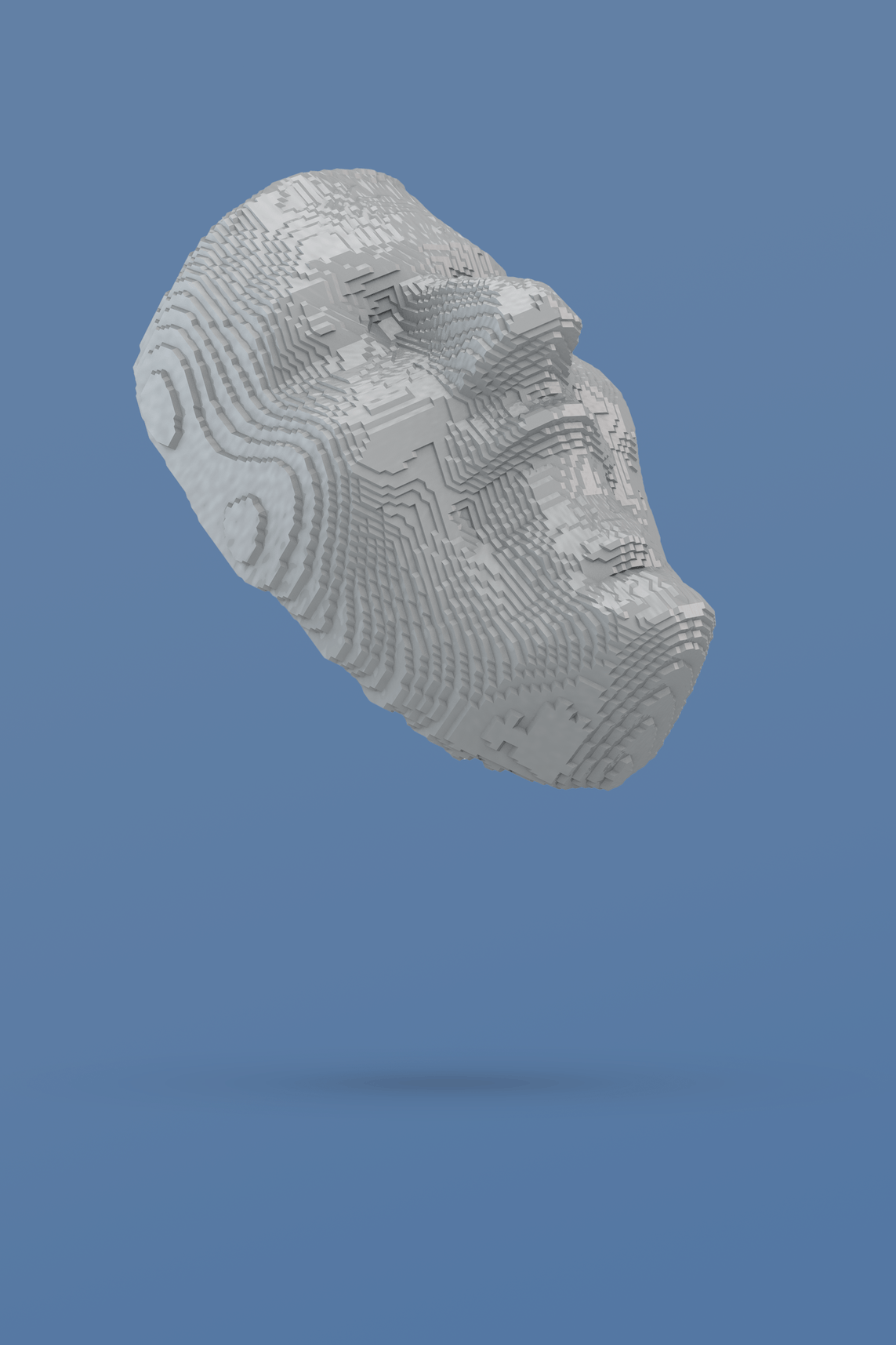

3D Facial Reconstruction using machine learning

3D facial reconstruction has been a fundamental problem in computer vision for decades. Current systems often require multiple facial images of the same subject and must establish correspondences across a variety of facial poses, expressions, and uneven lighting to arrive at a result.

The project "Large Pose 3D Face Reconstruction from a Single Image via Direct Volumetric CNN Regression" addresses many of these limitations by training a Convolutional Neural Network (CNN) on a suitable dataset consisting of 2D facial images and 3D facial models and scans. The CNN works with only a single 2D facial image as input, requires neither precise alignment nor dense correspondence between images, and can reconstruct the entire 3D facial geometry, including the non-visible parts.
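To make the idea of a volumetric output more concrete, here is a small illustrative sketch in Python. It assumes the network's output can be treated as a 3D grid of occupancy values between 0 and 1. That grid layout (`volume[z][y][x]`), the 0.5 threshold, and the function name `front_surface_depth` are assumptions made for this illustration and are not taken from the project. For each pixel column, the sketch reads off the first layer that counts as "inside the face", which yields a simple depth map.

```python
from typing import List, Optional

# Assumed layout for illustration: volume[z][y][x], values in the range 0..1.
Volume = List[List[List[float]]]


def front_surface_depth(
    volume: Volume, threshold: float = 0.5
) -> List[List[Optional[int]]]:
    """Return, per (y, x) column, the first z layer at or above the threshold.

    Columns that never reach the threshold are marked with None.
    """
    layers = len(volume)
    height = len(volume[0])
    width = len(volume[0][0])
    depth_map: List[List[Optional[int]]] = [
        [None] * width for _ in range(height)
    ]
    for y in range(height):
        for x in range(width):
            for z in range(layers):
                if volume[z][y][x] >= threshold:
                    depth_map[y][x] = z  # first occupied layer from the front
                    break
    return depth_map
```

A depth map like this only describes the visible front of the volume. Recovering the full geometry, including hidden parts, would more likely use a surface extraction step over the whole grid, for example an isosurface algorithm such as marching cubes.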

Frame 803/1435

Neutral, Female, 19 yrs.

Frame 563/1075

Sad, Male, 26 yrs.

Frame 1811/2155

Happy, Male, 34 yrs.

Frame 09/95

Surprise, Female, 48 yrs.

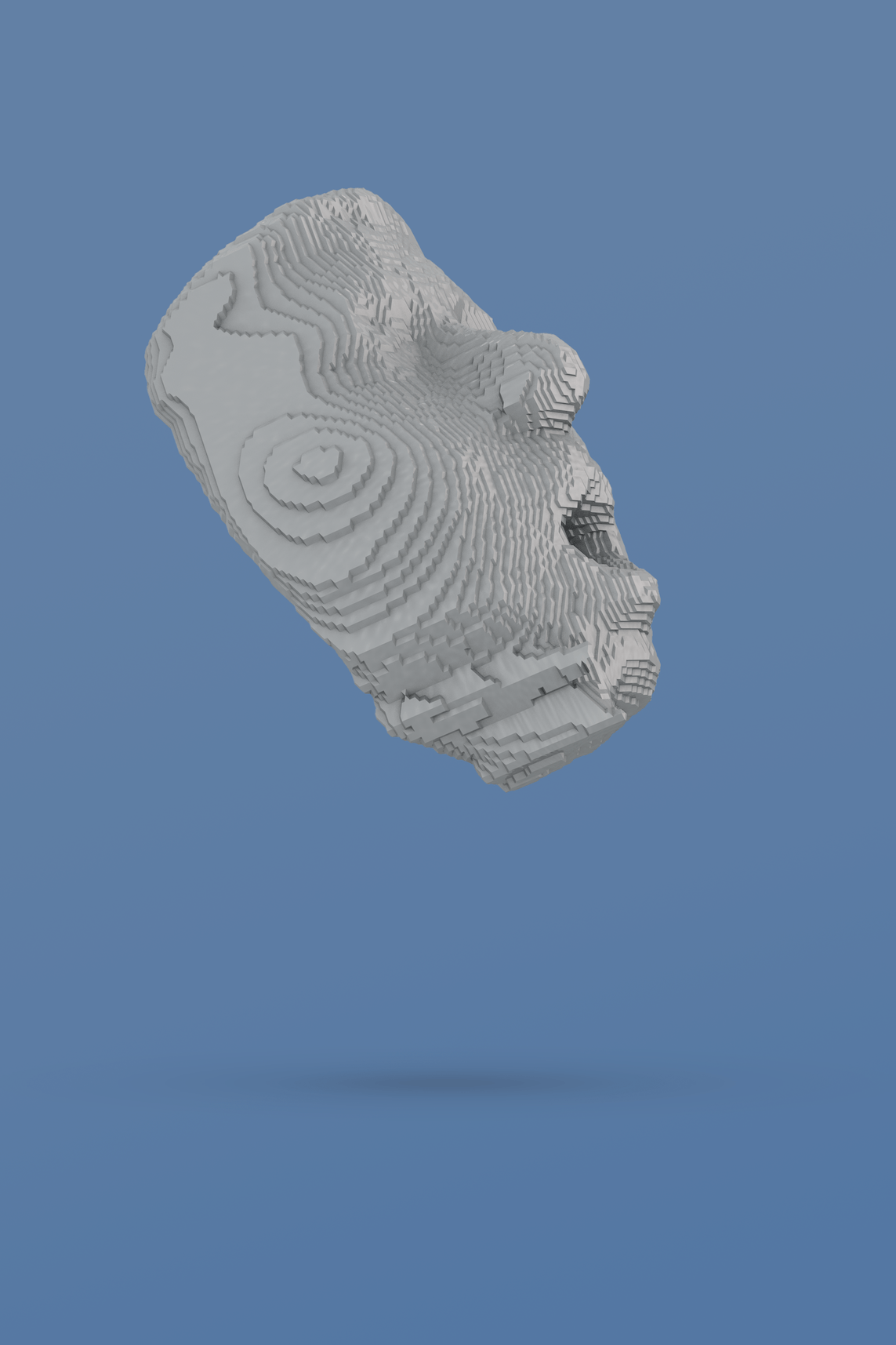

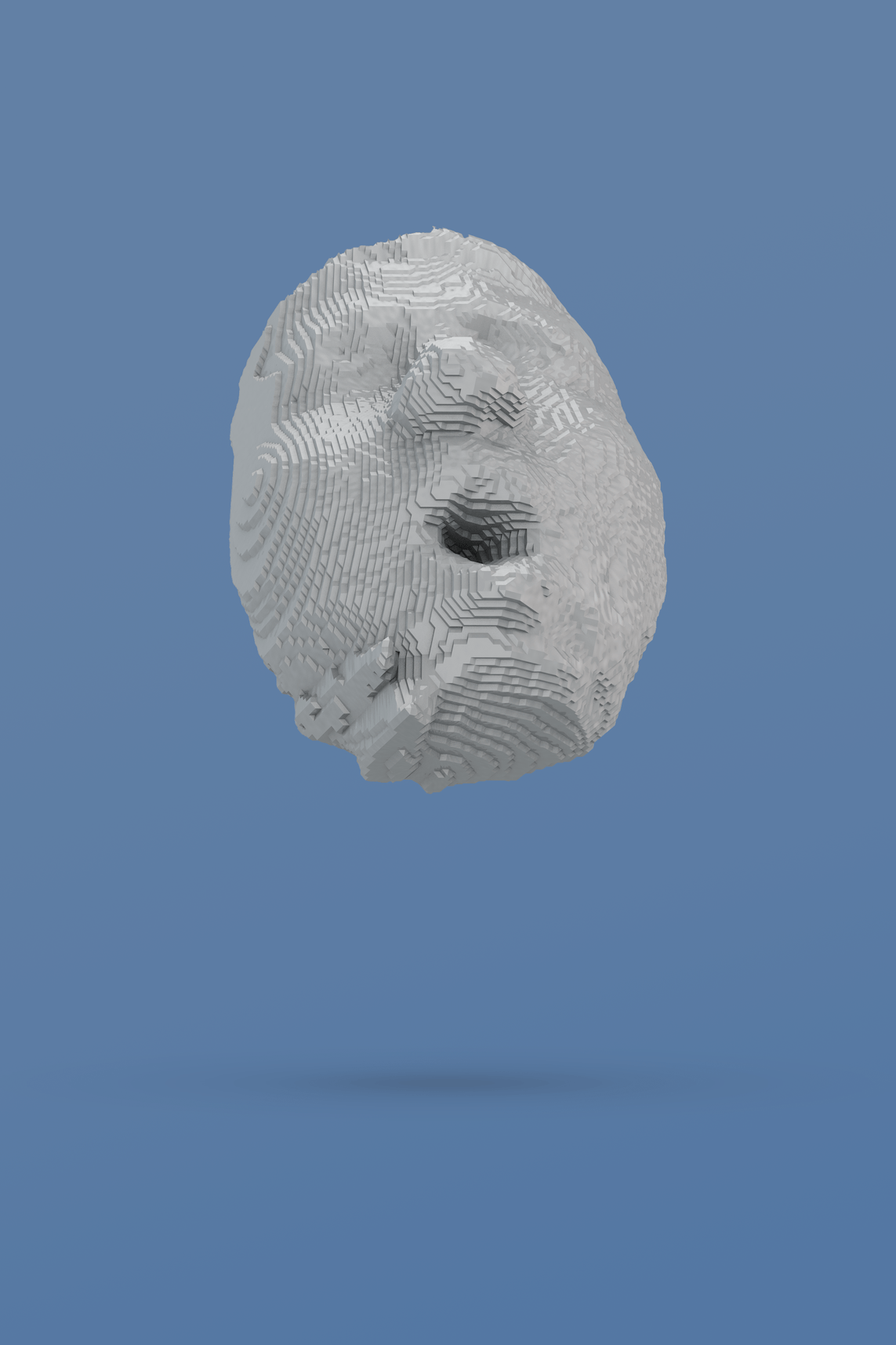

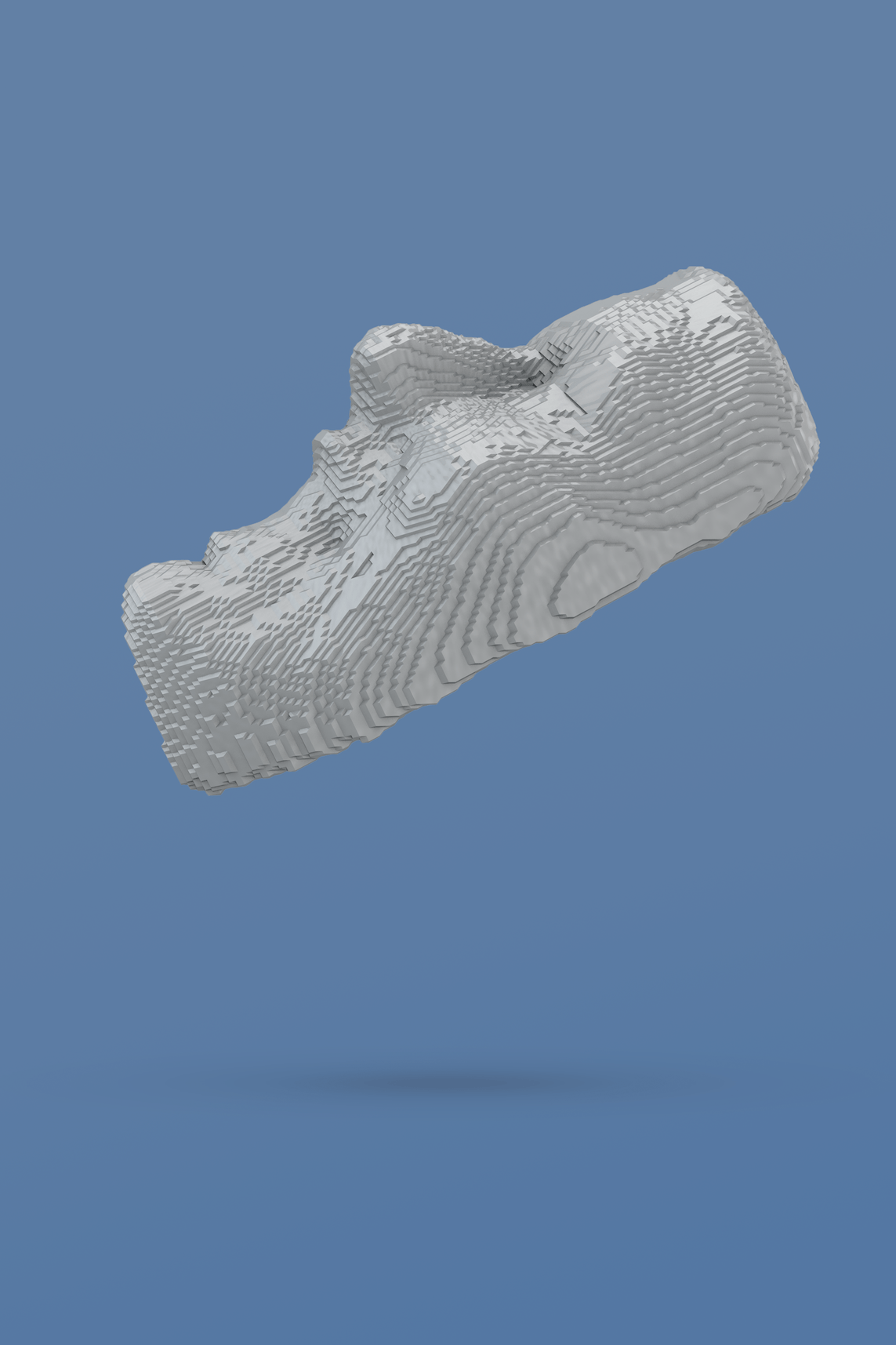

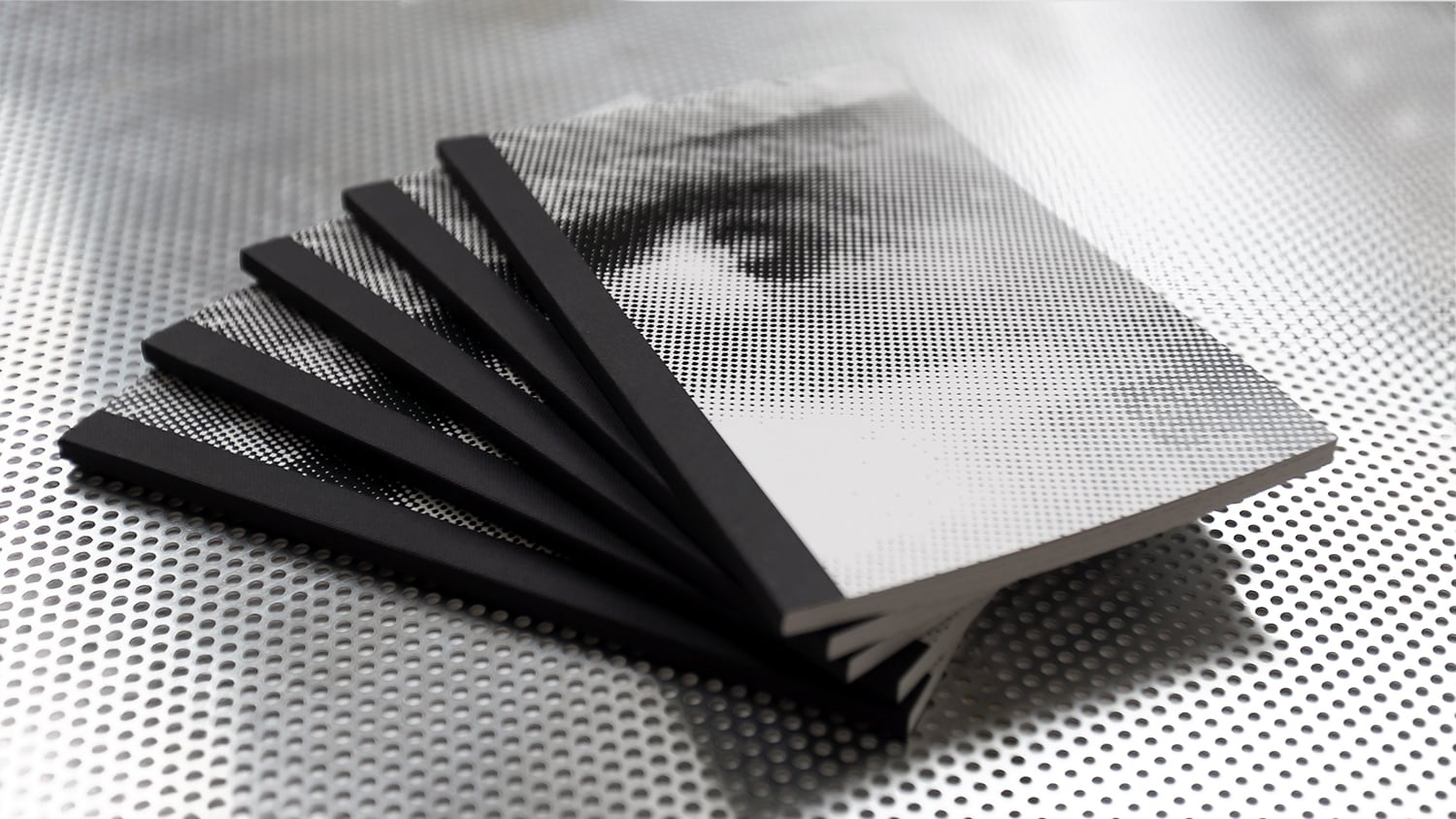

From Digital to Analog:

The Medium is the Message

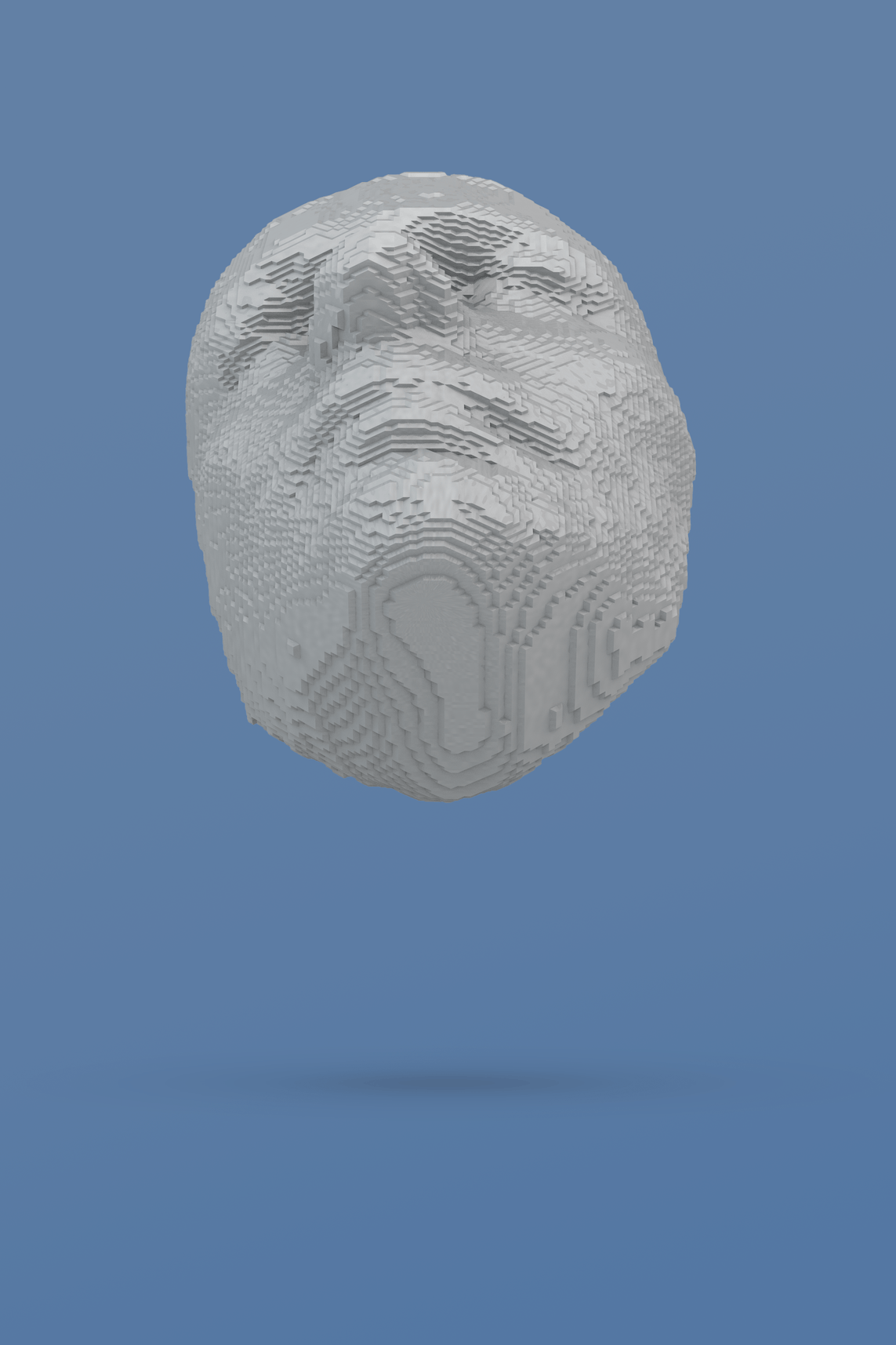

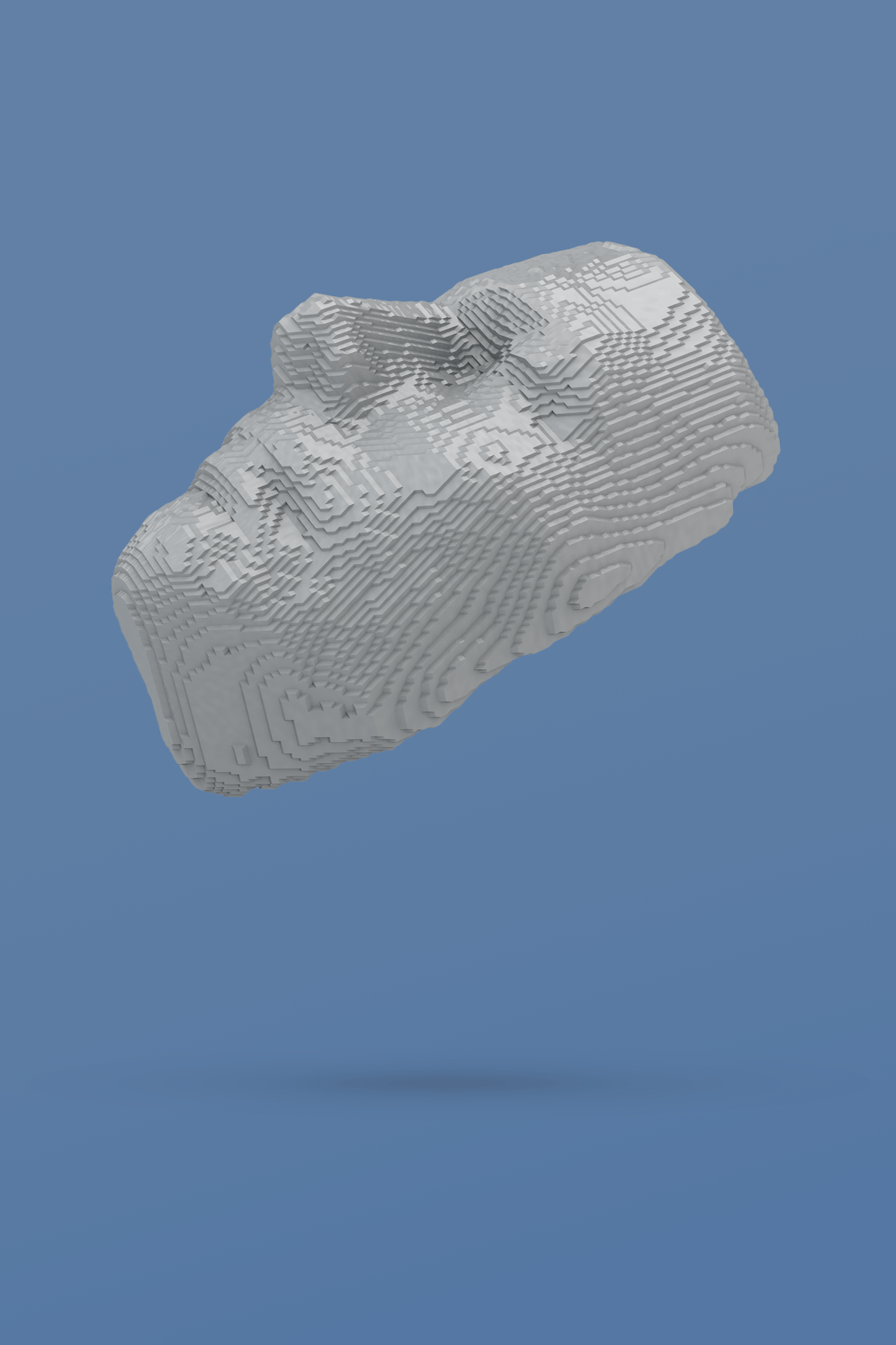

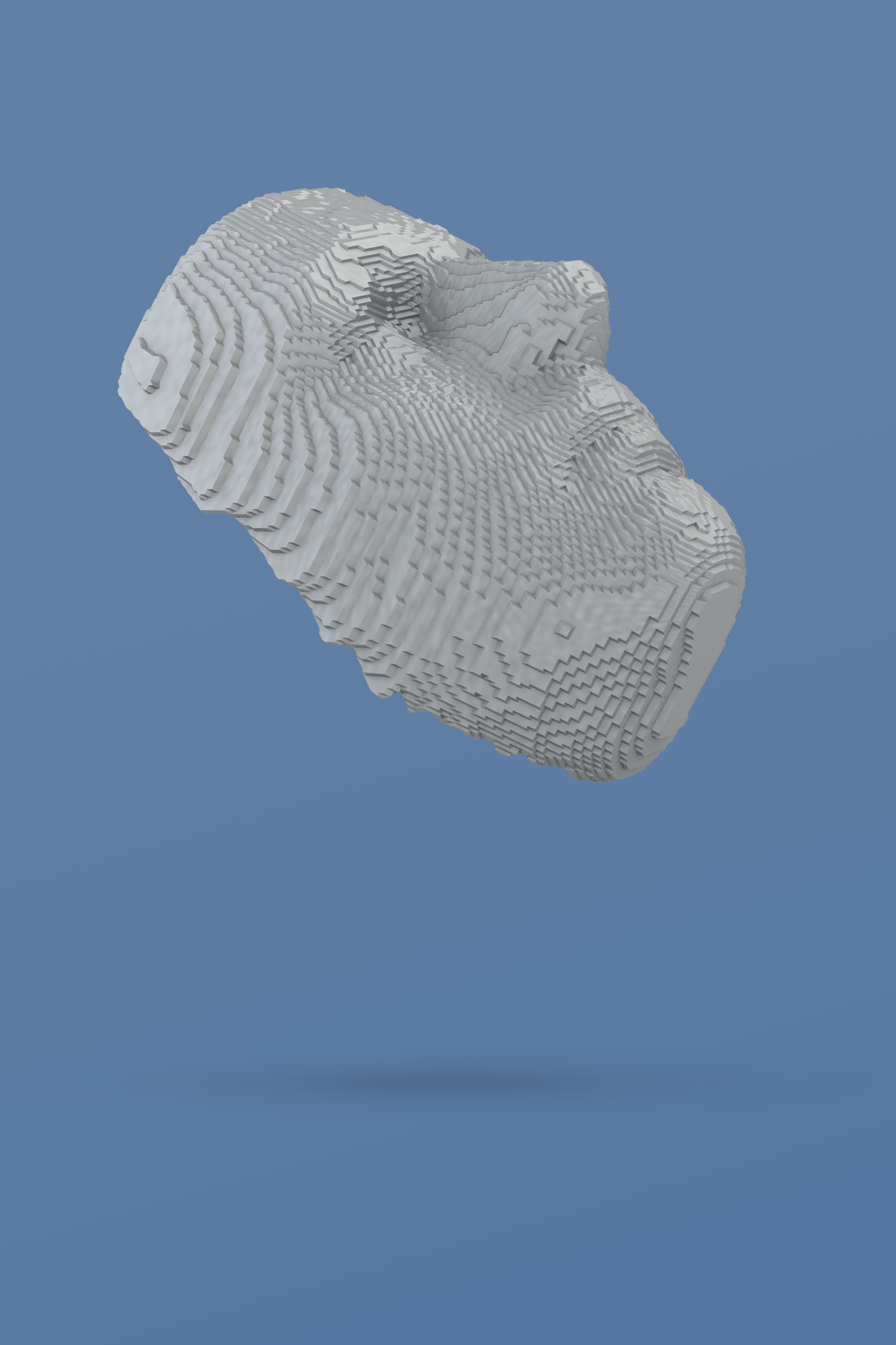

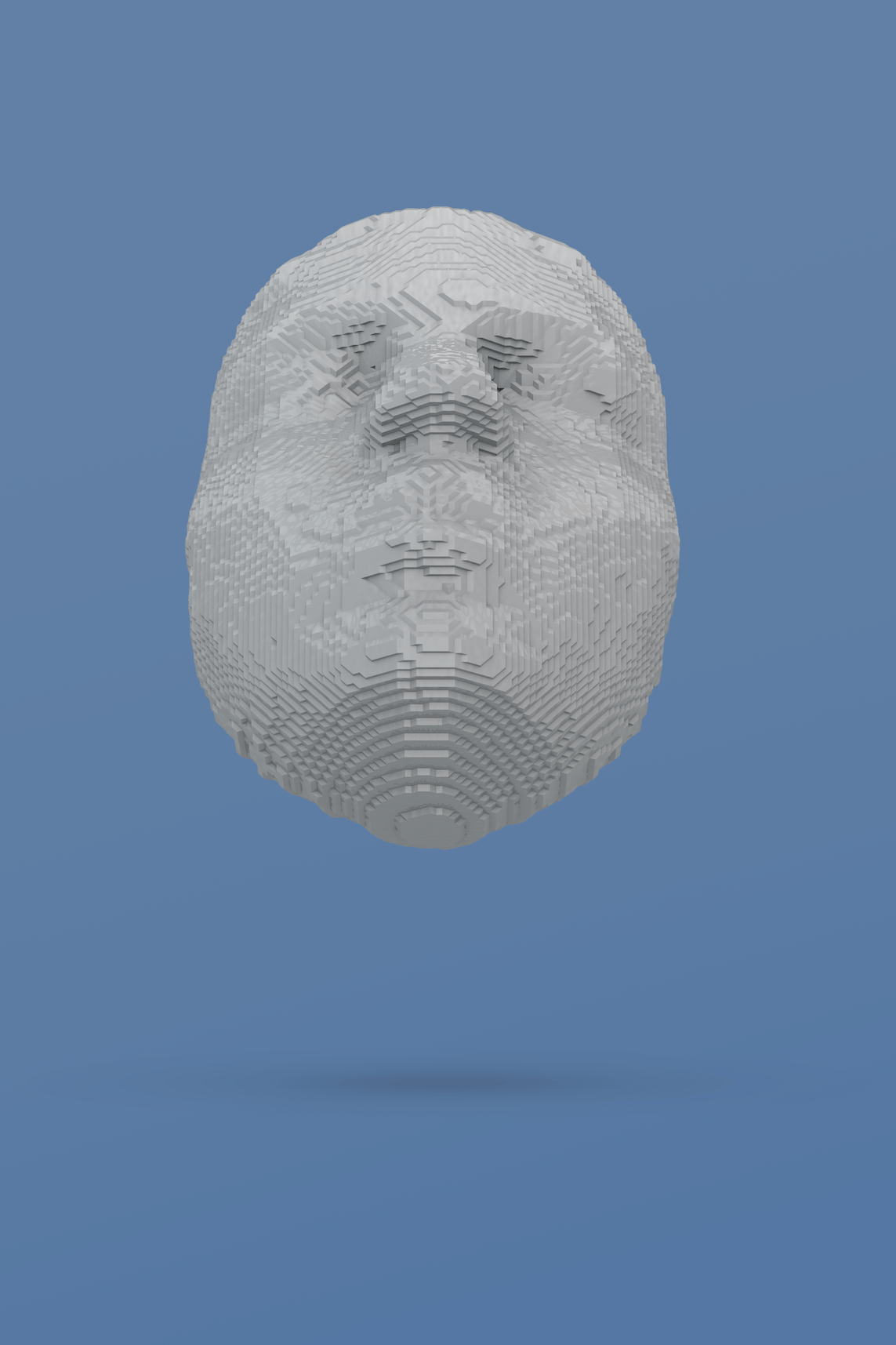

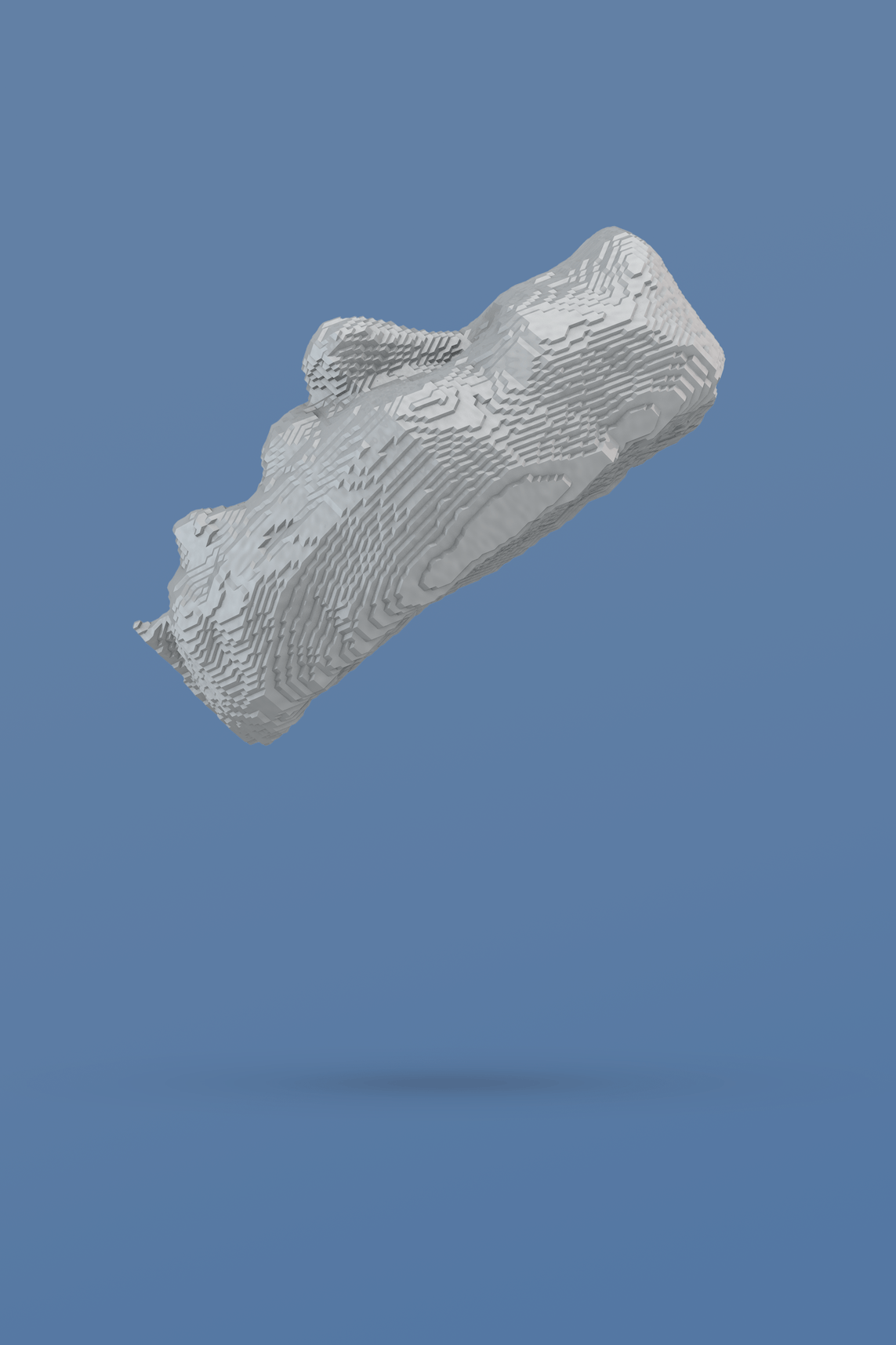

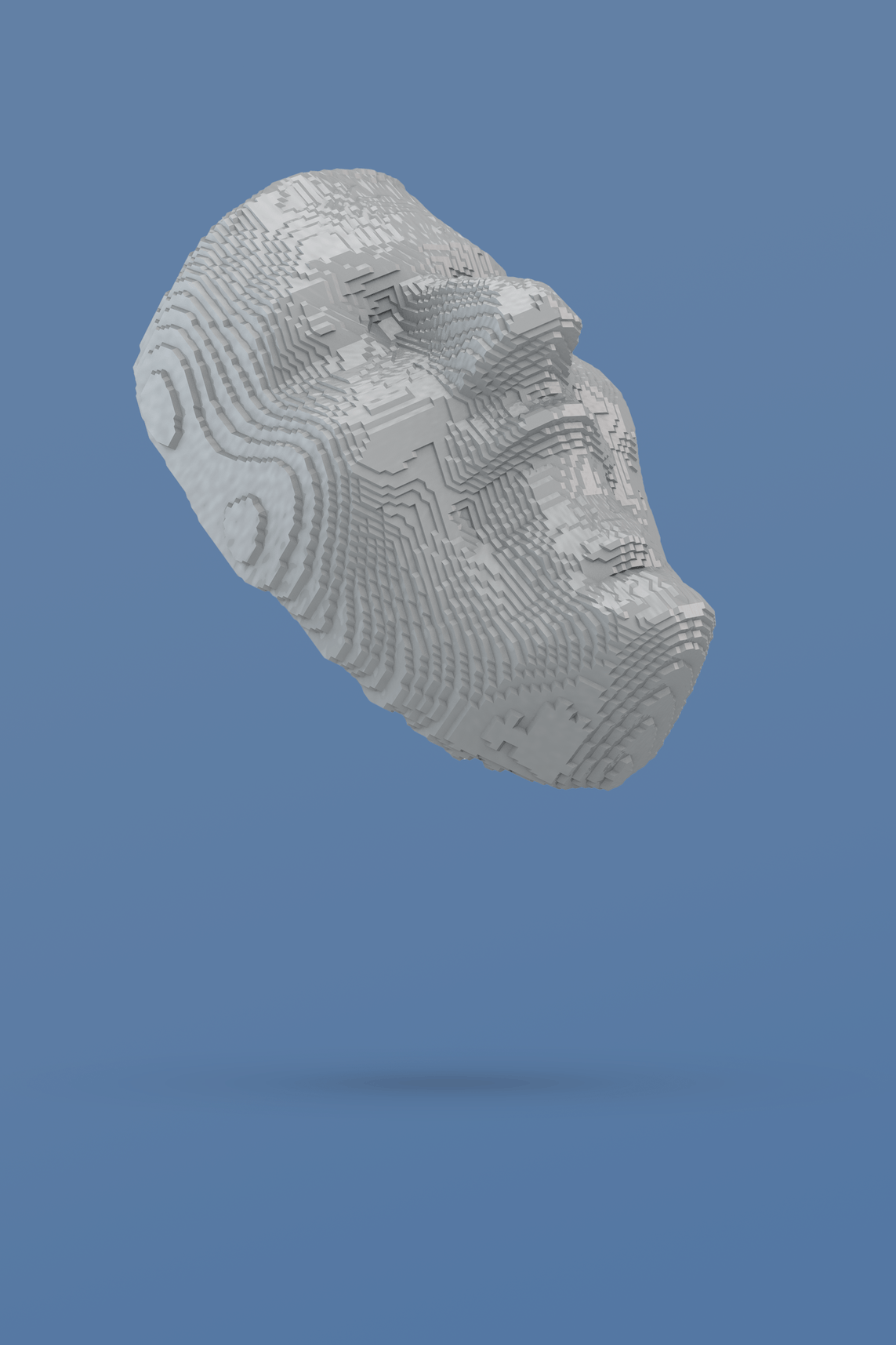

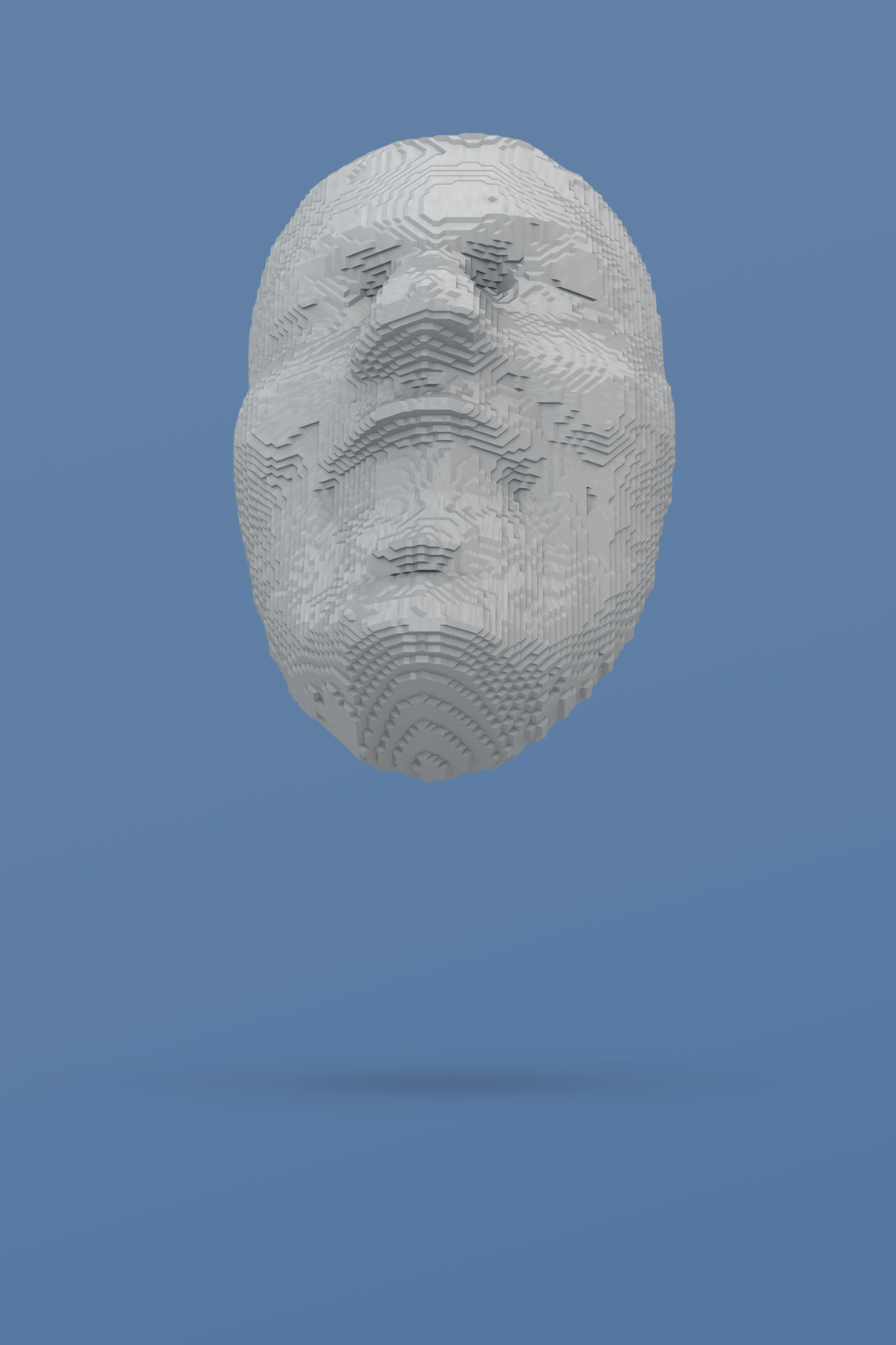

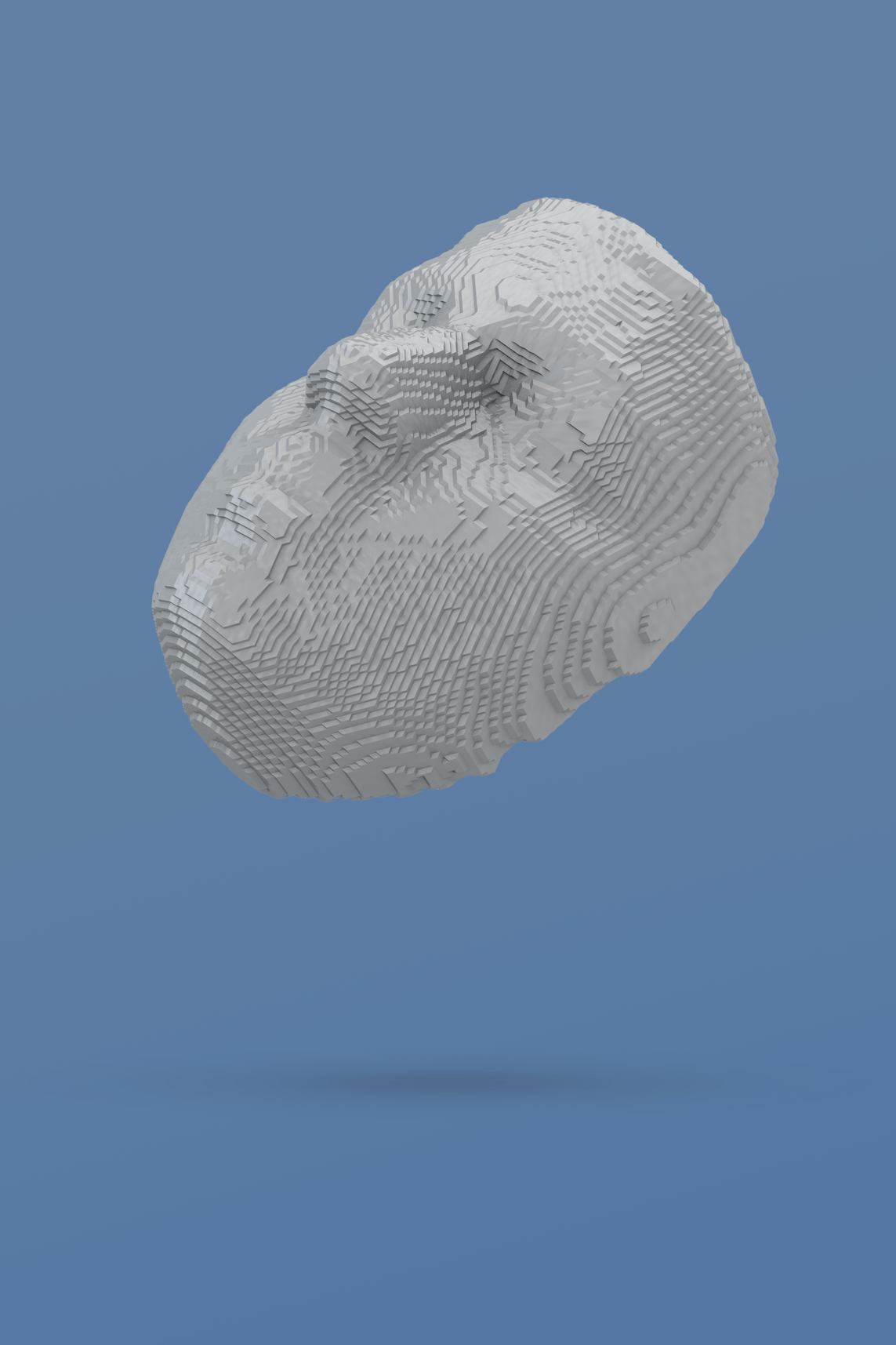

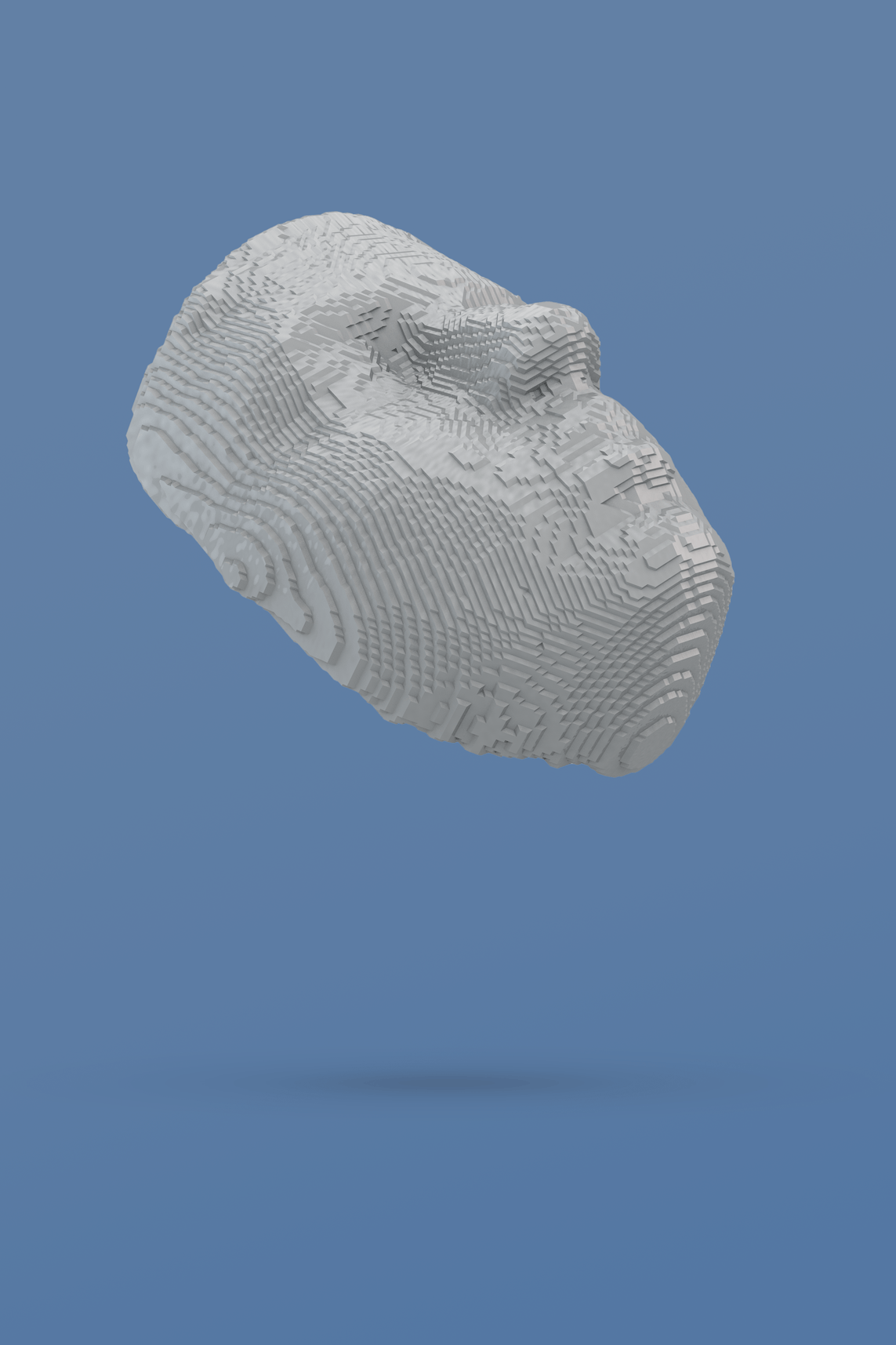

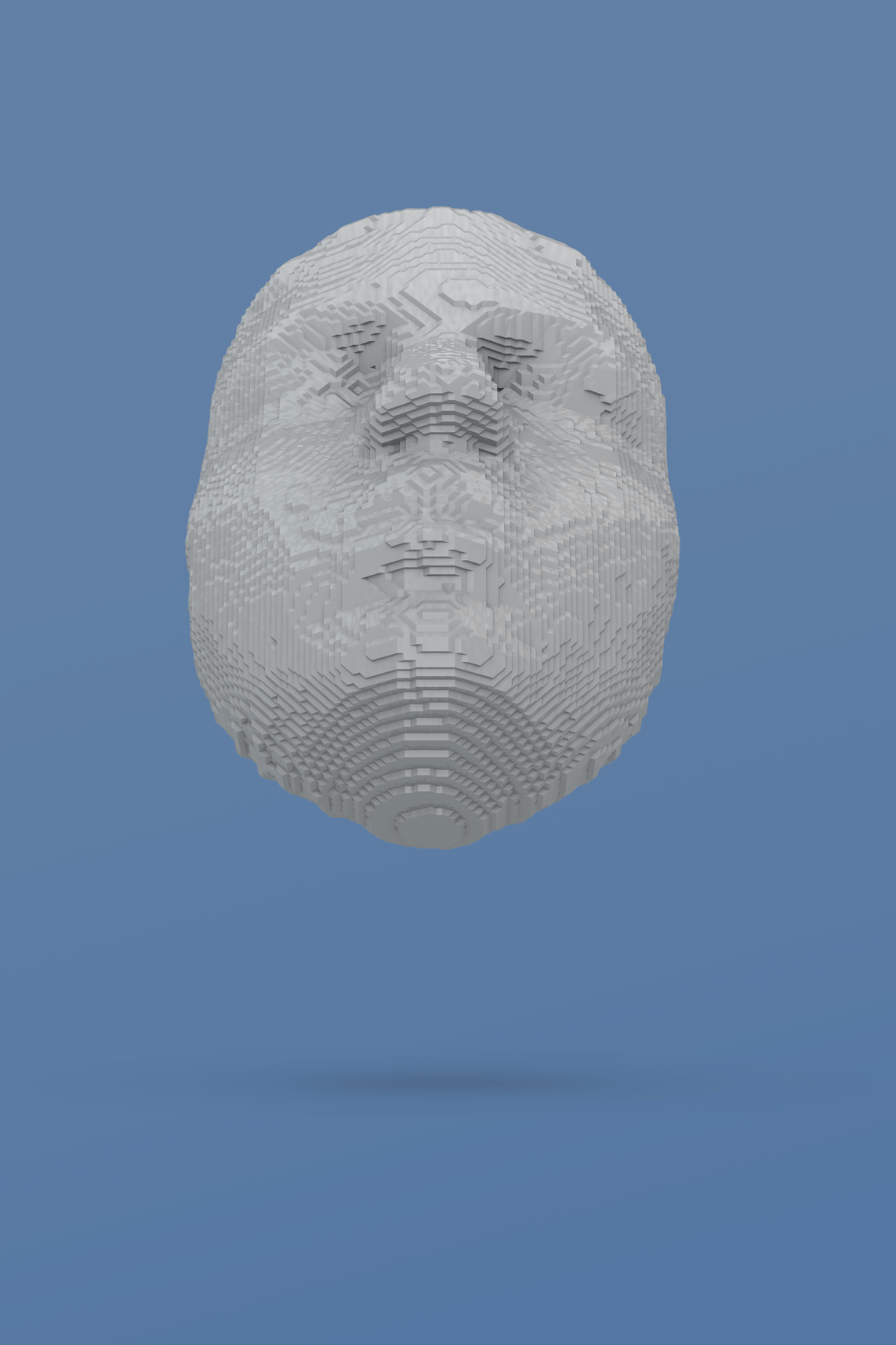

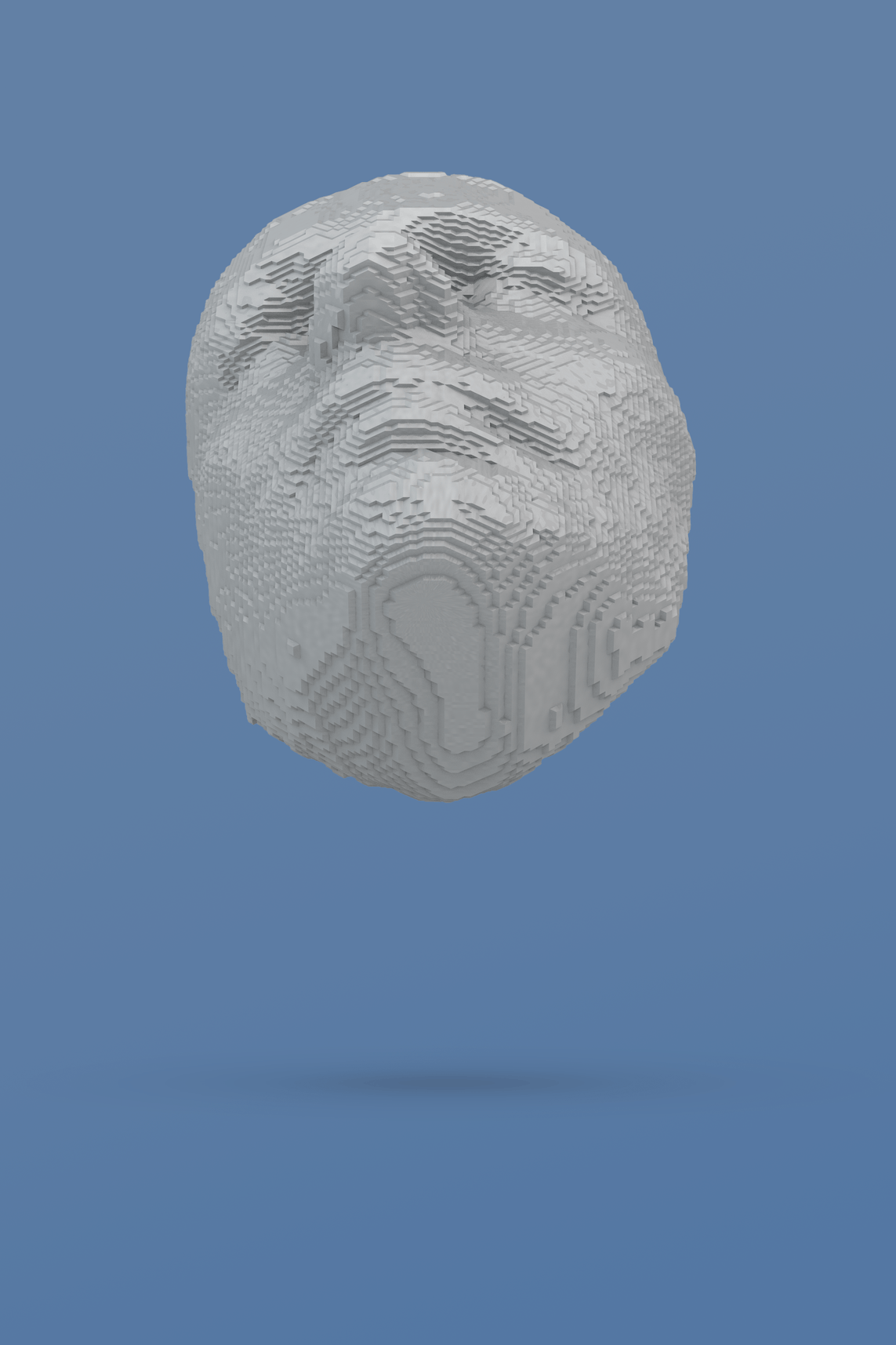

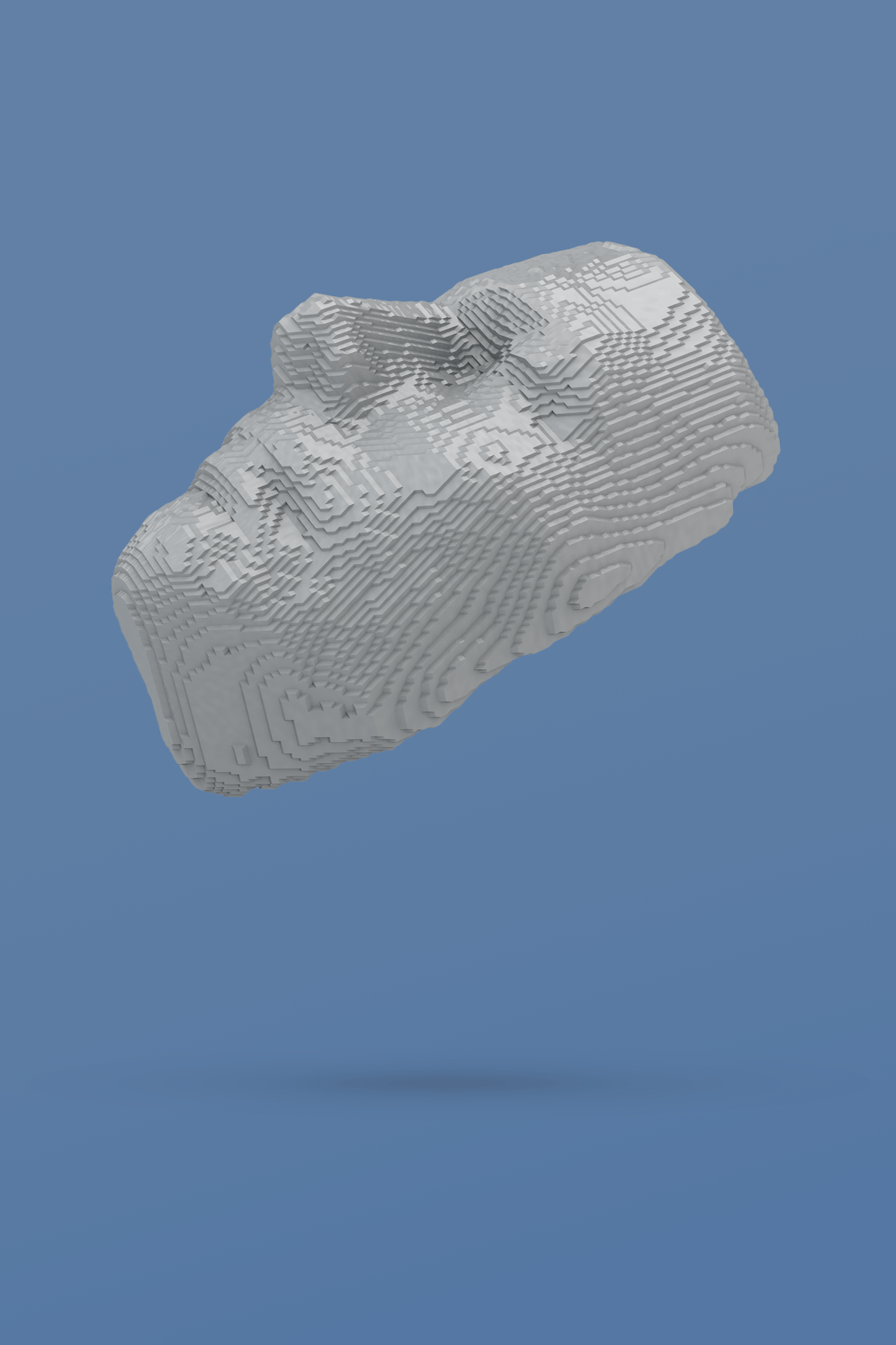

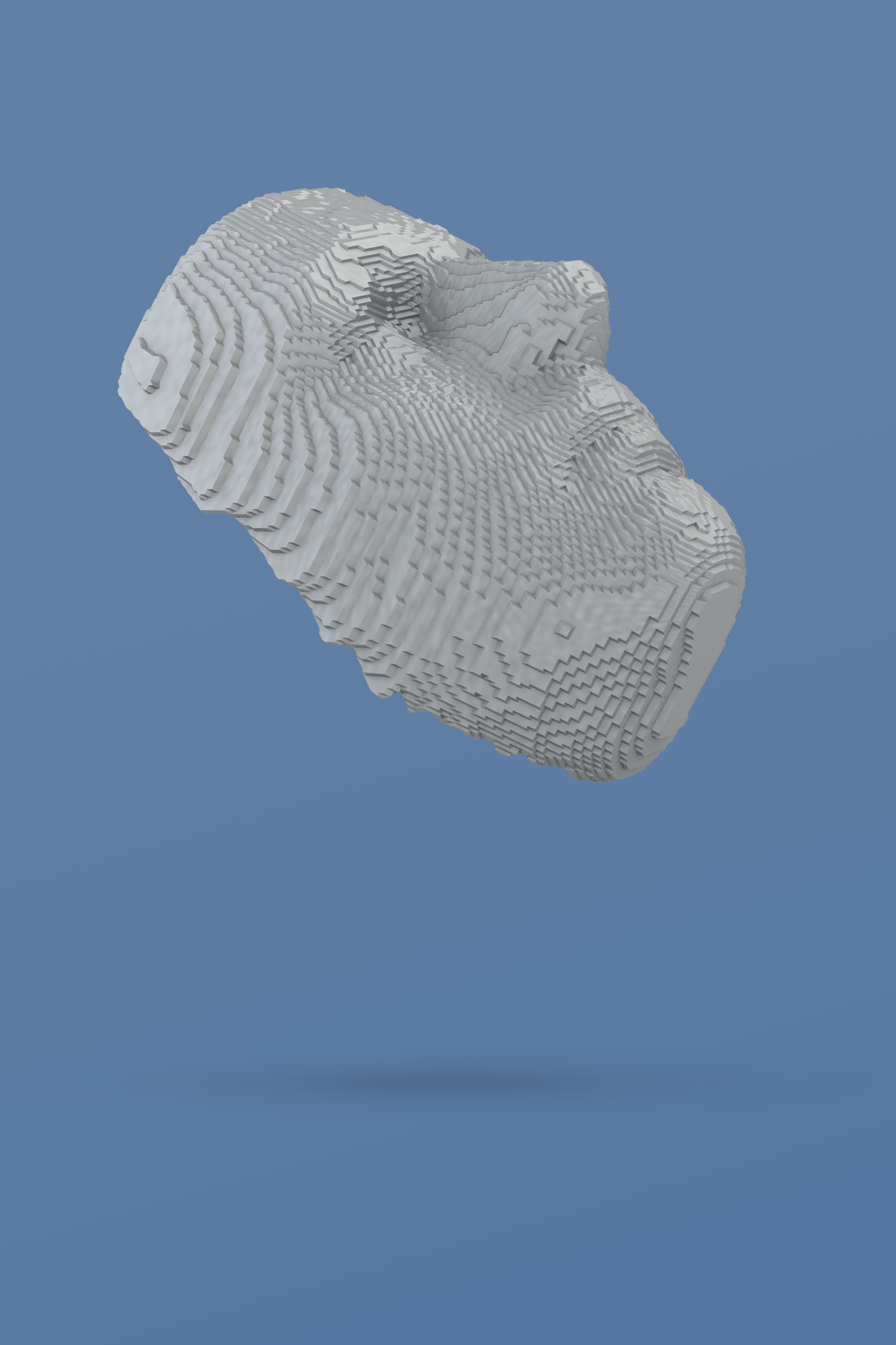

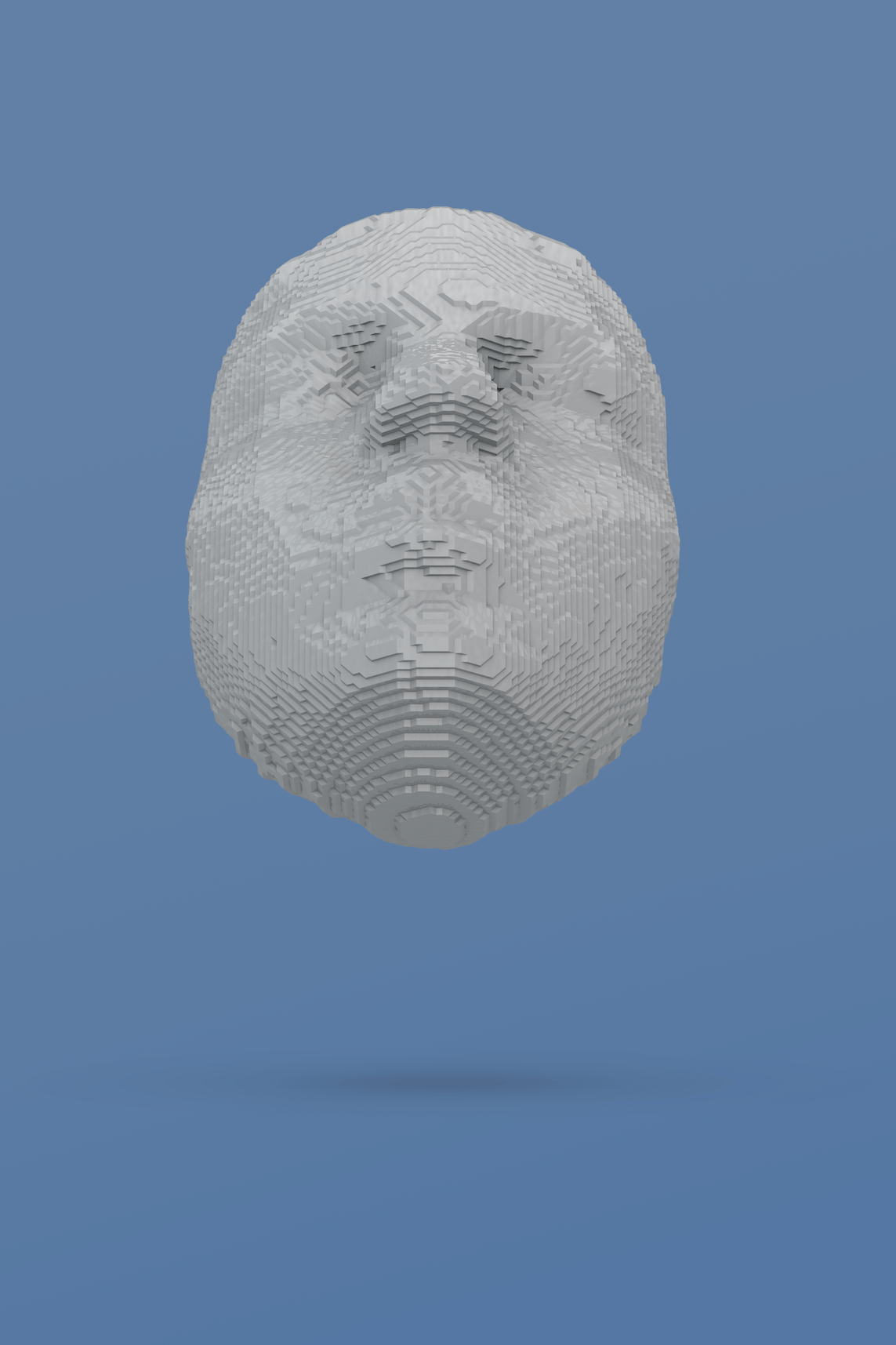

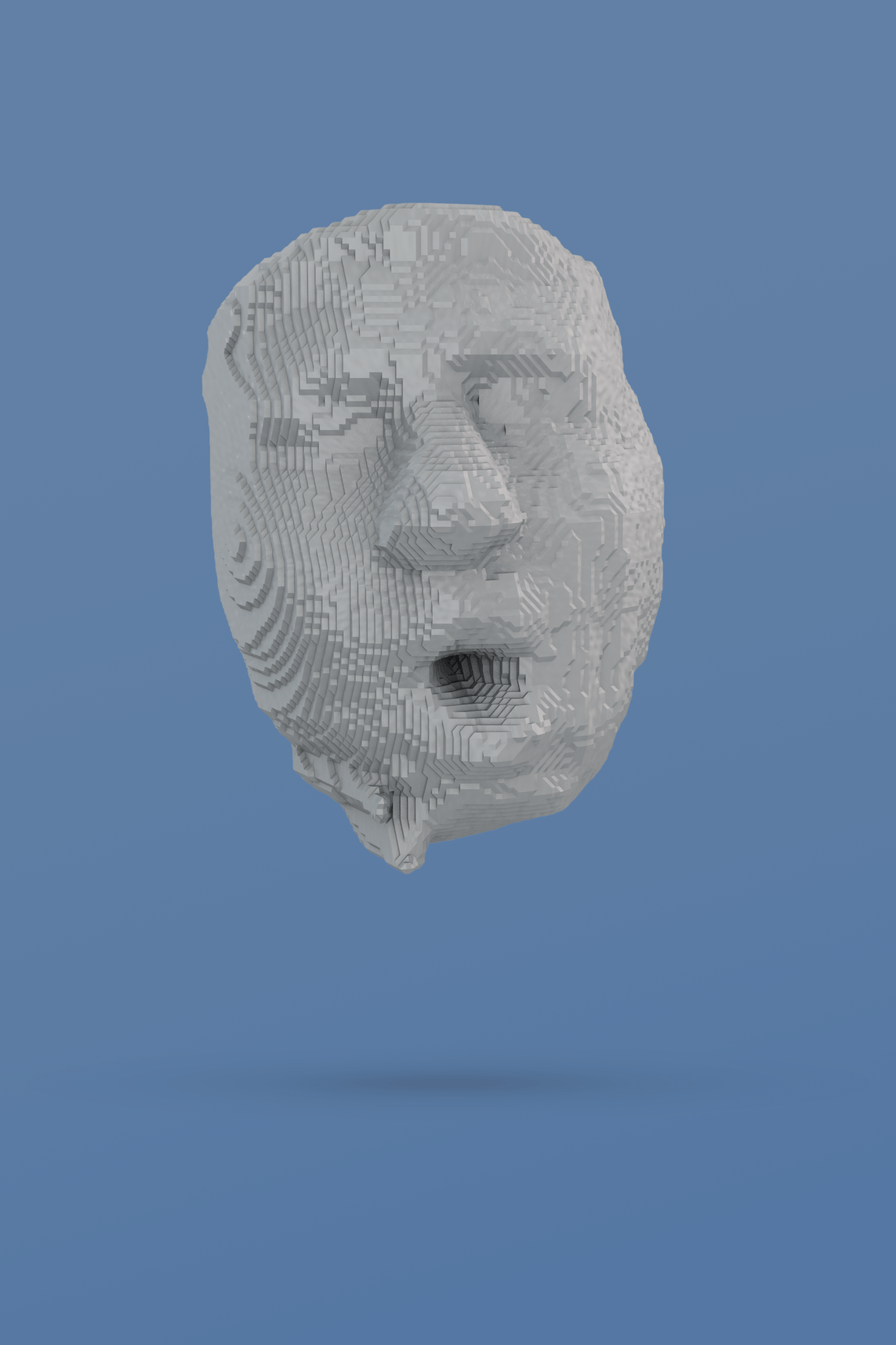

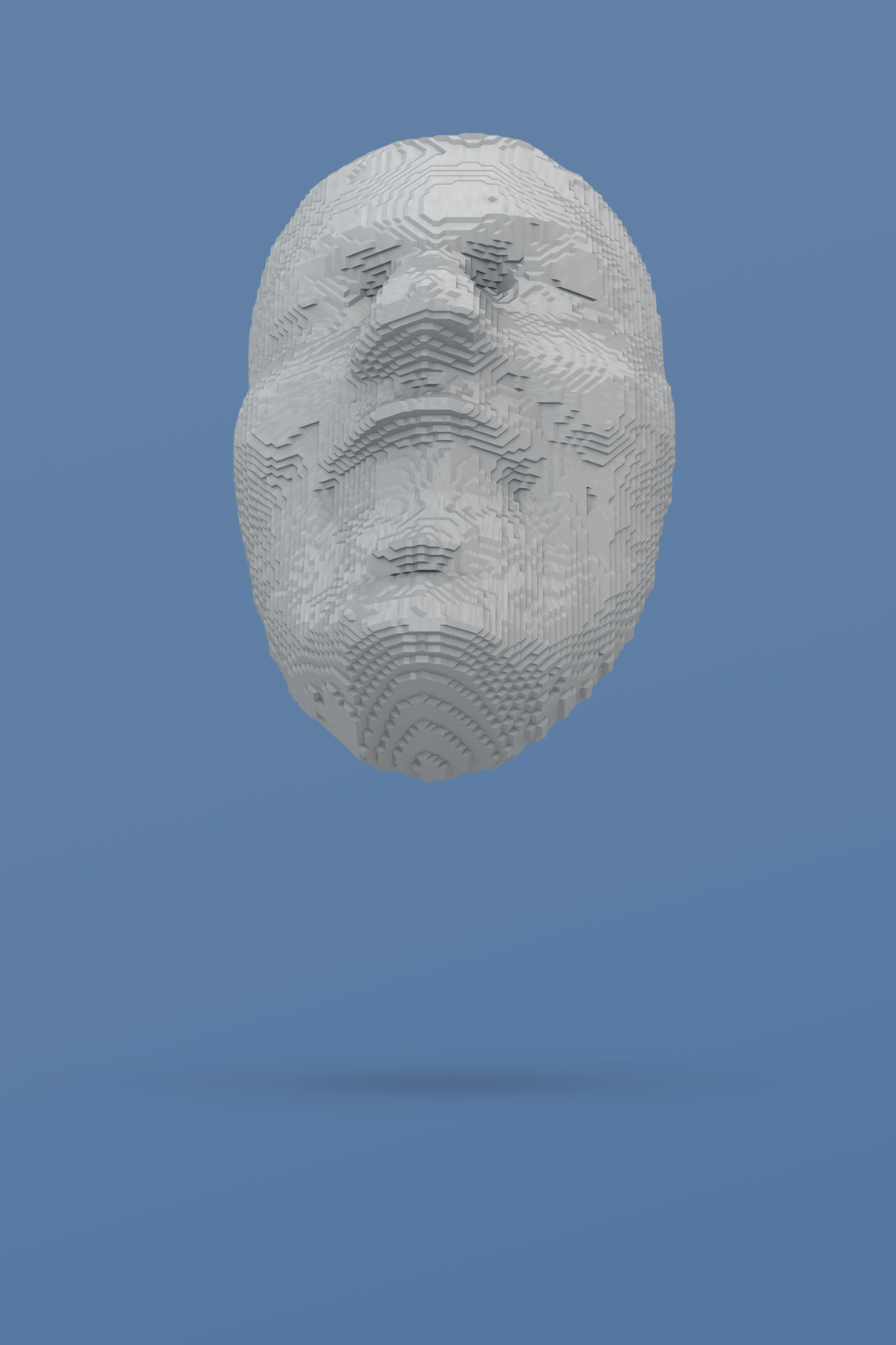

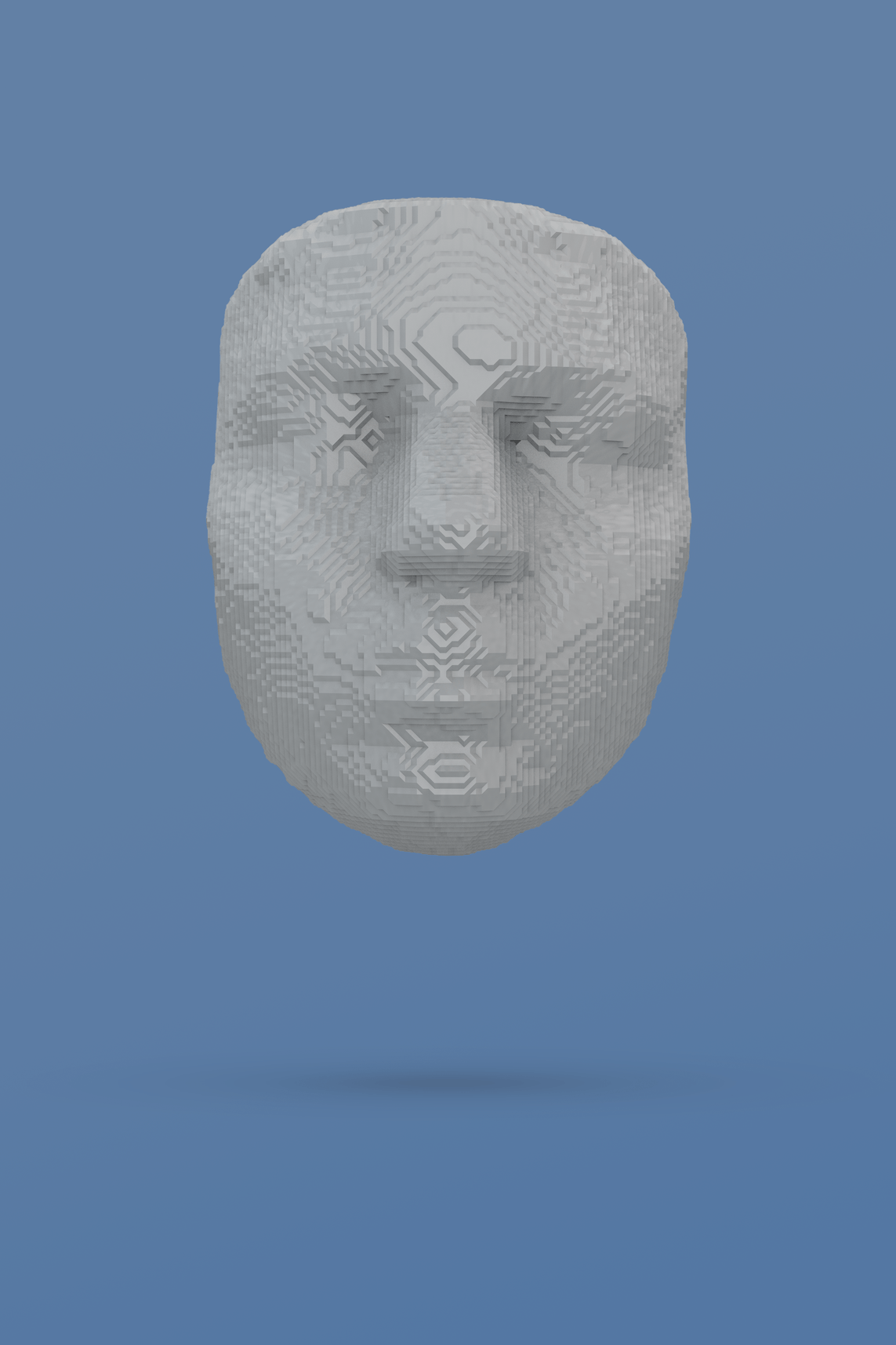

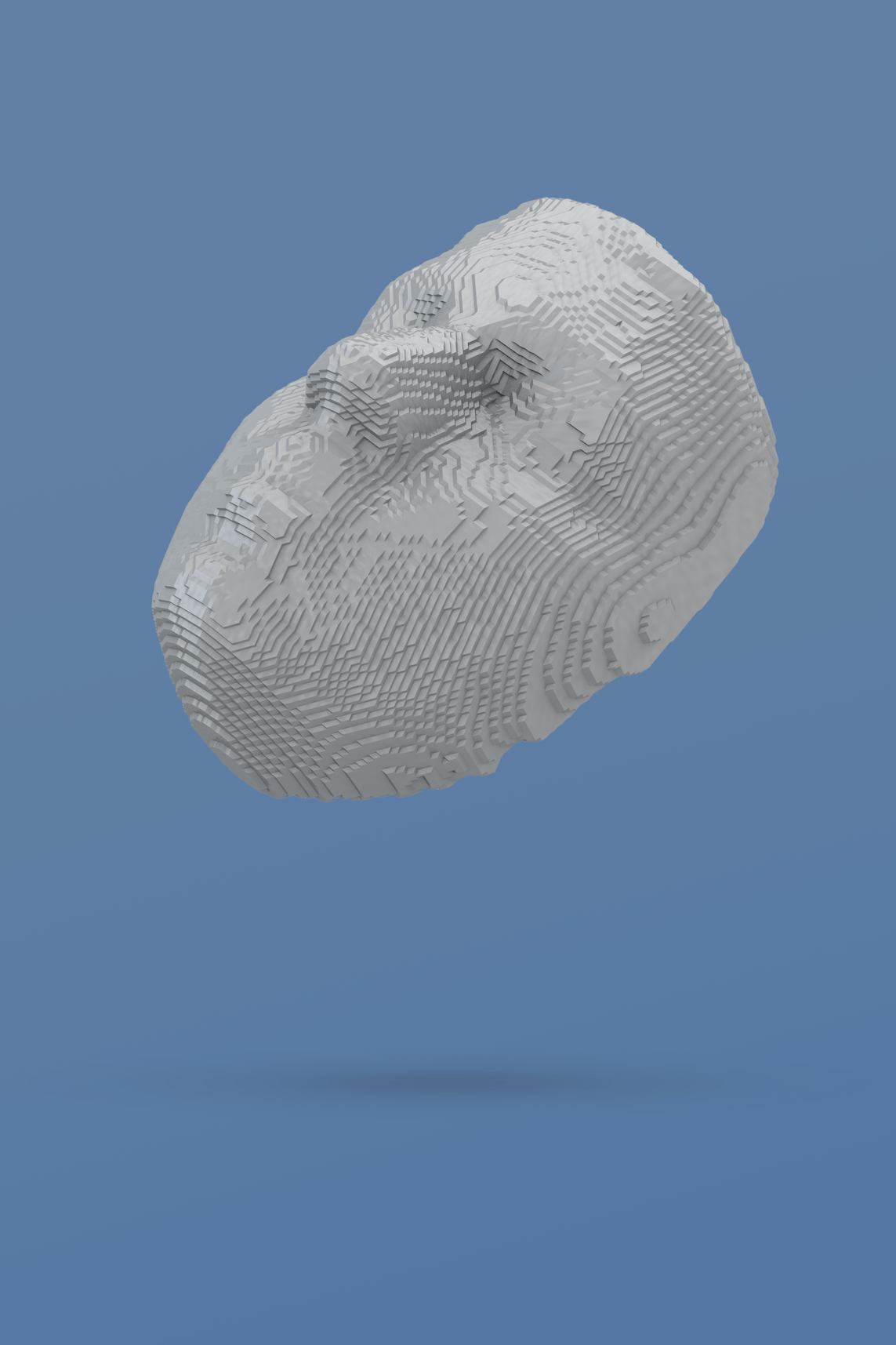

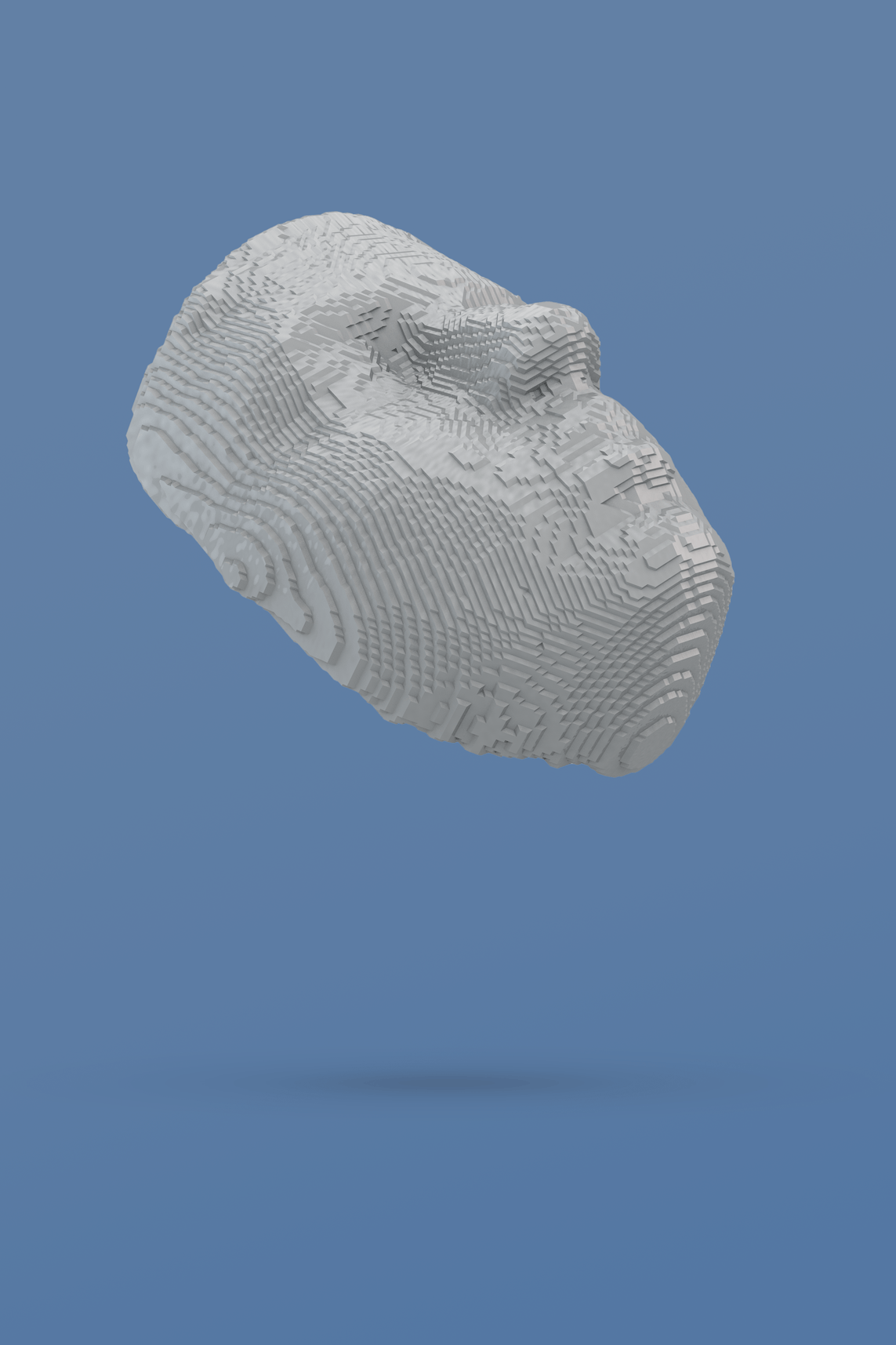

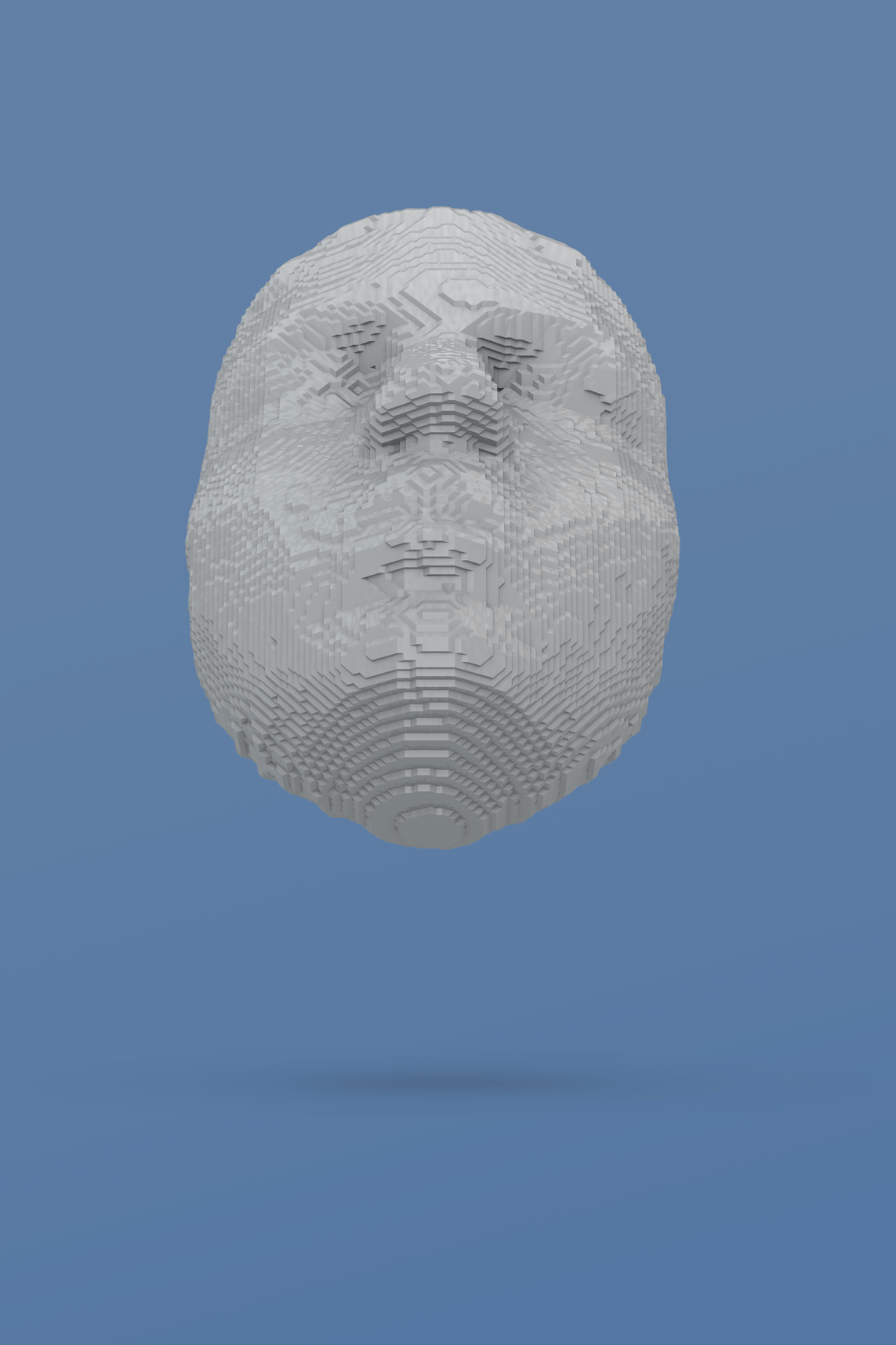

Cloud Faces: The Collection

Sad, Male, 26 yrs.

Surprise, Female, 48 yrs.

Happy, Male, 34 yrs.

Neutral, Female, 19 yrs.

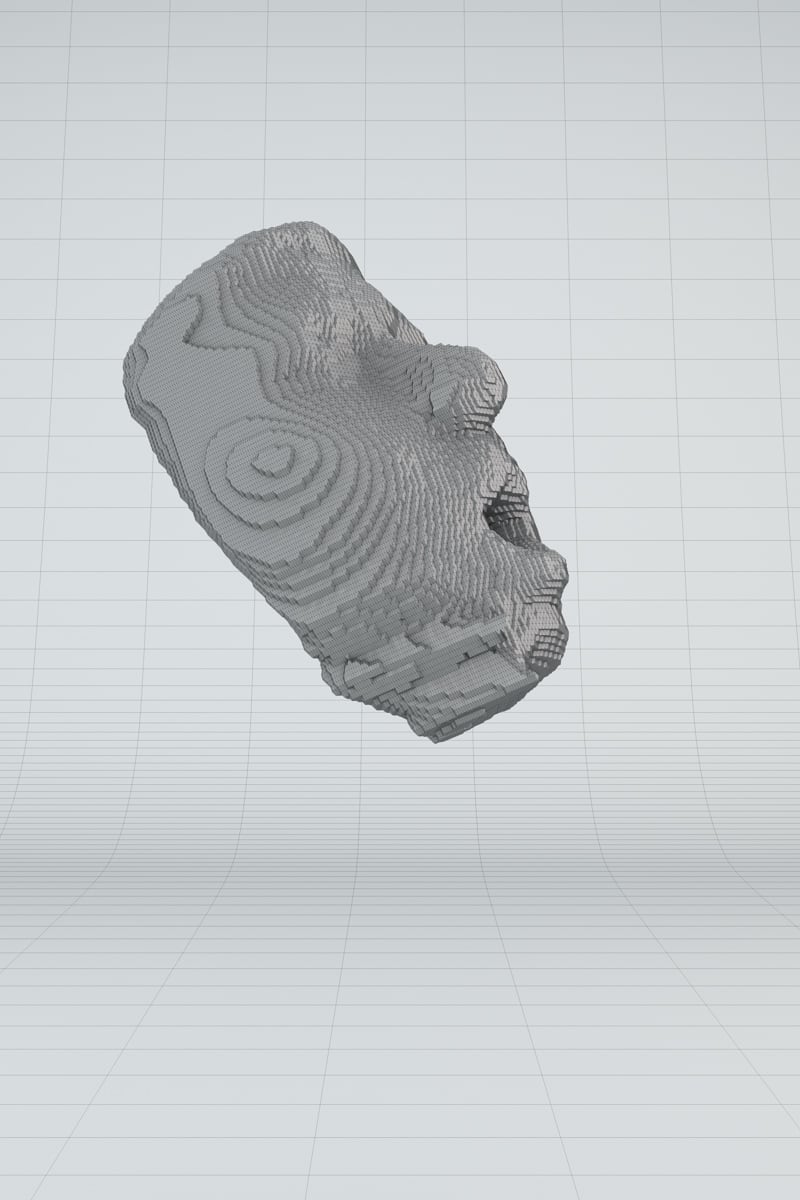

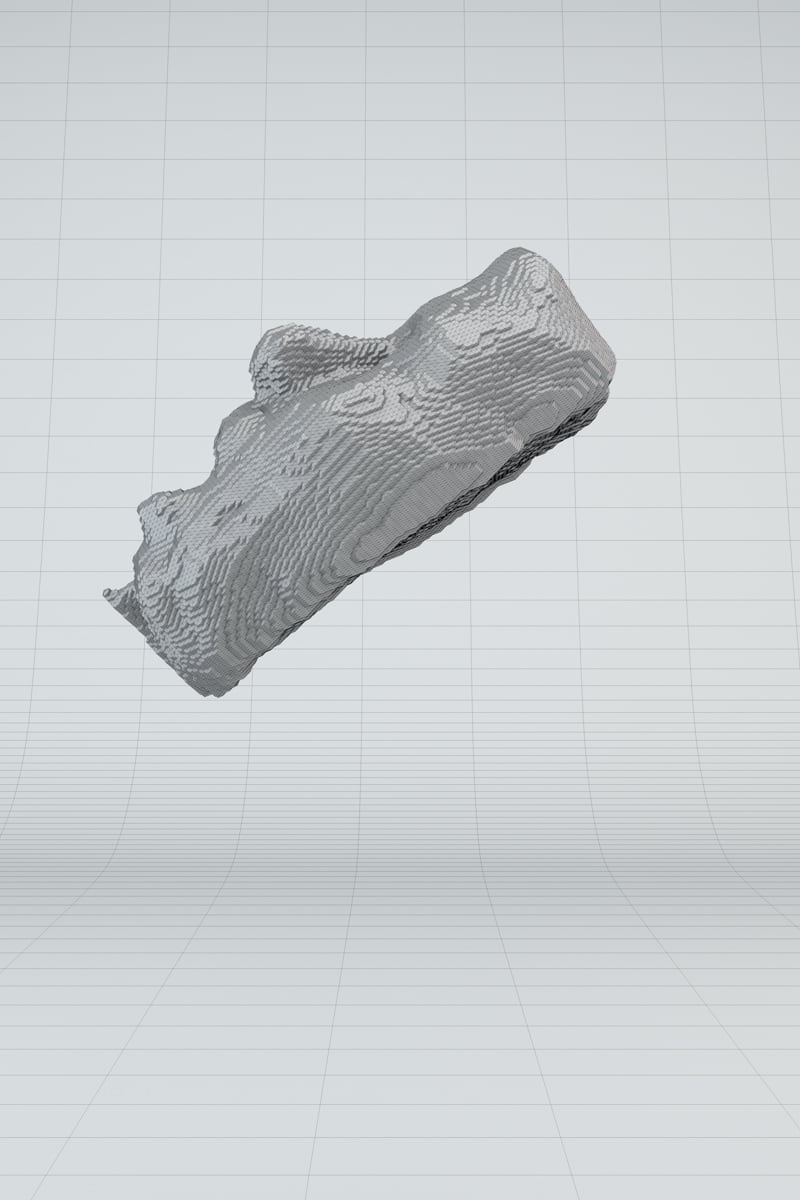

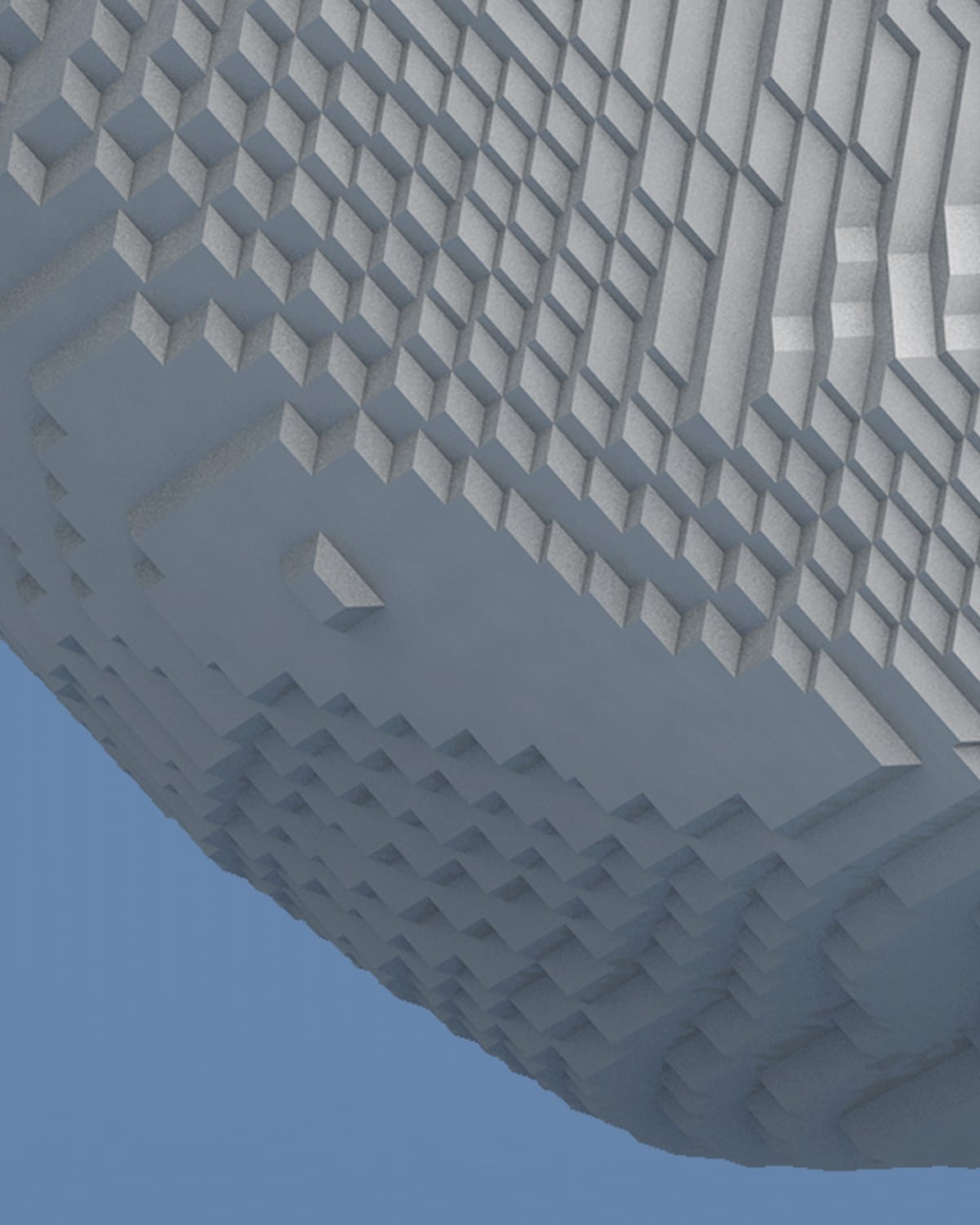

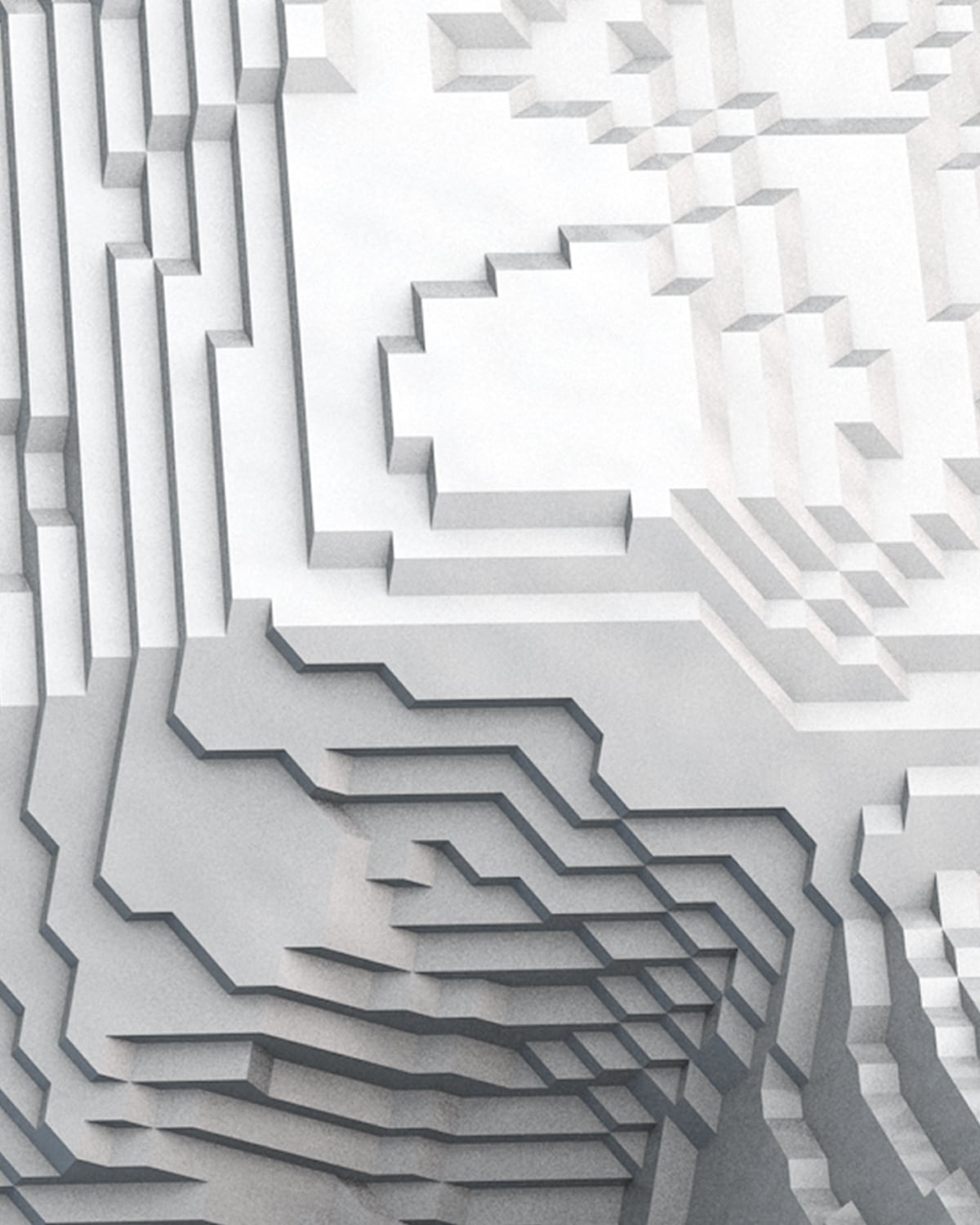

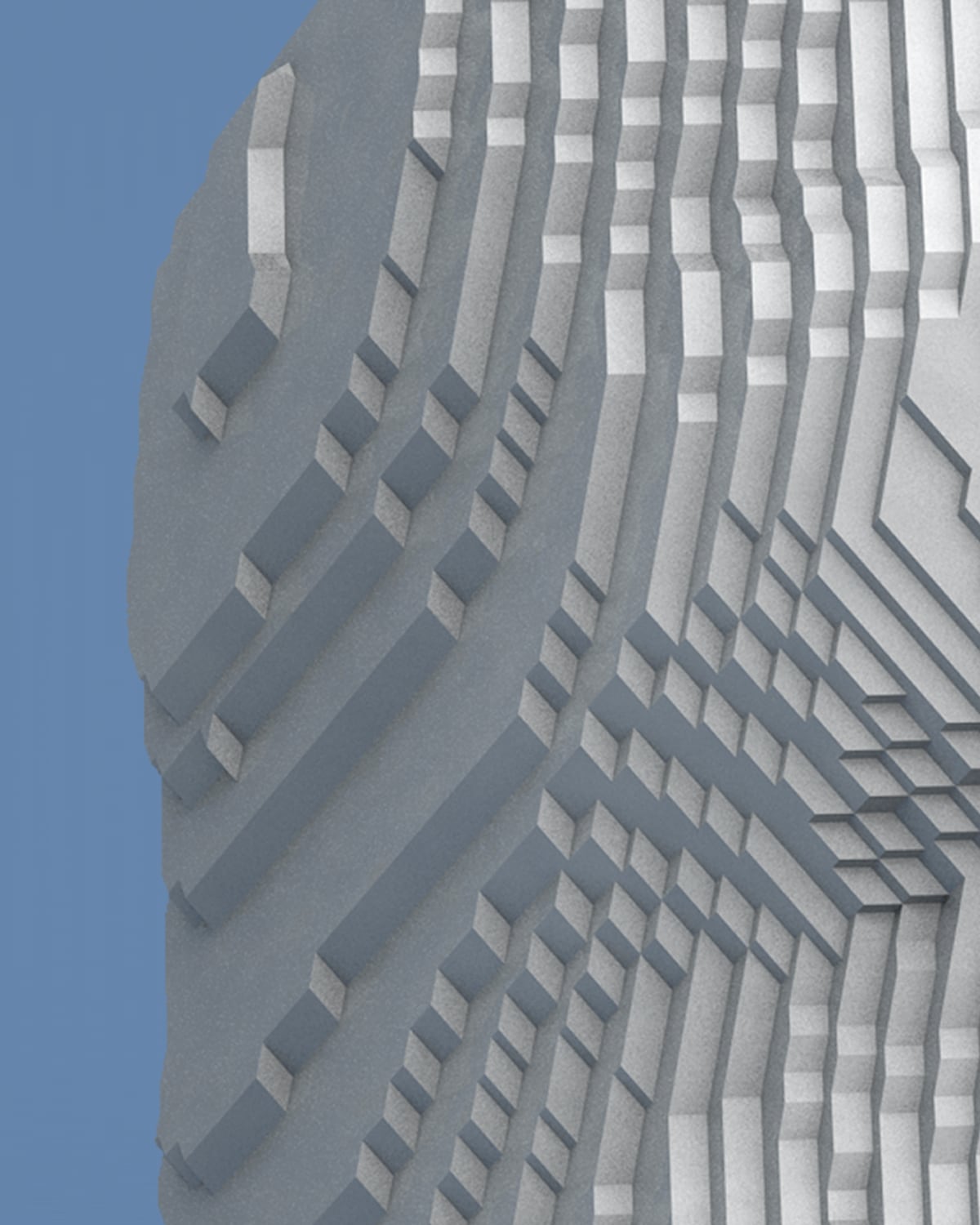

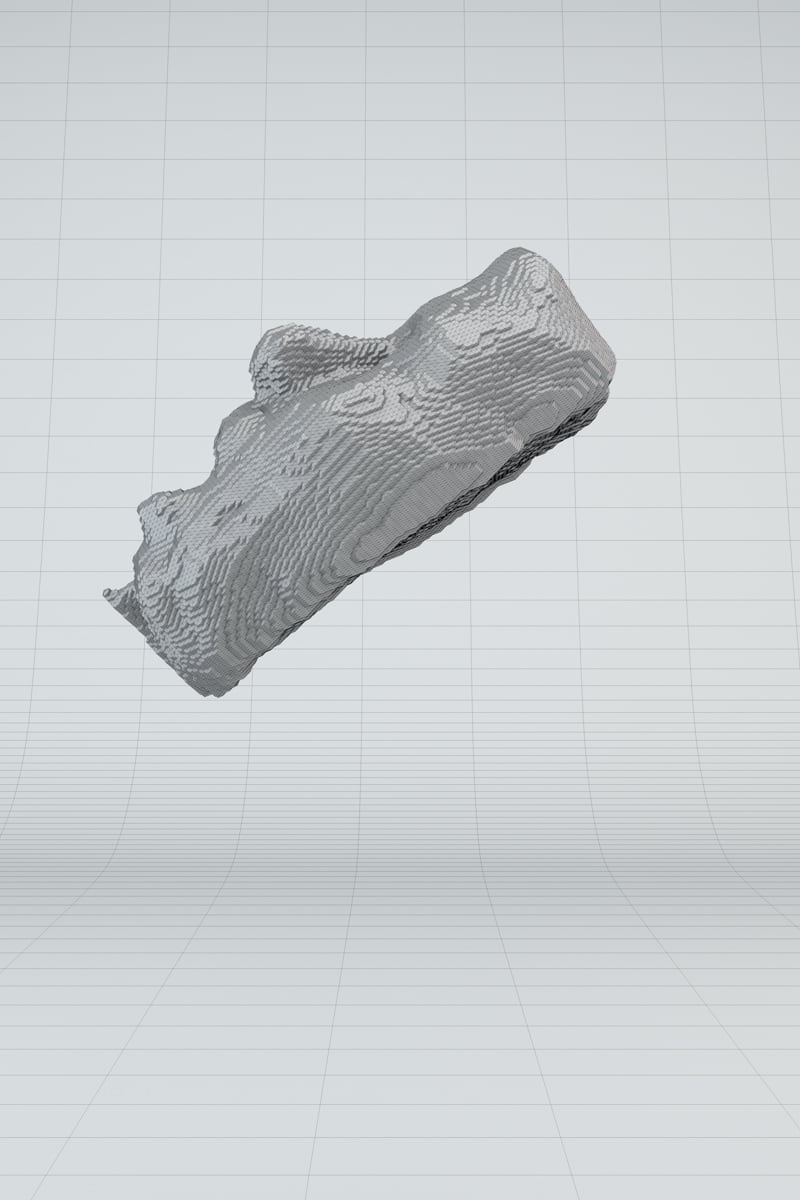

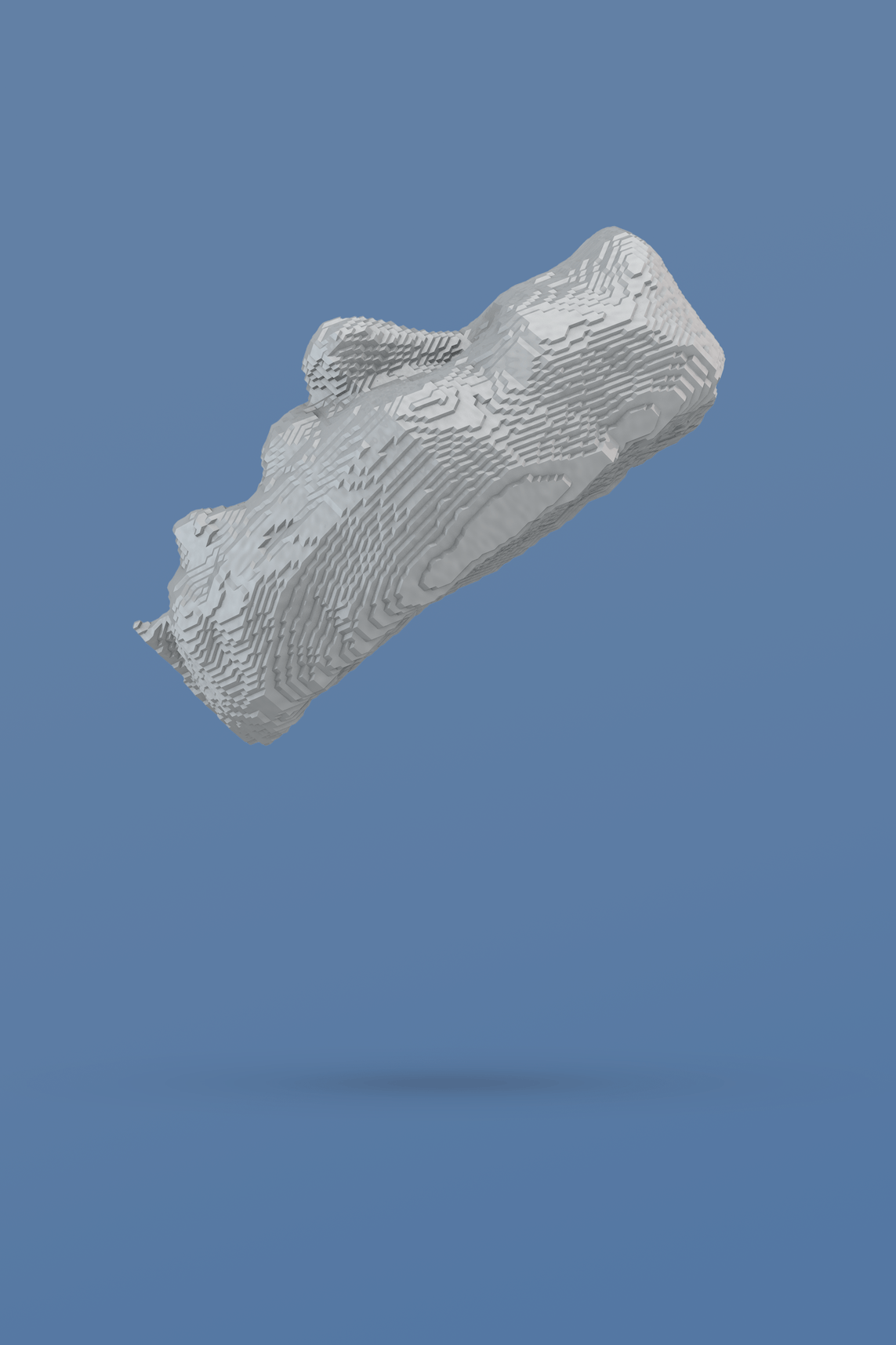

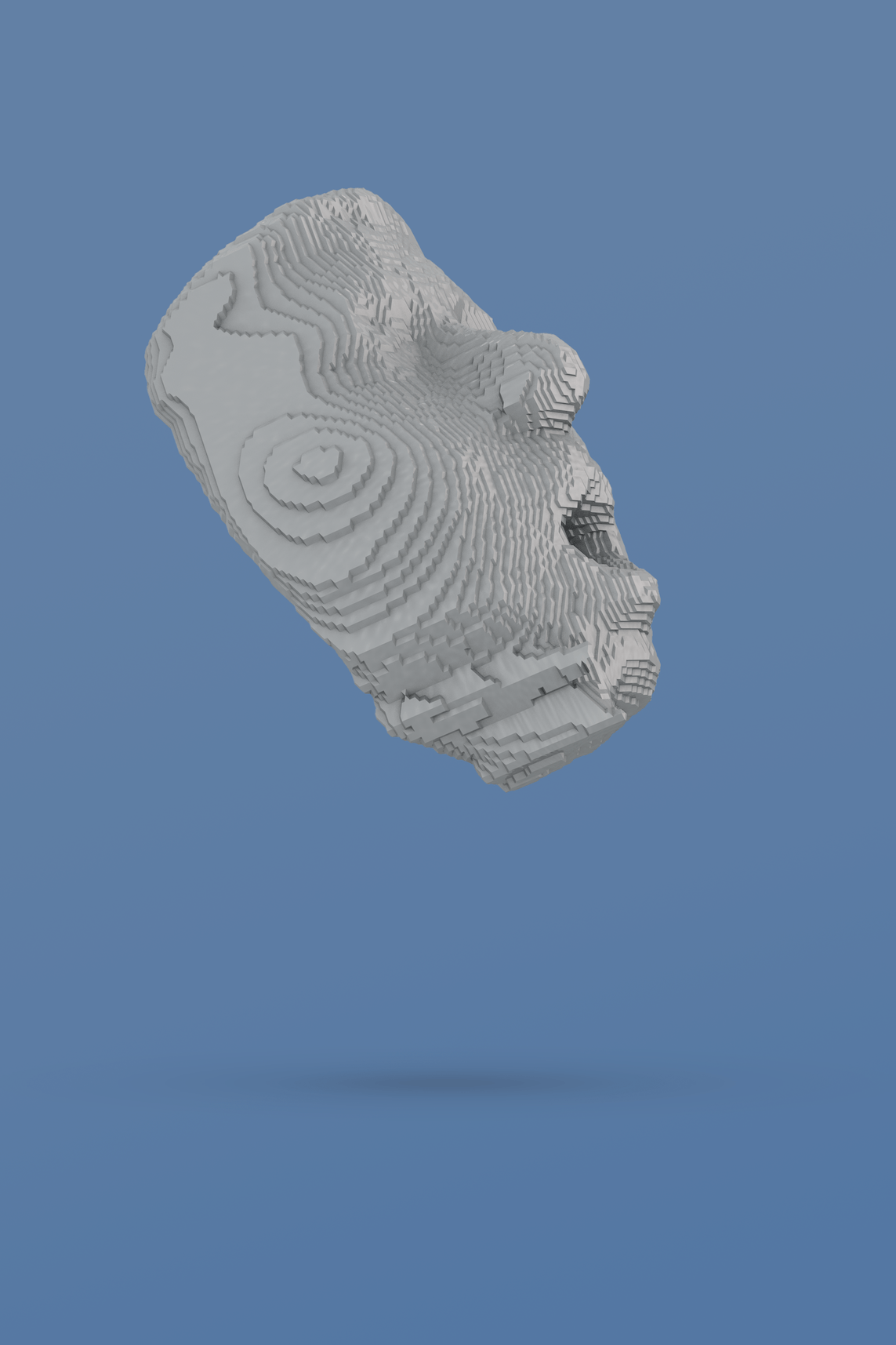

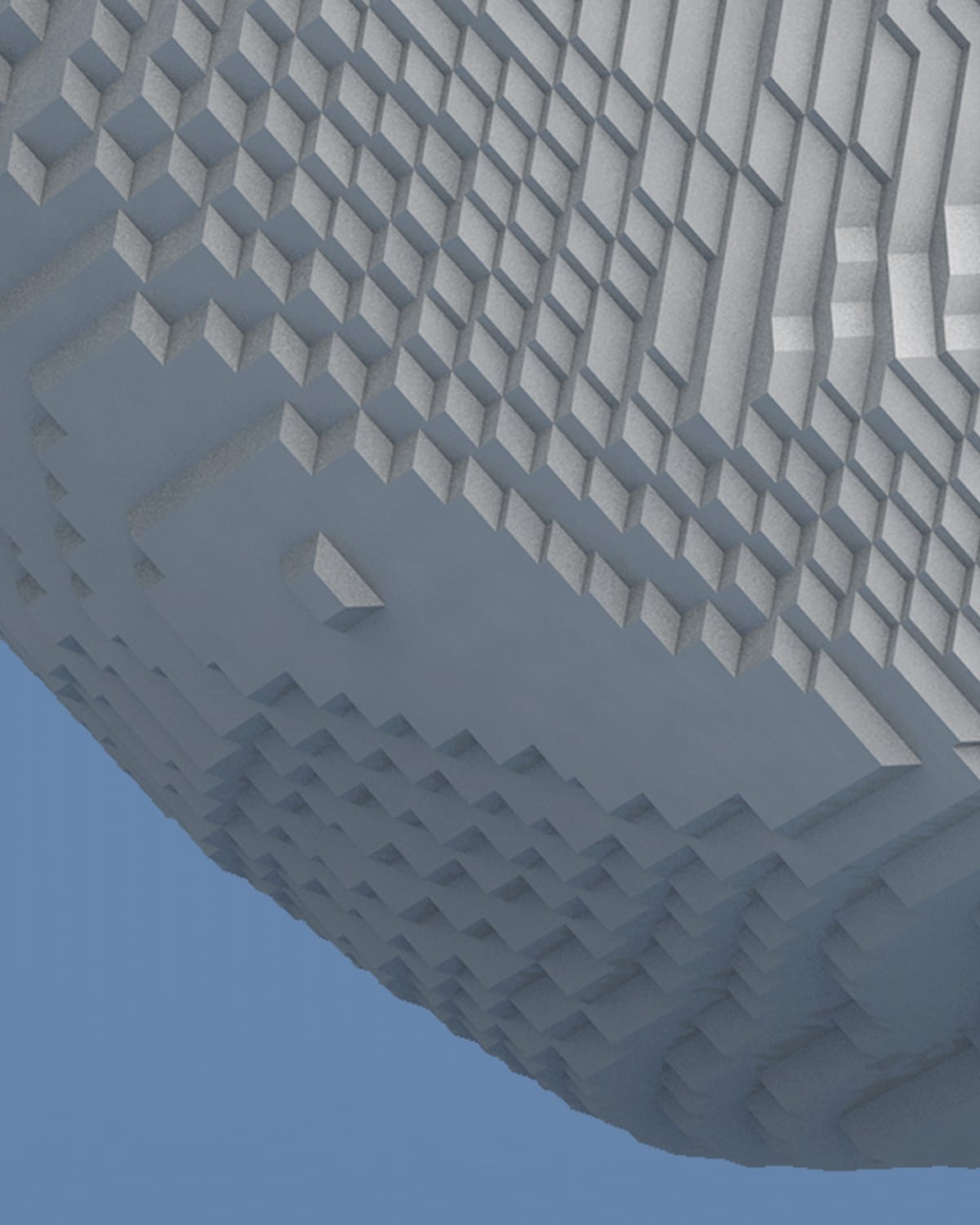

3D Close-ups of the structural outcome

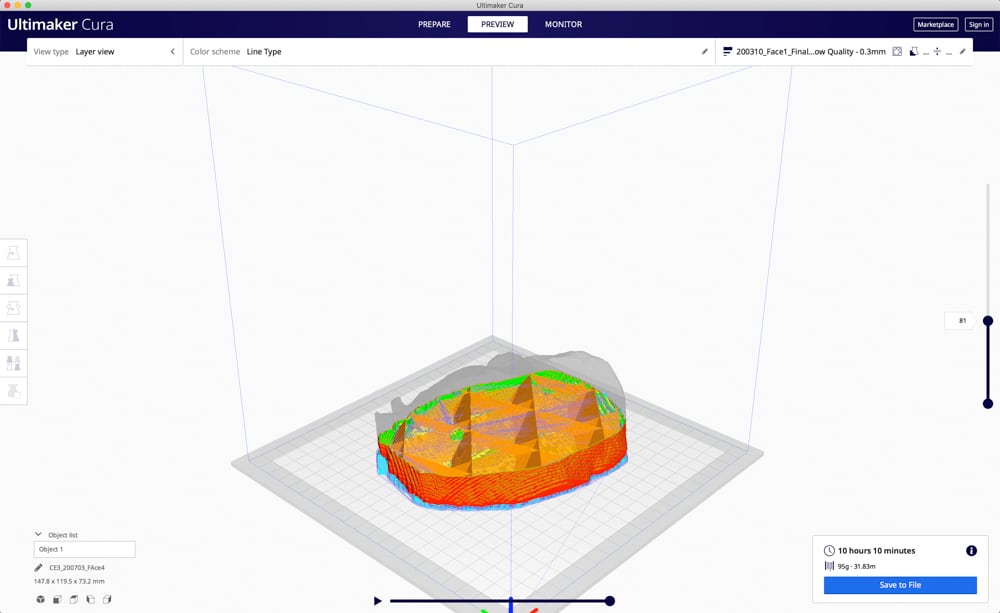

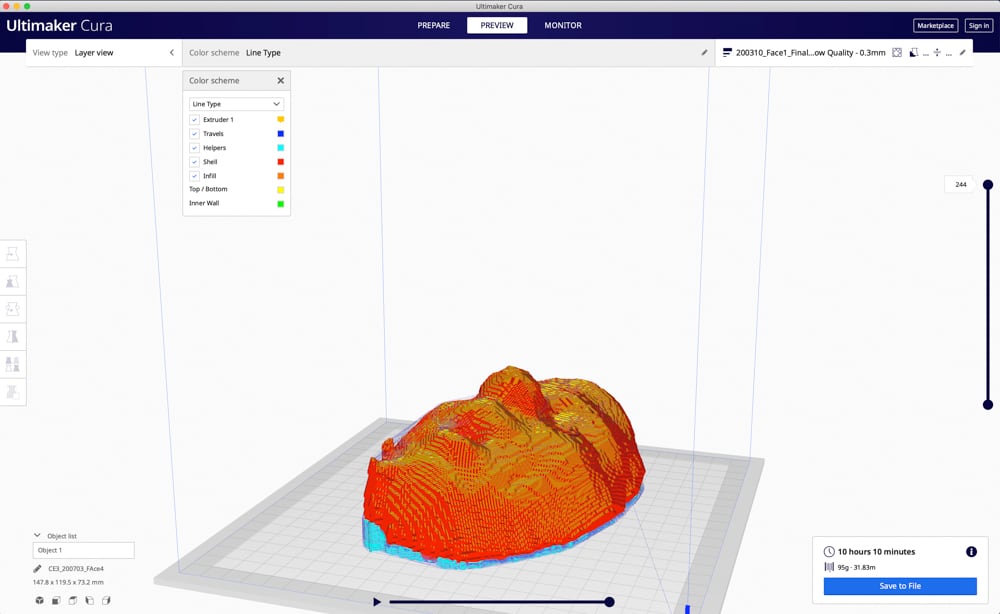

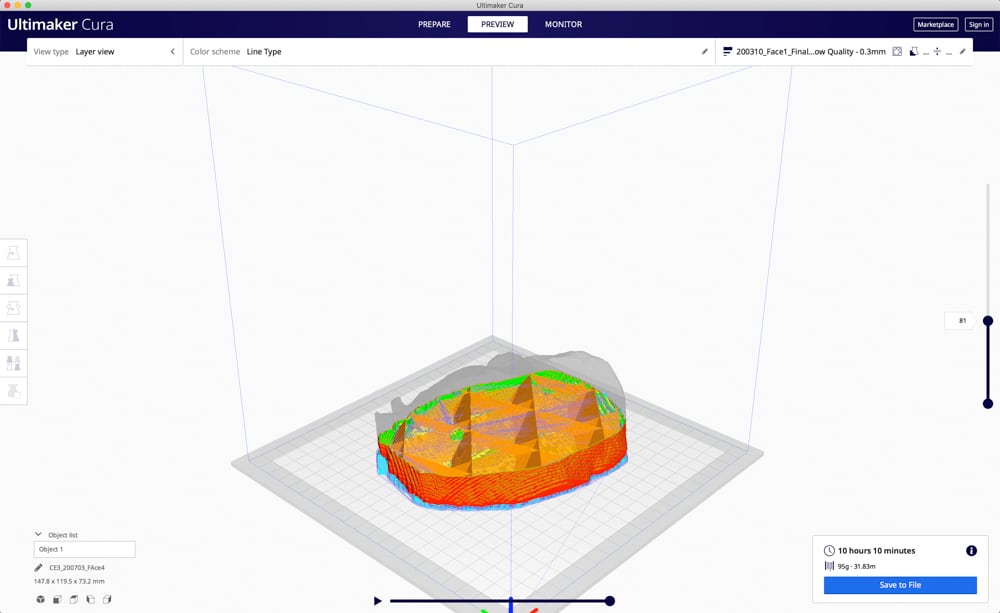

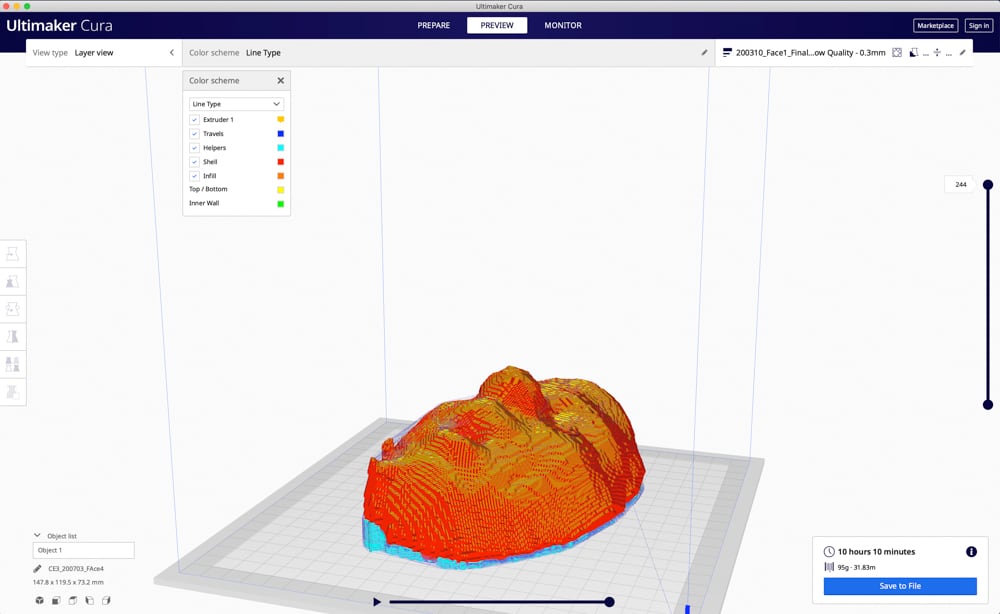

3D Printing

In this stage of the process, the model generated through machine learning is prepared in Cinema 4D to make it suitable for 3D printing. The prepared model is then sent to the 3D printer for the final printing phase.
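One common requirement for a printable model is that its mesh is closed ("watertight"), meaning every edge is shared by exactly two triangles. The following Python sketch shows that check on a plain list of triangles. It only illustrates the kind of preparation involved: the source does not say which checks were carried out in Cinema 4D, and the function name `is_watertight` and the triangle representation are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

# A triangle is given as three vertex indices (assumed representation).
Triangle = Tuple[int, int, int]


def is_watertight(triangles: Iterable[Triangle]) -> bool:
    """Return True if every edge of the mesh is shared by exactly two triangles."""
    edge_counts: Counter = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted endpoints so direction does not matter.
            edge_counts[(min(u, v), max(u, v))] += 1
    # An empty mesh has no surface at all, so it is not treated as printable.
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())
```

A closed tetrahedron passes this check, while the same shape with one face removed does not, because the edges around the hole belong to only one triangle each.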

Preparing the 3D model in Ultimaker

Timelapse videos of the printing process

Exhibition at Berlin University of the Arts

During the Rundgang exhibition at the University of the Arts, four 3D-printed cloud faces were shown, accompanied by narratives of their origins, aiming to evoke contemplation and dialogue among visitors regarding the intersection of art, technology, and cultural heritage.
